The idea for Capsule started with a tweet about reinventing social media.

A day later, cryptography researcher Nadim Kobeissi, best known for authoring the open source e2e encrypted desktop chat app Cryptocat (now discontinued), had pulled in a pre-seed investment of $100,000 for his lightweight mesh-networked microservices concept, with support coming from angel investor and former Coinbase CTO Balaji Srinivasan, William J. Pulte and Wamda Capital.

The nascent startup has a post-money valuation on paper of $10M, according to Kobeissi, who is working on the prototype — hoping to launch an MVP of Capsule in March (as a web app), after which he intends to raise a seed round (targeting $1M-$1.5M) to build out a team and start developing mobile apps.

For now there’s nothing to see beyond Capsule’s landing page and a pitch deck (which he shared with TechCrunch for review). But Kobeissi says he was startled by the level of interest in the concept.

“I posted that tweet and the expectation that I had was that basically 60 people max would retweet it and then maybe I’ll set up a Kickstarter,” he tells us. Instead the tweet “just completely exploded” and he found himself raising $100k “in a single day” — with $50k paid in there and then.

“I’m not a startup guy. I’ve been running a business based on consulting and based on academic R&D services,” he continues. “But by the end of the day — last Sunday, eight days ago — I was running a Delaware corporation valued at $10M with $100k in pre-seed funding, which is insane. Completely insane.”

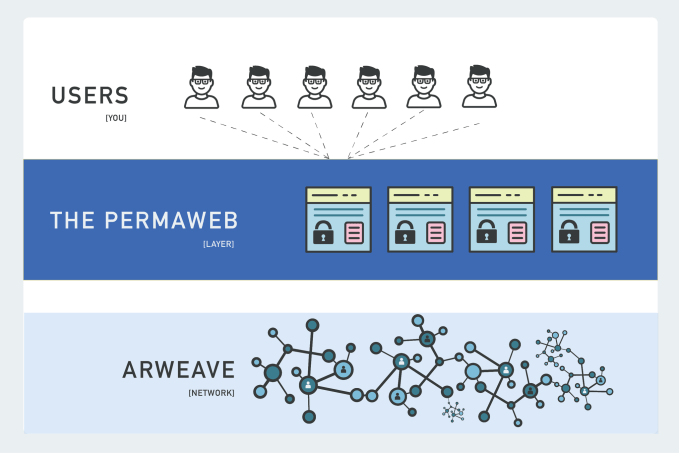

Capsule is just the latest contender for retooling Internet power structures by building infrastructure that radically decentralizes social platforms to make speech more resilient to corporate censorship and control.

The list of decentralized/p2p/federated protocols and standards already out there is very long — even while usage remains low. Extant examples include ActivityPub, Diaspora, Mastodon, p2p Matrix, Scuttlebutt, Solid and Urbit, to name a few.

Interest in the space has been rekindled in recent weeks after mainstream platforms like Facebook and Twitter took decisions to shut down US president Donald Trump’s access to their megaphones — a demonstration of private power that other political leaders have described as problematic.

Kobeissi also takes that view, while adding the caveat that he’s not “personally” concerned about Trump’s deplatforming. But he says he is concerned about giant private corporations having unilateral power to shape Internet speech — whether takedown decisions are being made by Twitter’s trust & safety lead or Amazon Web Services (which recently yanked the plug on right-wing social network Parler for failing to moderate violent views).

He also points to a lawsuit that’s been filed in US court seeking damages and injunctive relief from Apple for allowing Telegram, a messaging platform with 500M+ users, to be made available through its iOS App Store — “despite Apple’s knowledge that Telegram is being used to intimidate, threaten, and coerce members of the public” — raising concerns about “the odds of these efforts catching on”.

“That is kind of terrifying,” he suggests.

Capsule would seek to route around the risk of mass deplatforming via “easy to deploy” p2p microservices — starting with a forthcoming web app.

“When you deploy Capsule right now — I have a prototype that does almost nothing running — it’s basically one binary. And you get that binary and you deploy it and you run it, and that’s it. It sets up a server, it contacts Let’s Encrypt, it gets you a certificate, it uses SQLite for the database, which is a server-less database, all of the assets for the web server are within the binary,” he says, walking through the “really nice technical idea” which snagged $100k in pre-seed backing insanely fast.

“There are no other files — and then once you have it running, in that folder when you set up your capsule server, it’s just the Capsule program and a Capsule database which is a file. And that’s it. And that is so self-contained that it’s embeddable everywhere, that’s migratable — and it’s really quite impossible to get this level of simplicity and elegance so quickly unless you go this route. Then, for the mesh federation thing, we’re just doing HTTPS calls and then having decentralized caching of the databases and so on.”
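The shape Kobeissi describes (one program, assets carried inside it, one SQLite file as the database) can be illustrated with a minimal sketch. This is not Capsule's code: the `ASSETS` table, the `posts` schema, the `capsule.db` filename and every function name below are assumptions made for illustration, and the Let's Encrypt certificate step is left out entirely.

```python
import sqlite3
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical embedded assets: they live inside the program, not in separate files.
ASSETS = {
    "/": ("text/html", b"<!doctype html><title>capsule</title><h1>My capsule</h1>"),
    "/style.css": ("text/css", b"body { font-family: sans-serif; }"),
}


def open_db(path="capsule.db"):
    """Open (or create) the single-file SQLite database and its one table."""
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE IF NOT EXISTS posts ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, body TEXT NOT NULL)"
    )
    return db


def add_post(db, body):
    """Store one post and return its row id."""
    cur = db.execute("INSERT INTO posts (body) VALUES (?)", (body,))
    db.commit()
    return cur.lastrowid


def list_posts(db):
    """Return all post bodies, oldest first."""
    return [row[0] for row in db.execute("SELECT body FROM posts ORDER BY id")]


def resolve_asset(path):
    """Look up an embedded asset; returns (content_type, payload) or None."""
    return ASSETS.get(path)


class CapsuleHandler(BaseHTTPRequestHandler):
    """Serves only the embedded assets; anything else is a 404."""

    def do_GET(self):
        asset = resolve_asset(self.path)
        if asset is None:
            self.send_error(404)
            return
        content_type, payload = asset
        self.send_response(200)
        self.send_header("Content-Type", content_type)
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port=8080):
    """Run the server over plain HTTP on localhost (TLS deliberately omitted)."""
    HTTPServer(("127.0.0.1", port), CapsuleHandler).serve_forever()
```

Calling `open_db()` and then `serve()` would leave exactly two things in the folder, the script and `capsule.db`, which is the property the quote emphasizes.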

Among the Twitter back-and-forth about how (or whether) Kobeissi’s concept differs from various other decentralized protocols, someone posted a link to an XKCD cartoon that lampoons the techie quest to resolve competing standards by proposing a tech that covers all use-cases (yet is of course doomed to increase complexity by +1). So, given how many protocols already offer self-hosted/p2p social media services, it seems fair to ask what’s different here and, indeed, why build another open decentralized standard?

Kobeissi argues that existing options for decentralizing social media are either: A) not fully p2p (Mastodon is “self-hosted but not decentralized”, per a competitive analysis in Capsule’s pitch deck, ergo its servers are “vulnerable to Parler-style AWS takedowns”); or B) not focused enough on the specific use-case of social media (some other decentralized protocols like Matrix aim to support many more features/apps than social media and therefore, the argument goes, can’t be as lightweight); or C) simply not easy enough to use to be more than a niche geeky option.

He talks about Capsule having the same level of focus on social media as Signal does on private messaging, for example — albeit intending it to support both short-form ‘tweet’ style public posts and long-form Medium-style postings. But he’s vocal about not wanting any ‘bloat’.

He also invokes Apple’s ‘design for usability’ philosophy. Granted, it’s a lot easier to say you want to design something that ‘just works’ than to actually pull off effortless mainstream accessibility. But that’s the bar Kobeissi is setting himself here.

“I always imagine Glenn Greenwald when I think of my user,” he says on the usability point, referring to the outspoken journalist and Intercept co-founder who recently left to launch his own newsletter-based offering on Substack. “He’s the person I see setting this up. Basically the way that this would work is he’d be able to set this up or get someone to set it up really easily — I think Capsule is going to offer automated deployments as also a way to make revenue, by the way, i.e. for a bit extra we deploy the server for you and then you’re self-hosting but we also make a margin off of that — but it’s going to be open source, you can set it up yourself as well and that’s perfectly okay. It’s not going to be hindered at all in that sense.

“In the case of Capsule, each content creator has their own website (has their own address, like Capsule.Greenwald.com) and then people go there and their first discovery of the mesh is through people that they’re interested in hearing from.”

Individual Capsules would be insulated from the risk of platform-level censorship since they’d be beyond the reach of takedowns by a single centralizing entity. They would still be hosted on the web, though, and could therefore be subject to a takedown by their own web host. That means illegal speech on Capsule could still be removed. However, there wouldn’t be a universal host whose decision could take a whole platform down in one sweep, as AWS just did to Parler.

“For every takedown it is entirely between that Capsule user and their hosting provider,” says Kobeissi. “Capsule users are going to have different hosting providers that they’re able to choose and then every time that there is a takedown it is going to be a decision that is made by a different entity. And with a different — perhaps — judgement, so there isn’t this centralized focus where only Amazon Web Services decides who gets to speak or only Twitter decides.”

And while web hosting at platform-giant scale involves just a handful of cloud providers able to offer the required scalability, he argues that this censorship-prone market concentration goes away once you’re dealing with scores of decentralized social media instances.

“We have the big hosting providers — like AWS, Azure, Google Cloud — but aside from that we have a lot of tiny hosting providers or small businesses… Sure if you’re running a big business you do get to focus on these big providers because they allow you to have these insane servers that are very powerful and deployable very easily but if you’re running a Capsule instance, as a matter of fact, the server resource requirements of running a Capsule instance are generally speaking quite small. In most instances tiny.”

Content would also be harder to scrub from Capsule because the mesh infrastructure would mean posts get mirrored across the network by the poster’s own followers (assuming they have any). So, for example, reposts wouldn’t just vanish the moment the original poster’s account was taken down by their hosting provider.

Separate takedown requests would likely be needed to scrub each reposted instance, adding a lot more friction to the business of content moderation vs the unilateral takedowns that platform giants can rain down now. The aim is to “spare the rest of the community from the danger of being silenced”, as Kobeissi puts it.
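The mirroring idea can be modeled in a few lines. The sketch below is a toy, in-memory illustration under loud assumptions: Capsule's actual federation design is only described in the source as HTTPS calls plus decentralized caching of databases, so the `Capsule` class, its fields and the `fetch` helper here are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Capsule:
    """A hypothetical self-hosted instance belonging to one user."""
    owner: str
    online: bool = True
    posts: dict = field(default_factory=dict)      # post_id -> text authored here
    mirrors: dict = field(default_factory=dict)    # (author, post_id) -> cached copy
    followers: list = field(default_factory=list)  # capsules that follow this one

    def follow(self, other):
        """Register this capsule as a follower of `other`."""
        other.followers.append(self)

    def publish(self, post_id, text):
        """Store a post locally and push a cached copy to every follower."""
        self.posts[post_id] = text
        for follower in self.followers:
            follower.mirrors[(self.owner, post_id)] = text


def fetch(author, post_id, network):
    """Return a post from the author's capsule or from any online mirror."""
    for capsule in network:
        if not capsule.online:
            continue  # this host has been taken down
        if capsule.owner == author and post_id in capsule.posts:
            return capsule.posts[post_id]
        cached = capsule.mirrors.get((author, post_id))
        if cached is not None:
            return cached
    return None
```

In this model, setting `online = False` on the author's capsule leaves the post retrievable until every follower holding a mirror has also been taken offline, which is the friction the passage above describes.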

Trump’s deplatforming does seem to have triggered a major penny-dropping moment for some: allowing a handful of corporate giants to own and operate centralized mass communication machines isn’t exactly healthy for democratic societies, as this unilateral control of infrastructure gives them the power to limit speech. (As, indeed, their content-sorting algorithms determine reach and set the agenda of much public debate.)

Current social media infrastructure also provides a few mainstream chokepoints for governments to lean on — amplifying the risk of state censorship.

With concerns growing over the implications of platform power on data flows — and judging by how quickly Kobeissi’s tweet turned heads — we could be on the cusp of an investor-funded scramble to retool Internet infrastructure to redefine where power (and data) lies.

It’s certainly interesting to note that Twitter recently re-upped its own push for a decentralized social media open standard, Bluesky. It obviously wouldn’t want to be left behind by any such shift.

“It seems to really have blown up,” Kobeissi adds, returning to his week-old Capsule concept. “I thought when I tweeted that I was maybe the only person who cared. I guess I live in France so I’m not really in tune with what’s going on in the US a lot — but a lot of people care.”

“I am not like a cypherpunk-style person these days, I’m not for full anonymity or full unaccountability online by any stretch,” he adds. “And if this is abused then sincerely it might even be the case that we would encourage — have a guidelines page — for hosting providers like on how to deal with instances of someone hosting an abusive Capsule instance. We do want that accountability to exist. We are not like a full on, crazy town ‘free speech’ wild west thing. We just think that that accountability has to be organic and decentralized — just as originally intended with the Internet.”