Varada, a Tel Aviv-based startup that focuses on making it easier for businesses to query data across services, today announced that it has raised a $12 million Series A round led by Israeli early-stage fund MizMaa Ventures, with participation by Gefen Capital.

“If you look at the storage aspect for big data, there’s always innovation, but we can put a lot of data in one place,” Varada CEO and co-founder Eran Vanounou told me. “But translating data into insight? It’s so hard. It’s costly. It’s slow. It’s complicated.”

That’s a lesson he learned during his time as CTO of LivePerson, which he described as a classic big data company. And just like at LivePerson, where the team had to reinvent the wheel to solve its data problems, again and again, every company — and not just the large enterprises — now struggles with managing their data and getting insights out of it, Vanounou argued.

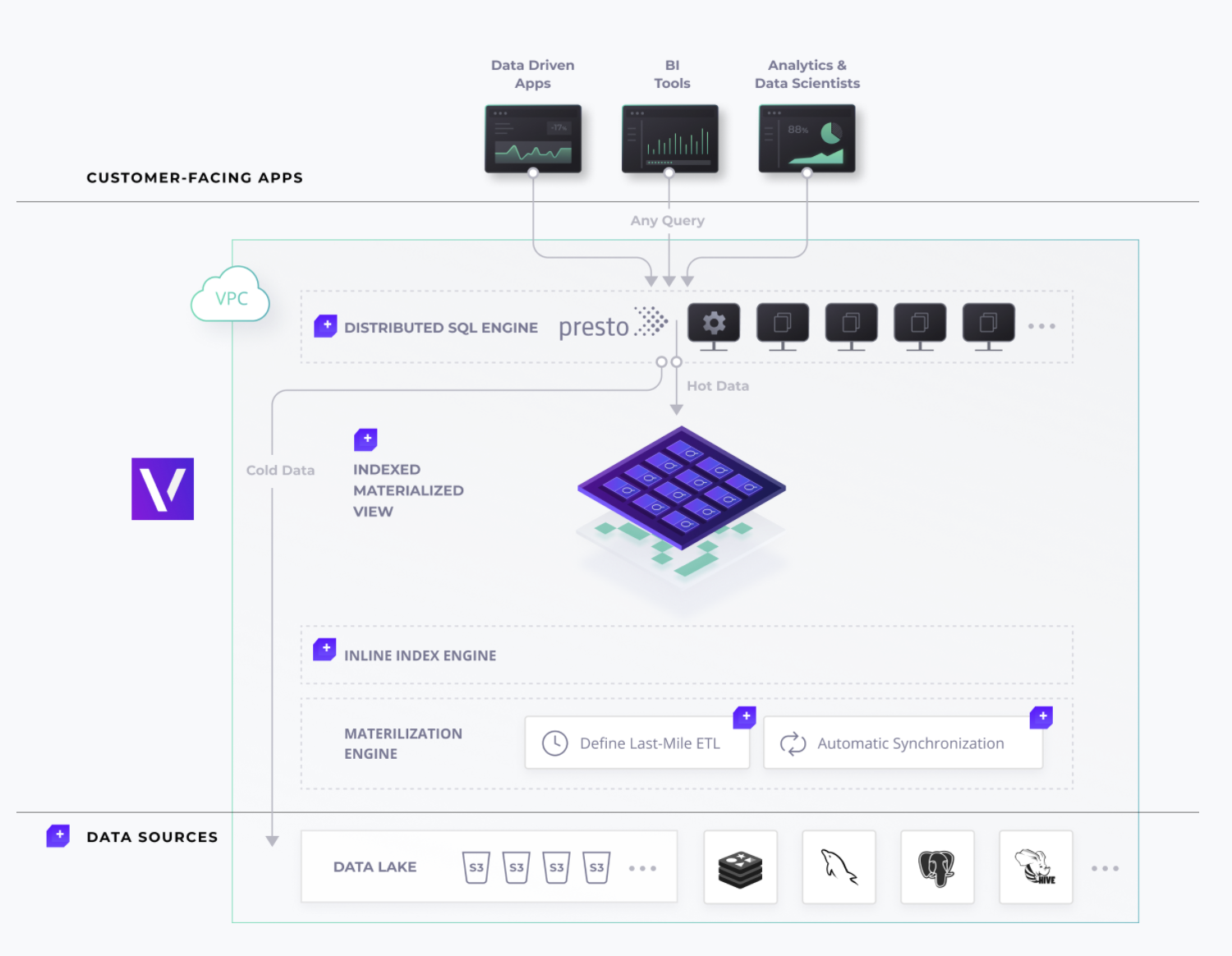

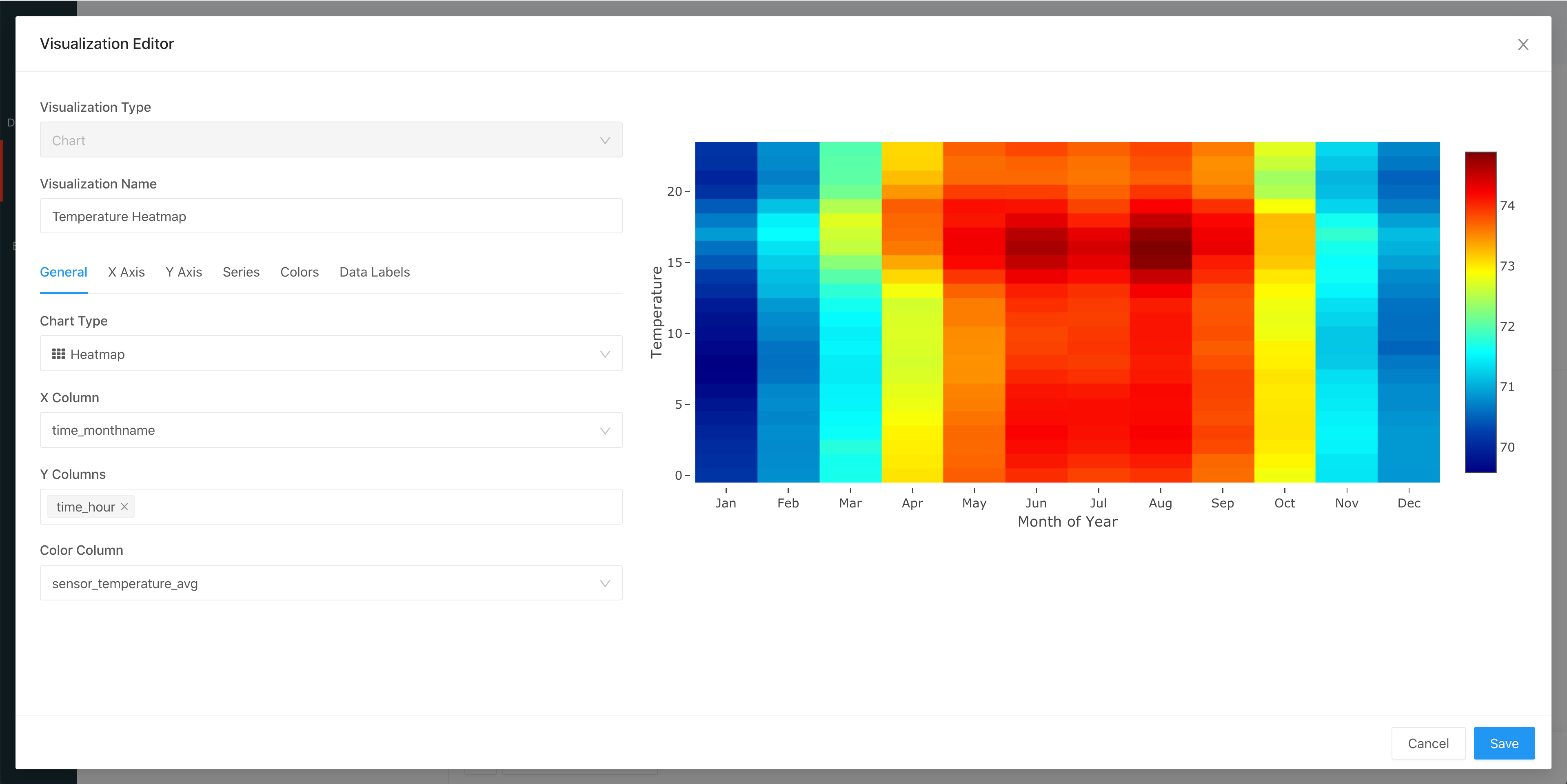

Image Credits: Varada

The rest of the founding team, David Krakov, Roman Vainbrand and Tal Ben-Moshe, already had a lot of experience in dealing with these problems, too, with Ben-Moshe having served as the Chief Software Architect of Dell EMC’s XtremIO flash array unit, for example. They built the system for indexing big data that’s at the core of Varada’s platform (with the open-source Presto SQL query engine being one of the other cornerstones).

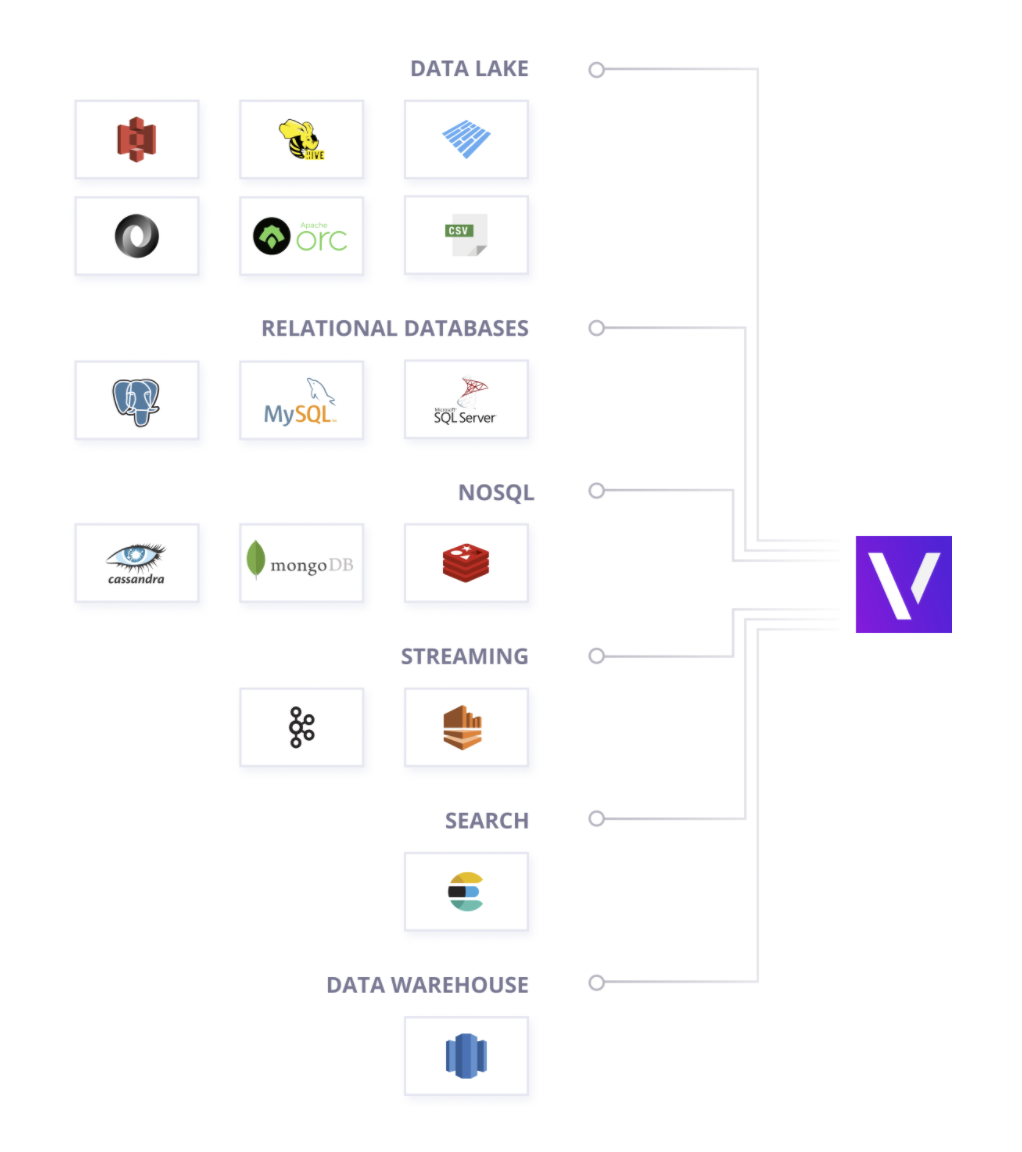

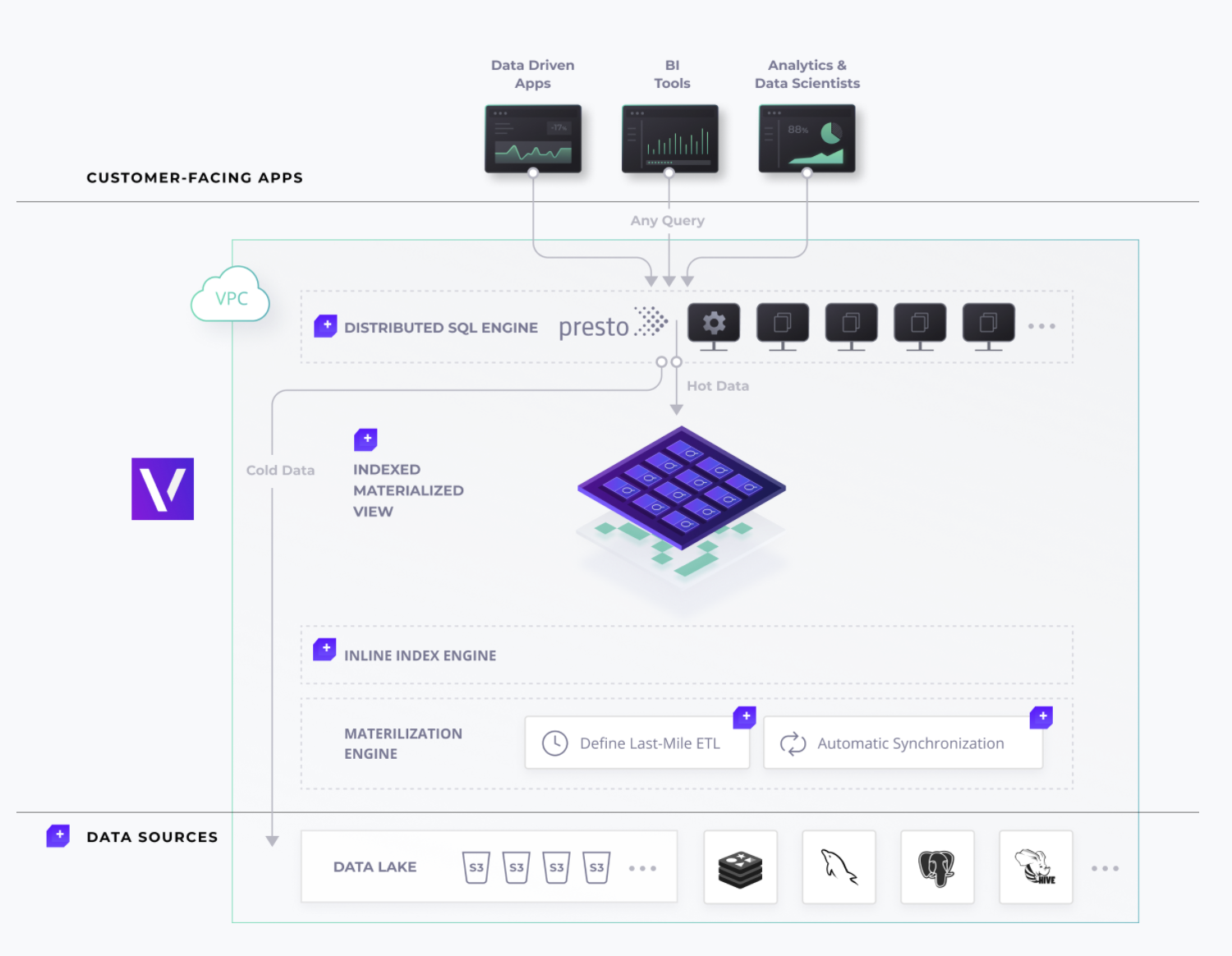

Image Credits: Varada

Essentially, Varada embraces the idea of data lakes and enriches that with its indexing capabilities. And those indexing capabilities are where Varada’s smarts can be found. As Vanounou explained, the company is using a machine learning system to understand when users tend to run certain workloads and then caches the data ahead of time, making the system far faster than its competitors.

“If you think about big organizations and think about the workloads and the queries, what happens during the morning time is different from evening time. What happened yesterday is not what happened today. What happened on a rainy day is not what happened on a shiny day. […] We listen to what’s going on and we optimize. We leverage the indexing technology. We index what is needed when it is needed.”

That helps speed up queries, but it also means less data has to be replicated, which brings down the cost. As MizMaa’s Aaron Applebaum noted, since Varada is not a SaaS solution, the buyers still get all of the discounts from their cloud providers, too.

In addition, the system can allocate resources intelligently so that different users can tap into different amounts of bandwidth. You can tell it to give customers more bandwidth than your financial analysts, for example.

“Data is growing like crazy: in volume, in scale, in complexity, in who requires it and what the business intelligence uses are, what the API uses are,” Applebaum said when I asked him why he decided to invest. “And compute is getting slightly cheaper, but not really, and storage is getting cheaper. So if you can make the trade-off to store more stuff, and access things more intelligently, more quickly, more agile — that was the basis of our thesis, as long as you can do it without compromising performance.”

Varada, with its team of experienced executives, architects and engineers, ticked a lot of MizMaa’s boxes in this regard, but Applebaum also noted that unlike some other Israeli startups, the team understood that it had to listen to customers and understand their needs, too.

“In Israel, you have a history — and it’s become less and less the case — but historically, there’s a joke that it’s ‘ready, fire, aim.’ You build a technology, you’ve got this beautiful thing and you’re like, ‘alright, we did it,’ but without listening to the needs of the customer,” he explained.

The Varada team is not afraid to compare itself to Snowflake, which at least at first glance seems to make similar promises. Vanounou praised the company for opening up the data warehousing market and proving that people are willing to pay for good analytics. But he argues that Varada’s approach is fundamentally different.

“We embrace the data lake. So if you are Mr. Customer, your data is your data. We’re not going to take it, move it, copy it. This is your single source of truth,” he said. And in addition, the data can stay in the company’s virtual private cloud. He also argues that Varada isn’t so much focused on the business users but the technologists inside a company.


