Who will be liable for harmful speech generated by large language models? As advanced AIs such as OpenAI’s GPT-3 are being cheered for impressive breakthroughs in natural language processing and generation — and all sorts of (productive) applications for the tech are envisaged from slicker copywriting to more capable customer service chatbots — the risks of such powerful text-generating tools inadvertently automating abuse and spreading smears can’t be ignored. Nor can the risk of bad actors intentionally weaponizing the tech to spread chaos, scale harm and watch the world burn.

Indeed, OpenAI is concerned enough about the risks of its models going “totally off the rails,” as its documentation puts it at one point (in reference to a response example in which an abusive customer input is met with a very troll-esque AI reply), to offer a free content filter that “aims to detect generated text that could be sensitive or unsafe coming from the API” — and to recommend that users don’t return any generated text that the filter deems “unsafe.” (To be clear, its documentation defines “unsafe” to mean “the text contains profane language, prejudiced or hateful language, something that could be NSFW or text that portrays certain groups/people in a harmful manner.”).

But, given the novel nature of the technology, there are no clear legal requirements that content filters must be applied. So OpenAI is acting either out of concern to avoid its models causing generative harms to people, out of reputational concern, or both, because if the technology gets associated with instant toxicity that could derail development.

Just recall Microsoft’s ill-fated Tay AI Twitter chatbot — which launched back in March 2016 to plenty of fanfare, with the company’s research team calling it an experiment in “conversational understanding.” Yet it took less than a day to have its plug yanked by Microsoft after web users ‘taught’ the bot to spout racist, antisemitic and misogynistic hate tropes. So it ended up a different kind of experiment: In how online culture can conduct and amplify the worst impulses humans can have.

The same sorts of bottom-feeding internet content have been sucked into today’s large language models, because AI model builders have crawled all over the internet to obtain the massive corpuses of free text they need to train and dial up their language generating capabilities. (For example, per Wikipedia, 60% of the weighted pre-training dataset for OpenAI’s GPT-3 came from a filtered version of Common Crawl, a free dataset comprised of scraped web data.) Which means these far more powerful large language models can, nonetheless, slip into sarcastic trolling and worse.

European policymakers are barely grappling with how to regulate online harms in current contexts like algorithmically sorted social media platforms, where most of the speech can at least be traced back to a human — let alone considering how AI-powered text generation could supercharge the problem of online toxicity while creating novel quandaries around liability.

And without clear liability it’s likely to be harder to prevent AI systems from being used to scale linguistic harms.

Take defamation. The law is already facing challenges with responding to automatically generated content that’s simply wrong.

Security researcher Marcus Hutchins took to TikTok a few months back to show his followers how he’s being “bullied by Google’s AI,” as he put it. In a remarkably chipper clip, considering he’s explaining a Kafka-esque nightmare in which one of the world’s most valuable companies continually publishes a defamatory suggestion about him, Hutchins explains that if you google his name the search engine results page (SERP) it returns includes an automatically generated Q&A in which Google erroneously states that Hutchins made the WannaCry virus.

Hutchins is actually famous for stopping WannaCry. Yet Google’s AI has grasped the wrong end of the stick on this not-at-all-tricky-to-distinguish essential difference and, seemingly, keeps getting it wrong. Repeatedly. (Presumably because so many online articles cite Hutchins’ name in the same span of text as referencing ‘WannaCry’. But that’s because he’s the guy who stopped the global ransomware attack from being even worse than it was. So this is some real artificial stupidity in action by Google.)

To the point where Hutchins says he’s all but given up trying to get the company to stop defaming him by fixing its misfiring AI.

“The main problem that’s made this so hard is while raising enough noise on Twitter got a couple of the issues fixed, since the whole system is automated it just adds more later and it’s like playing whack-a-mole,” Hutchins told TechCrunch. “It’s got to the point where I can’t justify raising the issue anymore because I just sound like a broken record and people get annoyed.”

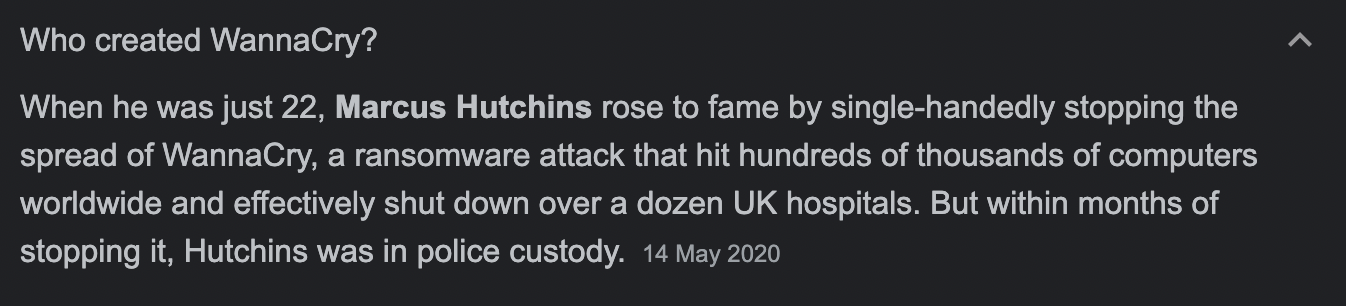

In the months since we asked Google about this erroneous SERP, the Q&A it associates with Hutchins has shifted. In April, it asked “What virus did Marcus Hutchins make?” and surfaced a one word (incorrect) answer directly below: “WannaCry,” before offering the (correct) context in a longer snippet of text sourced from a news article. Now a search for Hutchins’ name results in Google displaying the question “Who created WannaCry” (see screengrab). But it now just fails to answer its own question, as the snippet of text it displays below only talks about Hutchins stopping the spread of the virus.

Image Credits: Natasha Lomas/TechCrunch (screengrab)

So Google has — we must assume — tweaked how the AI displays the Q&A format for this SERP. But in doing that it’s broken the format (because the question it poses is never answered).

Moreover, the misleading presentation which pairs the question “Who created WannaCry?” with a search for Hutchins’ name, could still lead a web user who quickly skims the text Google displays after the question to wrongly believe he is being named as the author of the virus. So it’s not clear it’s much/any improvement on what was being automatically generated before.

In earlier remarks to TechCrunch, Hutchins also made the point that the context of the question itself, as well as the way the result gets featured by Google, can create the misleading impression he made the virus — adding: “It’s unlikely someone googling for say a school project is going to read the whole article when they feel like the answer is right there.”

He also connects Google’s automatically generated text to direct, personal harm, telling us: “Ever since google started featuring these SERPs, I’ve gotten a huge spike in hate comments and even threats based on me creating WannaCry. The timing of my legal case gives the impression that the FBI suspected me but a quick [Google search] would confirm that’s not the case. Now there’s all kinds of SERP results which imply I did, confirming the searcher’s suspicious and it’s caused rather a lot of damage to me.”

Asked for a response to his complaint, Google sent us this statement attributed to a spokesperson:

“The queries in this feature are generated automatically and are meant to highlight other common related searches. We have systems in place to prevent incorrect or unhelpful content from appearing in this feature. Generally, our systems work well, but they do not have a perfect understanding of human language. When we become aware of content in Search features that violates our policies, we take swift action, as we did in this case.”

The tech giant did not respond to follow-up questions pointing out that its “action” keeps failing to address Hutchins’ complaint.

This is of course just one example — but it looks instructive that an individual, with a relatively large online presence and platform to amplify his complaints about Google’s ‘bullying AI,’ literally cannot stop the company from applying automation technology that keeps surfacing and repeating defamatory suggestions about him.

In his TikTok video, Hutchins suggests there’s no recourse for suing Google over the issue in the US — saying that’s “essentially because the AI is not legally a person no one is legally liable; it can’t be considered libel or slander.”

Libel law varies depending on the country where you file a complaint. And it’s possible Hutchins would have a better chance of getting a court-ordered fix for this SERP if he filed a complaint in certain European markets such as Germany, where Google has previously been sued for defamation over autocomplete search suggestions, rather than in the U.S., where Section 230 of the Communications Decency Act provides general immunity for platforms from liability for third-party content. (The outcome of that German legal action, by Bettina Wulff, is less clear, but it appears that the false autocomplete suggestions she complained Google’s tech was linking to her name did get fixed.)

Although, in the Hutchins SERP case, the question of whose content this is, exactly, is one key consideration. Google would probably argue its AI is just reflecting what others have previously published — ergo, the Q&A should be wrapped in Section 230 immunity. But it might be possible to (counter) argue that the AI’s selection and presentation of text amounts to a substantial remixing which means that speech — or, at least, context — is actually being generated by Google. So should the tech giant really enjoy protection from liability for its AI-generated textual arrangement?

For large language models, it will surely get harder for model makers to dispute that their AIs are generating speech. But individual complaints and lawsuits don’t look like a scalable fix for what could, potentially, become massively scaled automated defamation (and abuse) — thanks to the increased power of these large language models and expanding access as APIs are opened up.

Regulators are going to need to grapple with this issue — and with where liability lies for communications that are generated by AIs. Which means grappling with the complexity of apportioning liability, given how many entities may be involved in applying and iterating large language models, and shaping and distributing the outputs of these AI systems.

In the European Union, regional lawmakers are ahead of the regulatory curve as they are currently working to hash out the details of a risk-based framework the Commission proposed last year to set rules for certain applications of artificial intelligence to try to ensure that highly scalable automation technologies are applied in a way that’s safe and non-discriminatory.

But it’s not clear that the EU’s AI Act — as drafted — would offer adequate checks and balances on malicious and/or reckless applications of large language models as they are classed as general purpose AI systems that were excluded from the original Commission draft.

The Act itself sets out a framework that defines a limited set of “high risk” categories of AI application, such as employment, law enforcement, biometric ID etc, where providers have the highest level of compliance requirements. But a downstream applier of a large language model’s output — who’s likely relying on an API to pipe the capability into their particular domain use case — is unlikely to have the necessary access (to training data, etc.) to be able to understand the model’s robustness or risks it might pose; or to make changes to mitigate any problems they encounter, such as by retraining the model with different datasets.

Legal experts and civil society groups in Europe have raised concerns over this carve out for general purpose AIs. A more recent partial compromise text that’s emerged during co-legislator discussions has proposed including an article on general purpose AI systems. But, writing in Euractiv last month, two civil society groups warned the suggested compromise would create a continued carve-out for the makers of general purpose AIs by putting all the responsibility on deployers who make use of systems whose workings they’re not, by default, privy to.

“Many data governance requirements, particularly bias monitoring, detection and correction, require access to the datasets on which AI systems are trained. These datasets, however, are in the possession of the developers and not of the user, who puts the general purpose AI system ‘into service for an intended purpose.’ For users of these systems, therefore, it simply will not be possible to fulfil these data governance requirements,” they warned.

One legal expert we spoke to about this, the internet law academic Lilian Edwards — who has previously critiqued a number of limitations of the EU framework — said the proposals to introduce some requirements on providers of large, upstream general-purpose AI systems are a step forward. But she suggested enforcement looks difficult. And while she welcomed the proposal to add a requirement that providers of AI systems such as large language models must “cooperate with and provide the necessary information” to downstream deployers, per the latest compromise text, she pointed out that an exemption has also been suggested for IP rights or confidential business information/trade secrets — which risks fatally undermining the entire duty.

So, TL;DR: Even Europe’s flagship framework for regulating applications of artificial intelligence still has a way to go to latch onto the cutting edge of AI — which it must do if it’s to live up to the hype as a claimed blueprint for trustworthy, respectful, human-centric AI. Otherwise a pipeline of tech-accelerated harms looks all but inevitable — providing limitless fuel for the online culture wars (spam levels of push-button trolling, abuse, hate speech, disinformation!) — and setting up a bleak future where targeted individuals and groups are left firefighting a never-ending flow of hate and lies. Which would be the opposite of fair.

The EU has made much of the speed of its digital lawmaking in recent years but the bloc’s legislators must think outside the box of existing product rules when it comes to AI systems if they’re to put meaningful guardrails on rapidly evolving automation technologies and avoid loopholes that let major players keep sidestepping their societal responsibilities. No one should get a pass for automating harm, no matter where in the chain a ‘black box’ learning system sits, nor how large or small the user, else it’ll be us humans left holding a dark mirror.
