In addition to the new custom Arm-based Axion chips, most of this year's announcements are about AI accelerators, whether built by Google itself or from Nvidia.

© 2024 TechCrunch. All rights reserved. For personal use only.

Steve Thomas - IT Consultant

The question is whether Nvidia can sustain that growth to become a long-term revenue powerhouse like AWS has become for Amazon.

At its GTC conference, Nvidia today announced Nvidia NIM, a new software platform designed to streamline the deployment of custom and pre-trained AI models into production environments. NIM takes the software work Nvidia has done around inferencing and optimizing models and makes it easily accessible by combining a given model with an optimized inferencing engine […]

Chip giant Nvidia is hosting a massive AI conference as part of its GTC event this week, which kicks off Monday. With a keynote planned from Jensen Huang, CEO and co-founder of the company best known in years past for its gaming hardware and today for its massive market share in the burgeoning AI hardware […]

Looking back at the top enterprise news stories of the year, it's clear that generative AI dominated, but it wasn't the only news.

© 2023 TechCrunch. All rights reserved. For personal use only.

More and more companies are running large language models, which require access to GPUs. The most popular of those by far are from Nvidia, making them expensive and often in short supply. Renting a long-term instance from a cloud provider when you only need access to these costly resources for a single job doesn’t necessarily […]

When Nvidia announced eye-popping earnings on Wednesday with three-digit year-over-year growth, it was easy to get caught up in the excitement. The company brought in $13.5 billion for the quarter, up 101% over the prior year, and well over its $11 billion guidance. That’s certainly something to get excited about.
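As a rough check on those figures, the sketch below backs out the implied year-ago revenue and the size of the beat against guidance. It uses only the three numbers quoted above; the function names are illustrative, and the results are approximations because the inputs are rounded.

```python
def prior_period_revenue(current: float, growth_pct: float) -> float:
    """Back out the year-ago figure from current revenue and year-over-year growth."""
    return current / (1 + growth_pct / 100)


def beat_vs_guidance_pct(actual: float, guidance: float) -> float:
    """How far actual revenue landed above guidance, as a percentage of guidance."""
    return (actual - guidance) / guidance * 100


# Figures quoted in the article, in billions of dollars (rounded).
quarter_revenue = 13.5
yoy_growth_pct = 101.0
guidance = 11.0

implied_prior = prior_period_revenue(quarter_revenue, yoy_growth_pct)
beat_pct = beat_vs_guidance_pct(quarter_revenue, guidance)

print(f"Implied year-ago revenue: ${implied_prior:.2f}B")
print(f"Beat versus guidance: {beat_pct:.1f}%")
```

Under these inputs, the year-ago quarter works out to roughly $6.7 billion, and the result lands a little under 23% above guidance.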

Nvidia is benefiting from being a company in the right place at the right time, where its GPU chips are in high demand to run large language models and other AI-fueled workloads. That in turn is driving Nvidia’s astonishing growth this quarter. (It’s worth noting that the company set the groundwork for its current success some time ago.)

“Data center compute revenue nearly tripled year on year, driven primarily by accelerating demand for cloud from cloud service providers and large consumer internet companies for our HGX platform, the engine of generative and large language models,” Colette Kress, Nvidia’s executive vice president and chief financial officer, said in the post-earnings report call with analysts.

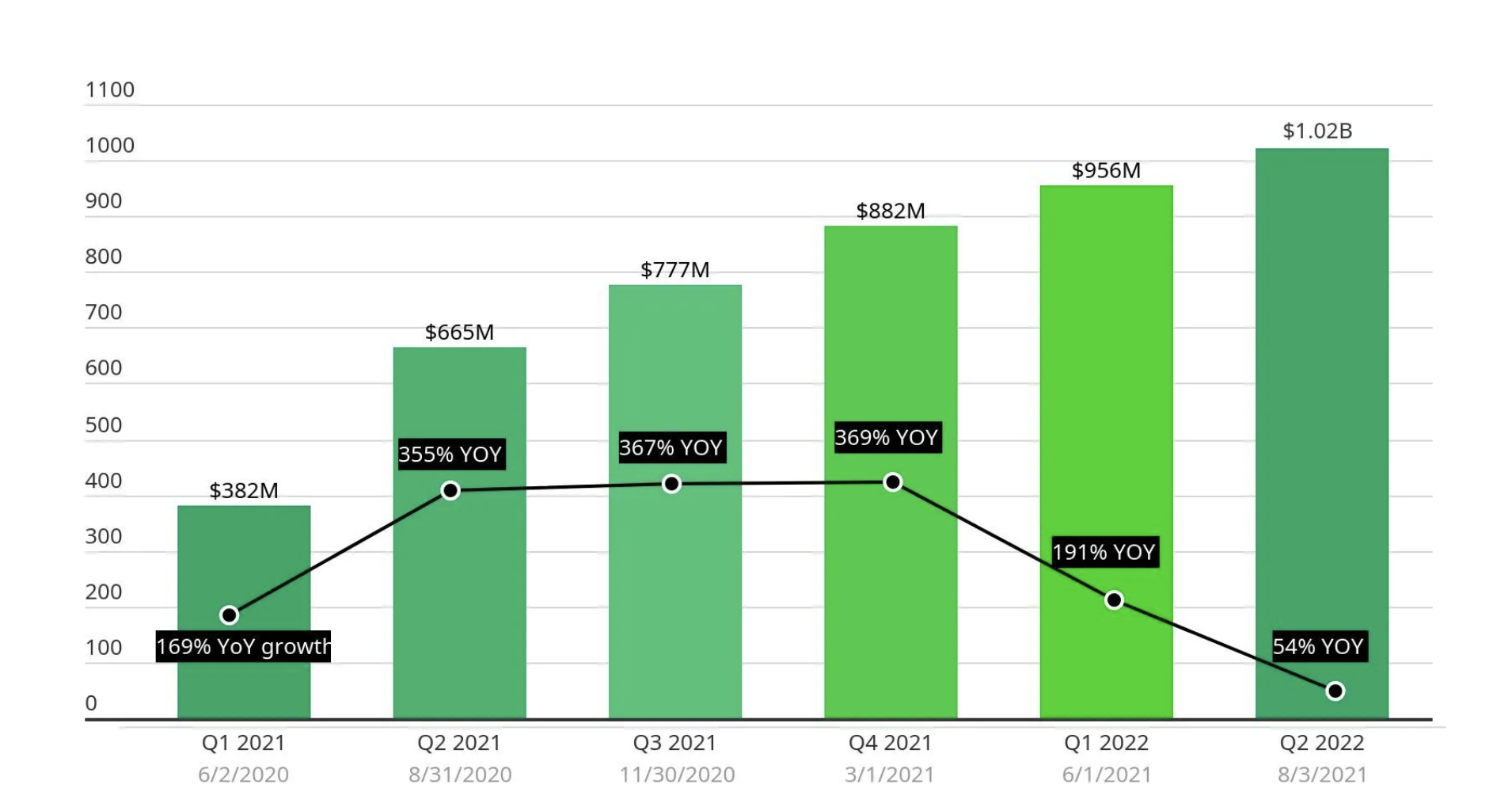

This kind of growth brings to mind the heady days of cloud stocks, some of which soared during the pandemic lockdown as companies accelerated their usage of SaaS to keep their workers connected. Zoom, in particular, took off with five quarters of absolutely astonishing growth during that time.

Zoom’s pandemic-fueled growth. Image Credits: TechCrunch

Today, even double-digit growth is long gone. For its most recent report earlier this month, the company reported revenue of $1.138 billion, up 3.6% over the prior year. That follows five straight quarters of single-digit growth, the last three in the low single digits.

Could Zoom possibly be a cautionary tale for a company like Nvidia riding the generative AI wave? And perhaps more importantly, will this drive unreasonable investor expectations about future performance as it did with Zoom?

It’s interesting to note that Nvidia’s biggest growth area is in the data center, and that web scalers are still building at a rapid pace with plans to add over 300 new data centers in the coming years, per a Synergy Research report from March 2022.

“The future looks bright for hyperscale operators, with double-digit annual growth in total revenues supported in large part by cloud revenues that will be growing in the 20-30% per year range. This in turn will drive strong growth in capex generally and in data center spending specifically,” said John Dinsdale, a chief analyst at Synergy Research Group, in a statement about the report.

At least some percentage of this spending will surely be devoted to resources for running AI workloads, and Nvidia should benefit from that, CEO Jensen Huang told analysts on Wednesday. In fact, he believes that his company’s expansive growth is much more than a flash in the pan.

“There’s about $1 trillion worth of data centers, call it, a quarter of trillion dollars of capital spend each year. You’re seeing that data centers around the world are taking that capital spend and focusing it on the two most important trends of computing today: accelerated computing and generative AI,” Huang said. “And so I think this is not a near-term thing. This is a long-term industry transition, and we’re seeing these two platform shifts happening at the same time.”

If he’s right, perhaps the company can sustain this level of growth, but history suggests that what goes up must eventually come down.

If Zoom is any indication, some businesses that see rapid growth for one reason or another can hold onto that revenue in the future. While it’s certainly less exciting for investors that Zoom’s growth rate has sharply moderated in recent quarters, it’s also true that Zoom has continued to grow. That means it has retained all its prior scale and then some.

Today, Apple saw its market cap pass the $3 trillion threshold. The iPhone maker has reached that landmark before but has never managed to hang on to it through the end of a trading day.

But this morning, with its shares up about 1.4% and a significant $20 billion to $30 billion above the milestone, it seems the company is on pace to finally pull it off.

Less than five years ago, the ‘Big Five,’ which comprised Apple and four other tech companies, was worth a combined $3 trillion. It’s striking how much of a difference a few years can make.

The Exchange explores startups, markets and money.

Read it every morning on TechCrunch+ or get The Exchange newsletter every Saturday.

Flying somewhat underneath the radar in tech and startup-land is just how far technology stocks have rebounded this year. As CNBC wrote this morning, the Nasdaq’s performance in the first half of 2023 could “be the strongest for the index since 1983.” For startups, the rising value of tech stocks is slowly lifting revenue multiples, which reduces the pressure on future fundraising because their public market comparable companies are now worth more.

Apple has certainly benefited from this recent recovery. Its shares had risen a little more than 45.5% so far this year as of Thursday’s close.

While Apple’s ascent to this milestone is notable, there’s been a greater reshuffling in the ranks of the biggest tech stocks. It’s time to update our acronyms and understand what the required changes tell us about the state of the world.

The tech industry is too broad to discuss collectively. This is doubly true today as previously tech-forward methods of doing business, like e-commerce and mobile, have become the norm, expanding the list of ‘tech’ companies to ludicrous breadth.

As Apple reaches $3T, it’s time to shake up the Big Tech club by Alex Wilhelm originally published on TechCrunch

Snowflake has always been about storing large amounts of unstructured data in the cloud. With two recent acquisitions, Neeva and Streamlit, it will make it easier to search and build applications on top of the data. Today, the company announced a new container service and a partnership with Nvidia to make it easier to build generative AI applications making use of all that data and running them on Nvidia GPUs.

Snowflake senior VP of products Christian Kleinerman says the goal is to let people make use of the data without having to copy and move it to an external application. “We want to enable our customers to bring computation to their enterprise data, and not have to be shipping their enterprise data to all sorts of external systems,” Kleinerman told TechCrunch.

The company is introducing Snowpark Container Services along with the ability to run containerized applications on top of Nvidia GPUs, all without moving any data outside of Snowflake.

“We’re providing the ability for both customers and partners to run Docker containers inside the security perimeter of Snowflake, giving them controlled access to the enterprise data that lives in Snowflake,” Kleinerman said.

“And the way we’re surfacing this container services is by providing broader instance flexibility through what Snowflake has provided traditionally, and obviously the single biggest vector of flexibility that we’ve been getting requests for is access to GPUs,” he said, which is where the Nvidia partnership comes into play.

Nvidia VP of enterprise computing Manuvir Das says that he sees Snowflake as a place where companies store their key data. When you can build applications on top of that data and then run those applications on Nvidia GPUs, it becomes a very powerful combination, especially when you bring generative AI into the equation.

He says that when you combine Nvidia’s GPU power along with its NeMo framework, companies can take the data in Snowflake and begin to build refined machine learning models based on their own unique data.

“That’s why this partnership is beautiful, because Snowflake has all that data, and now for the first time has the execution engine to run different pieces of software with that data. We’ve got that execution agent in NeMo that Nvidia has built for training, for fine tuning, for reinforcement learning and all of that,” Das said.

“And so the integration we’re doing together is we’re taking that agent and we’re bringing it to Snowflake’s platform and integrating it in so that all Snowflake customers who have the data now have sort of a first class capability from the Snowflake platform that they can do their model making on using the data, which produces these custom models for them,” he said.

He says that having the customer data, the models created from that data and the applications that access those models all in one place will make it easier to secure and govern that data, and the Nvidia technology makes it all run faster.

Snowflake’s Snowpark Container Services is available in private beta starting today.

Snowflake-Nvidia partnership could make it easier to build generative AI applications by Ron Miller originally published on TechCrunch

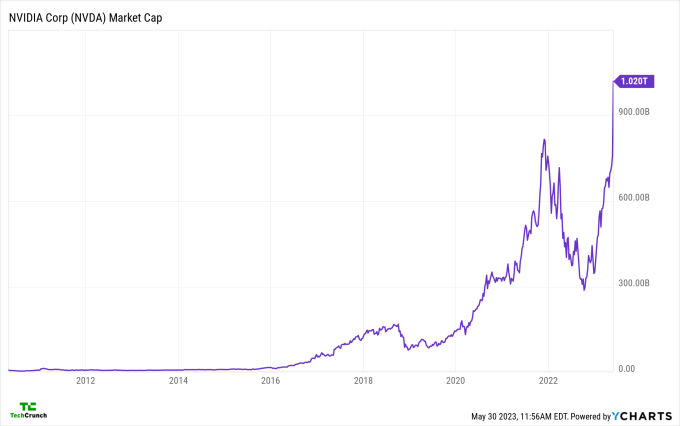

Welcome to the $1 trillion club, Nvidia.

Nvidia’s stock is up more than 6% today, pushing its shares past $413 in the wake of a widely-praised earnings report.

Given that the stock market has battered tech stocks in recent quarters, how is Nvidia breaking away from the pack? What can we learn from its rise?

The club of trillion-dollar companies, measured by market capitalization, is so small that even Meta doesn’t make the cut today. Tesla is only 63% of the way there and Salesforce is not even worth a quarter of the required value it needs to join. To see Nvidia reach a market value of more than $1,000,000,000,000 is therefore a massive endorsement not only of its trailing operating results, but also its anticipated future. Investors expect a lot from Nvidia.

The following chart shows its ascent:

Image Credits: Ycharts

To get our minds around what’s going on, let’s go through the company’s latest earnings report, consider what it is forecasting, and discuss how investors and analysts missed the mark with their own expectations.

Nvidia is not an enterprise software company, so when we look at its results, we do not see what SaaS companies tend to produce: consistent revenue growth coupled with incremental profitability gains.

Instead, in the first quarter of fiscal 2024 ended April 30, Nvidia posted the following:

Welcome to the trillion-dollar club, Nvidia by Alex Wilhelm originally published on TechCrunch

Jensen Huang wants to bring generative AI to every data center, the Nvidia co-founder and CEO said during Computex in Taipei today. During the keynote, which Huang said was his first public speech in almost four years, he made a slew of announcements, including chip release dates, the DGX GH200 supercomputer and partnerships with major companies. Here’s all the news from the two-hour-long keynote.

2. Huang announced the Nvidia Avatar Cloud Engine (ACE) for Games, a customizable AI model foundry service with pre-trained models for game developers. It will give NPCs more character through AI-powered language interactions.

3. Nvidia’s CUDA computing model now serves four million developers and more than 3,000 applications. CUDA has seen 40 million downloads, including 25 million in the last year alone.

4. Full volume production of GPU server HGX H100 has begun and is being manufactured by “companies all over Taiwan,” Huang said. He added it is the world’s first computer that has a transformer engine in it.

5. Huang referred to Nvidia’s 2019 acquisition of supercomputer chipmaker Mellanox for $6.9 billion as “one of the greatest strategic decisions” it has ever made.

6. Production of the next generation of Hopper GPUs will start in August 2024, exactly two years after the first generation started manufacture.

7. Nvidia’s GH200 Grace Hopper is now in full production. The superchip boasts 4 petaflops of Transformer Engine (TE) performance, 72 Arm CPU cores connected by a chip-to-chip link, 96GB of HBM3 and 576GB of GPU memory. Huang described it as the world’s first accelerated computing processor that also has a giant memory: “this is a computer, not a chip.” It is designed for high-resilience data center applications.

8. If the Grace Hopper’s memory is not enough, Nvidia has the solution: the DGX GH200. It’s made by first connecting eight Grace Hoppers together with three NVLink switches, then connecting the pods together at 900GB per second. Finally, 32 pods are joined together, with another layer of switches, to connect a total of 256 Grace Hopper chips. The resulting exaflops-class Transformer Engine system has 144TB of GPU memory and functions as a giant GPU. Huang said the Grace Hopper is so fast it can run the 5G stack in software. Google Cloud, Meta and Microsoft will be the first companies to have access to the DGX GH200 and will perform research into its capabilities.

9. Nvidia and SoftBank have entered into a partnership to introduce the Grace Hopper superchip into SoftBank’s new distributed data centers in Japan. They will be able to host generative AI and wireless applications in a multi-tenant common server platform, reducing costs and energy.

10. The SoftBank-Nvidia partnership will be based on Nvidia MGX reference architecture, which is currently being used in partnership with companies in Taiwan. It gives system manufacturers a modular reference architecture to help them build more than 100 server variations for AI, accelerated computing and omniverse uses. Companies in the partnership include ASRock Rack, Asus, Gigabyte, Pegatron, QCT and Supermicro.

11. Huang announced the Spectrum-X accelerated networking platform to increase the speed of Ethernet-based clouds. It includes the Spectrum-4 switch, which has 128 ports of 400Gb per second and a total throughput of 51.2Tb per second. The switch is designed to enable a new type of Ethernet, Huang said, and was designed end-to-end to do adaptive routing, isolate performance and do in-fabric computing. It also includes the BlueField-3 SmartNIC, which connects to the Spectrum-4 switch to perform congestion control.

12. WPP, the largest ad agency in the world, has partnered with Nvidia to develop a content engine based on Nvidia Omniverse. It will be capable of producing photos and video content to be used in advertising.

13. Robot platform Nvidia Isaac AMR is now available for anyone who wants to build robots, and is full-stack, from chips to sensors. Isaac AMR starts with a chip called Nova Orin and is the first robotics full-reference stack, said Huang.
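The hardware figures in items 7, 8 and 11 can be cross-checked with a few lines of arithmetic. This is a minimal sketch, assuming the 576 figure for Grace Hopper memory is in gigabytes, that terabytes are counted in binary units (1TB = 1,024GB), and that the Spectrum switch's per-port speed is 400 gigabits per second; the variable names are illustrative.

```python
# DGX GH200 topology as described in the keynote list.
SUPERCHIPS_PER_POD = 8        # eight Grace Hoppers per pod
PODS = 32                     # 32 pods joined by a second switch layer
MEMORY_PER_SUPERCHIP_GB = 576 # assumption: the quoted 576 is in gigabytes

total_superchips = SUPERCHIPS_PER_POD * PODS
total_memory_gb = total_superchips * MEMORY_PER_SUPERCHIP_GB
total_memory_tb = total_memory_gb / 1024  # assumption: binary terabytes

# Spectrum switch aggregate bandwidth.
PORTS = 128
PORT_SPEED_GBIT = 400         # assumption: gigabits per second per port
switch_tbit = PORTS * PORT_SPEED_GBIT / 1000

print(f"Grace Hopper chips: {total_superchips}")
print(f"Shared GPU memory: {total_memory_tb:.0f} TB")
print(f"Switch throughput: {switch_tbit:.1f} Tb/s")
```

Under those assumptions the numbers line up with the keynote: 256 chips, 144TB of shared memory and 51.2 terabits per second of switch throughput.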

Thanks in large part to its importance in AI computing, Nvidia’s stock has soared over the past year, and it currently has a market valuation of about $960 billion, making it one of the most valuable companies in the world (only Apple, Microsoft, Saudi Aramco, Alphabet and Amazon are ranked higher).

All the Nvidia news announced by Jensen Huang at Computex by Catherine Shu originally published on TechCrunch
