During a gold rush, Silicon Valley’s line is to always invest in picks and shovels instead of mining. Sometimes it pays just to do both.

TechCrunch has learned through a company fundraise overview that Beijing-based mining equipment seller Bitmain hit a quarterly revenue of approximately $2 billion in Q1 of this year. Despite a slump in bitcoin prices since the beginning of the year, the company is on track to become the first blockchain-focused company to achieve $10 billion in annual revenue, assuming that the cryptocurrency market doesn’t drop further.

Fortune has previously reported that the company had $1.1 billion in profits in the same quarter, a number in line with these revenue numbers, given a net margin of around 50%.

That growth is extraordinary. From the same source seen by TechCrunch, Bitmain’s revenues last year were $2.5 billion, and around $300 million just the year before that. The company reportedly raised a major venture round of $300-400 million from investors including Sequoia China, at a valuation of $12 billion.

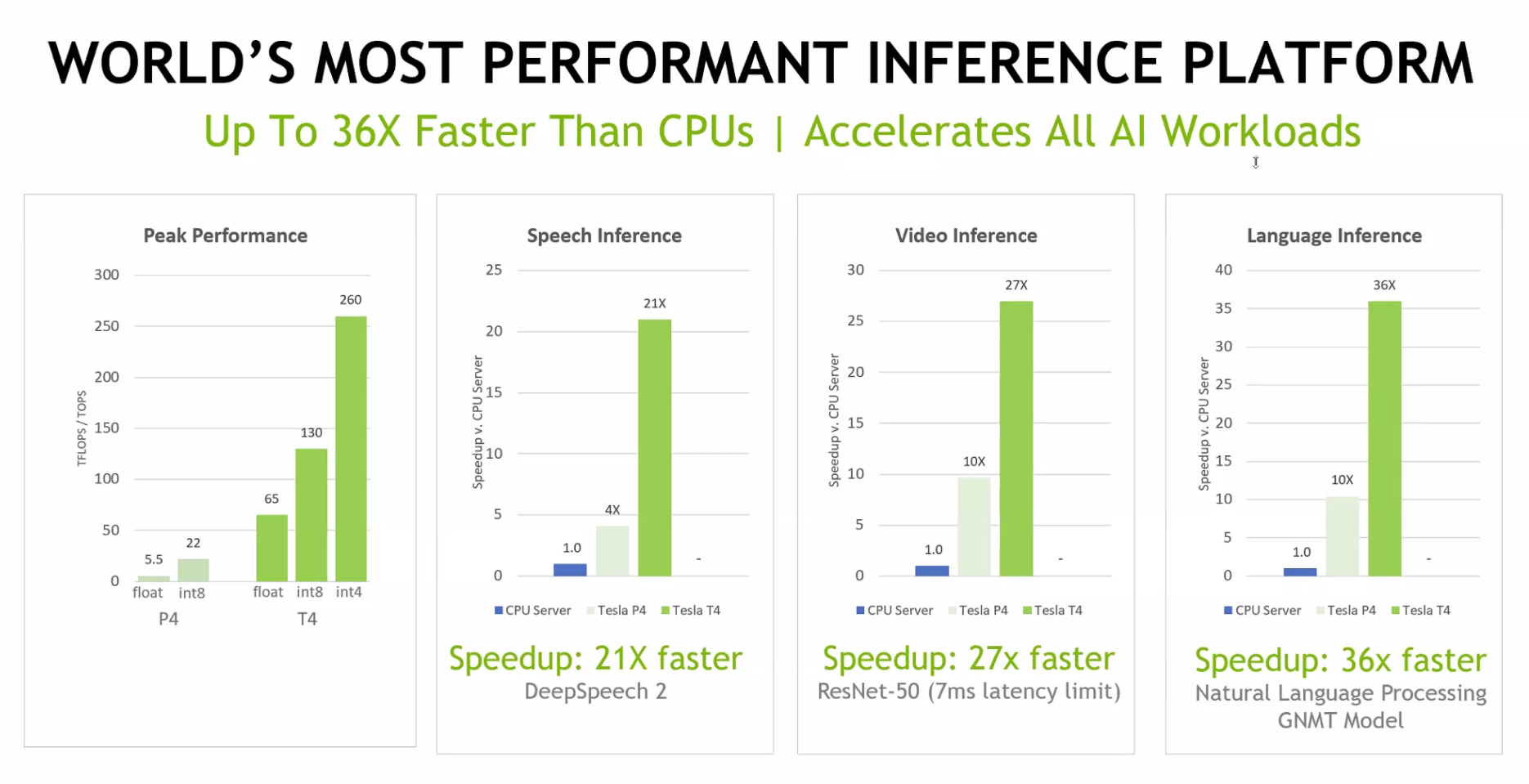

For comparison, popular cryptocurrency wallet Coinbase made $1 billion in revenue in 2017. In addition, Nvidia, a company based out of California that also makes computer chips, generated revenues of $9.7 billion in its 2018 fiscal year (2017 calendar year). Nvidia’s revenues were $3.21 billion in Q1 fiscal year 2019 (Feb-April 2018), and historical revenue figures show a general seasonal uptrend in revenue from Q1 through Q4.

The same overview also shows that Bitmain is exploring an IPO at a valuation of $40 billion to $50 billion. That would represent a significant uptick from its most recent valuation, and is almost certainly dependent on the vitality of the broader blockchain ecosystem.

Several of Bitmain’s competitors have filed for IPOs since the beginning of 2018, but most of them are significantly smaller. For example, Hong Kong-based company Canaan Creative filed for an IPO in May; at last report, it was aiming to raise $1 billion to $2 billion, on 2017 revenue of $204 million.

When contacted for this story, Bitmain declined to comment on the specific numbers TechCrunch has acquired.

A Brief Overview of Bitmain

Bitmain is the world’s dominant producer of cryptocurrency mining chips known as ASICs, or application-specific integrated circuits. It was founded by Jihan Wu and Micree Zhang in 2013, and the company is currently headquartered in Beijing.

As the story goes, back in 2011, when Wu read Satoshi Nakamoto’s whitepaper on Bitcoin, he emptied his bank account to buy bitcoins. Back then, one bitcoin could be purchased for under a dollar. By 2013, Wu and Zhang had decided to build an ASIC chip specifically for bitcoin mining, and they founded Bitmain. Wu was just 28 at the time.

Cryptocurrency mining is the process of checking and adding new transactions to bitcoin’s immutable ledger, called the blockchain. The blockchain is formed by digital blocks, where transactions are recorded. The act of mining is essentially using math to solve for a cryptographic hash, or a unique signature if you will, to identify new blocks.
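To make the idea of "solving for a hash" concrete, here is a minimal toy sketch in Python. It is not Bitcoin's actual algorithm: real mining applies SHA-256 twice to a binary block header and compares the result against a numeric target. The function name `mine_block`, the sample transaction string and the `difficulty` parameter are all illustrative assumptions.

```python
import hashlib
from typing import Tuple


def mine_block(block_data: str, difficulty: int = 4) -> Tuple[int, str]:
    """Toy proof of work: find a nonce whose SHA-256 hex digest of
    block_data + nonce starts with `difficulty` zeros.
    Simplified illustration only, not Bitcoin's real header format."""
    target_prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(target_prefix):
            return nonce, digest
        nonce += 1  # no shortcut exists; keep guessing


if __name__ == "__main__":
    # Hypothetical block contents, used only for the demonstration.
    nonce, digest = mine_block("alice pays bob 1 BTC", difficulty=4)
    print(f"nonce={nonce} hash={digest}")
```

Each extra leading zero makes the search about 16 times longer on average, which is why specialized chips that do nothing but hash have an edge over general-purpose hardware.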

The general mining process requires massive processing power and incurs hefty energy costs. In exchange for those expenses, miners are rewarded with a number of bitcoins for each block they add onto the blockchain. Currently, in the case of Bitcoin, the reward for every block discovered is 12.5 bitcoins. At the current average trailing bitcoin price of approximately $6,500, that’s $81,250 up for grabs every 10 minutes, or $11.7 million a day.
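The reward arithmetic can be checked in a few lines of Python. The inputs are the figures quoted above; the variable names are ours, and the calculation assumes a block is found exactly every 10 minutes and ignores transaction fees.

```python
# Figures quoted in the article.
BLOCK_REWARD_BTC = 12.5      # bitcoins paid per block
BTC_PRICE_USD = 6_500        # approximate trailing average price
BLOCK_INTERVAL_MIN = 10      # assumed average time between blocks

reward_per_block_usd = BLOCK_REWARD_BTC * BTC_PRICE_USD
blocks_per_day = 24 * 60 // BLOCK_INTERVAL_MIN
reward_per_day_usd = reward_per_block_usd * blocks_per_day

print(f"Per block: ${reward_per_block_usd:,.0f}")   # $81,250
print(f"Blocks per day: {blocks_per_day}")          # 144
print(f"Per day: ${reward_per_day_usd:,.0f}")       # $11,700,000
```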

Bitmain has several business segments. The first and primary one is selling mining machines outfitted with Bitmain’s chips, which usually cost a few hundred to a few thousand dollars each. For example, the latest Antminer S9 model is listed at $3,319. Second, you can rent Bitmain’s mining machines to mine cryptocurrencies.

Third, you can mine bitcoin as part of one of Bitmain’s mining pools. A mining pool is a group of cryptocurrency miners who combine their computational resources over a network. Bitmain’s two mining pools, AntPool and BTC.com, collectively control more than 38 percent of the world’s Bitcoin mining power at the moment, according to BTC.com.

The Future of Bitmain Is Closely Tied to the Crypto Market

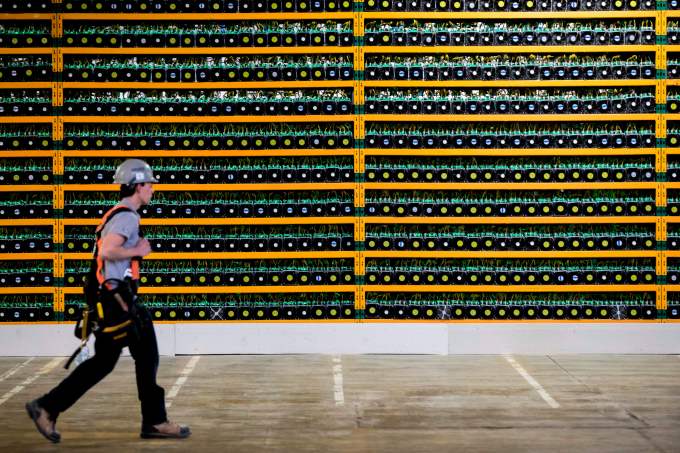

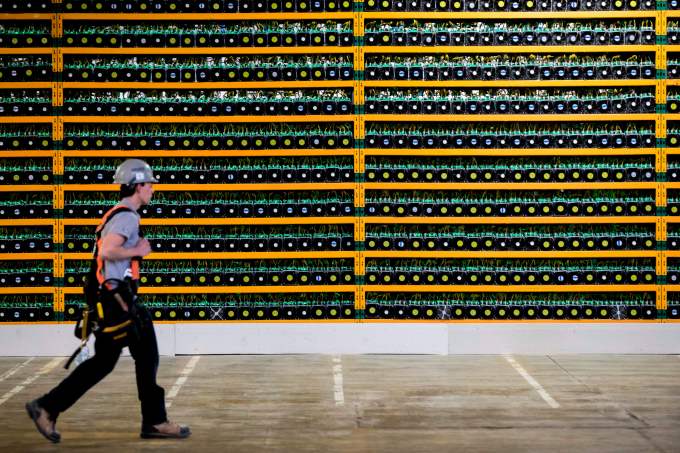

Bitcoin mining is a massive business with influence over energy prices across the world. (LARS HAGBERG/AFP/Getty Images)

Despite its rapid rise to success, Bitmain is ultimately dependent on the price of cryptocurrencies and overall crypto market fluctuations. In a bull crypto market, investors are willing to give the company a higher valuation multiple than in a bear market. In a bear market, margins shrink for both the company and its customers, as the economics of mining cryptocurrencies are no longer as compelling. In 2014, for example, Mt. Gox, a famous Bitcoin exchange at the time, was hacked, spurring a crash in cryptocurrency prices.

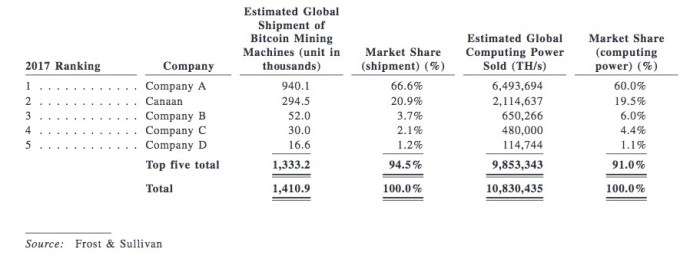

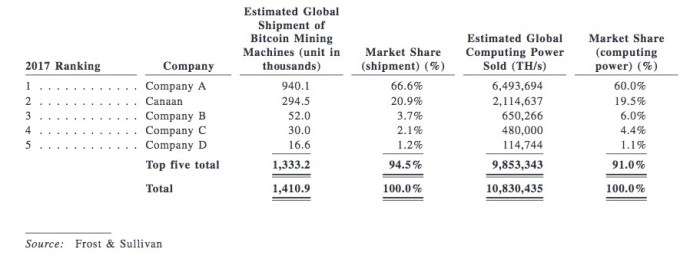

Subsequently, Bitmain went through a bitcoin drought as Bitcoin prices hit low points, and its ASIC chips did not see much demand. It was not appealing to miners to pay expensive electricity bills to mine a digital currency that was falling in value. But fast forward to now, and we have gone through several bull and bear crypto market cycles. According to Frost & Sullivan, in 2017 Bitmain held an estimated 67% share of the bitcoin mining hardware market and generated 60% of computing power.

Canaan Creative IPO filing. Company A is Bitmain.

One of the fundamental challenges facing any cryptocurrency mining manufacturer such as Bitmain is that the valuation of the company is largely based on the price of cryptocurrencies. The market in the first half of 2018 has shown that no one really knows when bitcoin prices and the cryptocurrency market will start picking up again. Additionally, according to Frost & Sullivan, the ASIC-based blockchain hardware market, which is the market segment that includes Bitmain and Canaan, will see its compound annual growth rate (CAGR) slow to around 57.7% between 2017 and 2020, down from 247.6% between 2013 and 2017.
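For a sense of scale, a compound annual growth rate can be turned into a total growth multiple with (1 + rate) raised to the number of years. The short Python sketch below applies that to the two Frost & Sullivan rates; the helper name `growth_multiple` is ours, and treating the periods as exactly four and three years is an assumption.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Total growth factor implied by compounding `cagr` for `years` years."""
    return (1 + cagr) ** years


# Rates quoted from Frost & Sullivan; period lengths are assumed.
past = growth_multiple(2.476, years=4)     # 2013 to 2017 at 247.6%
future = growth_multiple(0.577, years=3)   # 2017 to 2020 at 57.7%

print(f"2013-2017: market grew roughly {past:.1f}x")
print(f"2017-2020: market projected to grow roughly {future:.1f}x")
```

Under those assumptions, the market expanded by a factor of about 146 over the first period and is projected to grow a bit under fourfold over the second, which is still fast but far from the earlier pace.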

Nonetheless, it seems that Bitmain has planned well ahead to prepare for these macro risks and exposures. The company has raised significant private funding and has been expanding its business into mining new coins and creating new chips outside of cryptocurrency applications.

First, with its existing mining rigs, Bitmain can essentially broaden into all SHA256-related coins. Coins such as Bitcoin, Bitcoin Cash and Litecoin can all be mined on Bitmain’s equipment. The limitation here is largely how fast the company can build more mining equipment and mining centers. The company has broadened its geographic reach by developing new mining centers. Most recently, Bitmain revealed that it will build a $500 million blockchain data center and mining facility in Texas as part of its expansion into the U.S. market, aiming for operations to begin by early 2019.

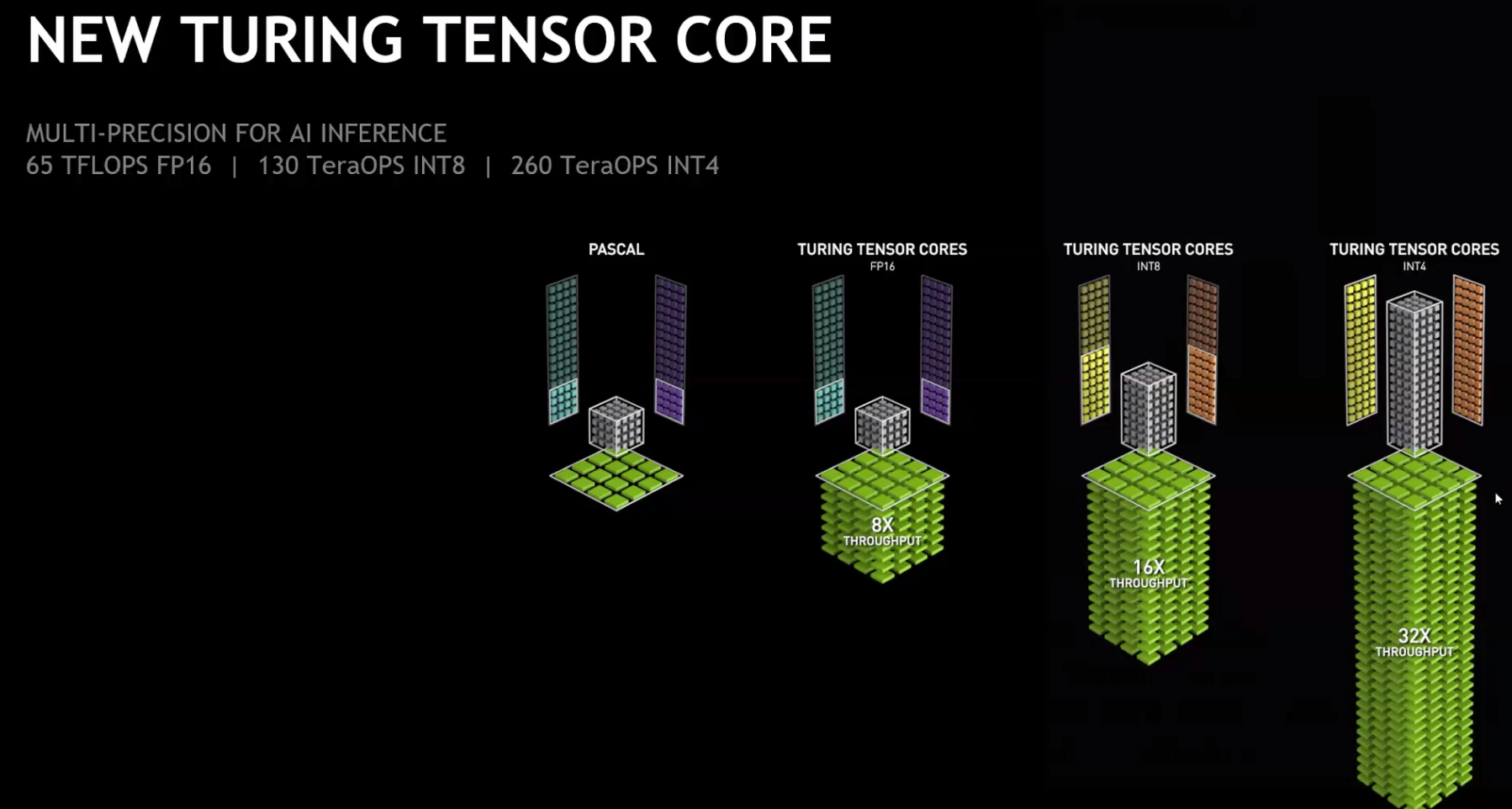

Second, Bitmain is also looking to launch its own AI chips by the end of 2018. Interestingly, the AI chips are called Sophons, named after the key alien technology in Liu Cixin’s famous trilogy, The Three-Body Problem. If things go as planned, Bitmain’s Sophon units could be training neural networks in data centers around the world. Bitmain’s CEO Wu once said that in five years, 40% of revenues could come from AI chips.

Lastly, Bitmain has been equipping itself with cash. Lots of it, from some of the largest investors in Asia. Two months ago, China Money Network reported that Bitmain raised a $400 million Series B round led by Sequoia Capital China, DST, GIC and Coatue, valuing the company at $12 billion. Just last week, Chinese tech conglomerate Tencent and Japan’s SoftBank, another tech giant whose 15% stake in Uber makes it the ride-hailing app’s largest shareholder, also joined the investor base.

For Bitmain, there are many reasons to stay private, including keeping its quarterly financials out of public view and insulating itself from market fluctuations and the ongoing volatility and uncertainty in the cryptocurrency world. The downside, however, is that early employees may not get liquidity for their stock options until much later.

Wu has said that a Bitmain IPO would be a “landmark” for both the company and the cryptocurrency space. However, with private financing for crypto currently so plentiful, it’s not such a bad idea to continue to raise private money and stay out of the public eye. Once Bitmain’s financials become more diversified and cryptocurrency becomes more widely adopted worldwide, the world may then be ready for this blockchain company with $10 billion in revenue.

technologies with deep learning that understands human-to-human conversations. Its Otter.ai product digitizes all voice meetings and video conferences, makes every conversation searchable and also provides speech analytics and insights. Otter.ai is the exclusive provider of automatic meeting transcription for Zoom Video Communications.

technologies with deep learning that understands human-to-human conversations. Its Otter.ai product digitizes all voice meetings and video conferences, makes every conversation searchable and also provides speech analytics and insights. Otter.ai is the exclusive provider of automatic meeting transcription for Zoom Video Communications.

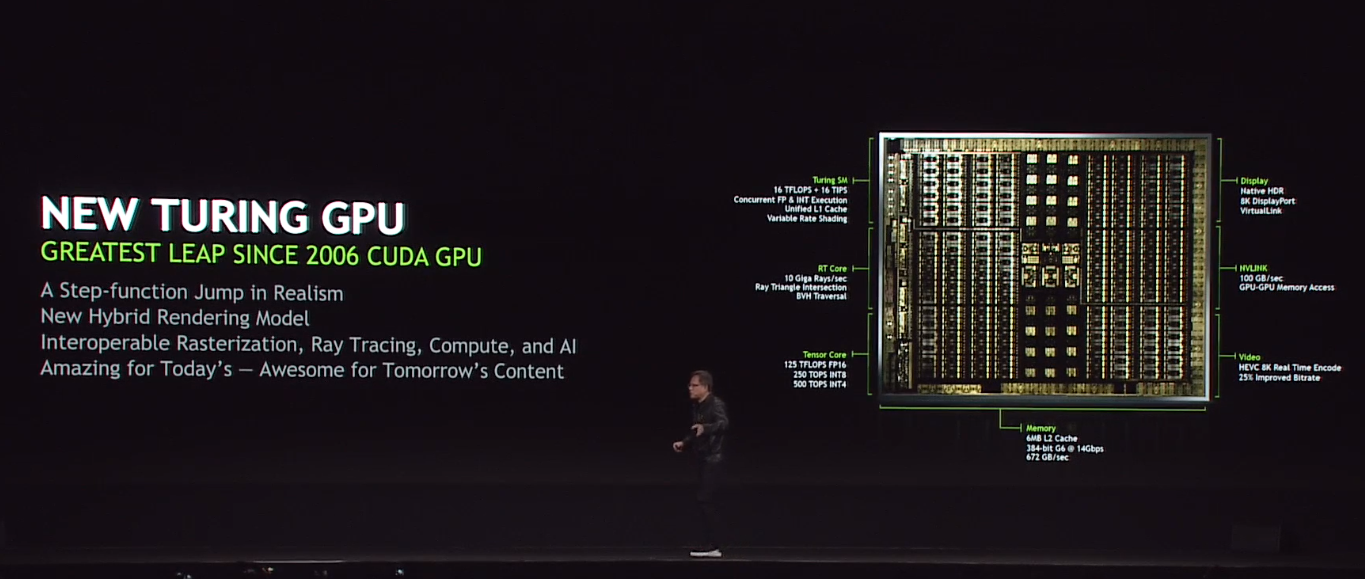

The AI part here is more important than it may seem at first. With NGX, Nvidia today also launched a new platform that aims to bring AI into the graphics pipelines. “NGX technology brings capabilities such as taking a standard camera feed and creating super slow motion like you’d get from a $100,000+ specialized camera,” the company explains, and also notes that filmmakers could use this technology to easily remove wires from photographs or replace missing pixels with the right background.

The AI part here is more important than it may seem at first. With NGX, Nvidia today also launched a new platform that aims to bring AI into the graphics pipelines. “NGX technology brings capabilities such as taking a standard camera feed and creating super slow motion like you’d get from a $100,000+ specialized camera,” the company explains, and also notes that filmmakers could use this technology to easily remove wires from photographs or replace missing pixels with the right background.

Nvidia will power artificial intelligence technology built into its future vehicles, including the new I.D. Buzz, its all-electric retro-inspired camper van concept. The partnership between the two companies also extends to the future vehicles, and will initially focus on so-called “Intelligent Co-Pilot” features, including using sensor data to make driving easier, safer and…

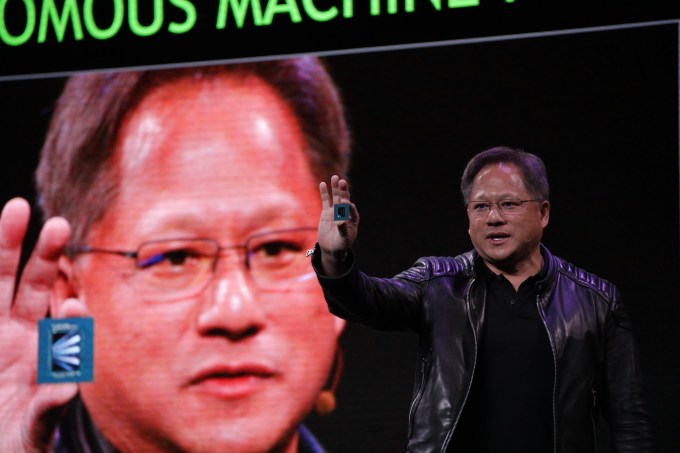

Nvidia will power artificial intelligence technology built into its future vehicles, including the new I.D. Buzz, its all-electric retro-inspired camper van concept. The partnership between the two companies also extends to the future vehicles, and will initially focus on so-called “Intelligent Co-Pilot” features, including using sensor data to make driving easier, safer and…  Nvidia revealed a lot of news about its Xavier autonomous machine intelligence processors at this year’s CES show in Las Vegas. The first production samples of the Xavier are now shipping out to customers, after being unveiled last year, and Nvidia also announced three new variants of its DRIVE AI platform, which are based around Xavier SoCs. The new DRIVE AI offerings include one focused…

Nvidia revealed a lot of news about its Xavier autonomous machine intelligence processors at this year’s CES show in Las Vegas. The first production samples of the Xavier are now shipping out to customers, after being unveiled last year, and Nvidia also announced three new variants of its DRIVE AI platform, which are based around Xavier SoCs. The new DRIVE AI offerings include one focused…