CES may be going ahead as a shortened, pared-down operation this year, but we’re still seeing a decent swathe of the announcements prepared for the event coming out into the wild, in particular from the chipmakers that power the world’s computers. Intel has been a regular presence at the show and is continuing that with its run of news today, focusing on the newest, 12th generation of its mobile chips, with versions aimed at both enterprises and consumers, alongside updates to its Evo computing platform concept, new 35- and 65-watt processors for desktop, and vPro platform launches.

With some of the lineup announced back in October (it has now dropped the Alder Lake naming that appeared still to be in use then), today’s news is arguably the biggest push that Intel has made in years to promote its processors and build for a range of use cases, from consumers through to more intense gaming, enterprise applications and IoT deployments. After what some described as a lacklustre 11th-generation launch, Intel is unveiling no fewer than 28 new 12th-generation Intel Core mobile processors and an additional 22 desktop processors.

Intel claims that the mobile processors are up to 40% faster than its previous generation.

“Intel’s new performance hybrid architecture is helping to accelerate the pace of innovation and the future of compute,” said Gregory Bryant, executive vice president and general manager of Intel’s Client Computing Group, in a statement. “We want to bring that idea of ubiquitous computing to life,” he added in a presentation today at CES.

The H-series of the 12th Gen Intel Core mobile processors comes in four main categories: i3, i5, i7, and i9. The i9-12900HK is the fastest of the range of eight, and the chips are among the first from Intel to combine performance and efficient cores on the same chip to better handle heavy workloads. They come with frequencies of up to 5GHz, up to 14 cores (6 for performance, 8 for efficiency) and 20 threads for multi-threaded applications. They also offer memory support for DDR5/LPDDR5 and DDR4/LPDDR4 modules at up to 4800 MT/s, which Intel says is a first in the industry for H-series mobile processors.
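The core and thread counts quoted above add up if you assume that each performance core runs two threads and each efficient core runs one. That split is an assumption here, not something spelled out in Intel's announcement as quoted, and the `HybridCpu` class below is purely illustrative. A minimal sketch of the arithmetic:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HybridCpu:
    """Illustrative model of a hybrid chip; not an Intel API."""

    performance_cores: int
    efficient_cores: int
    # Assumption: performance cores run two threads each.
    threads_per_performance_core: int = 2
    # Assumption: efficient cores run one thread each.
    threads_per_efficient_core: int = 1

    @property
    def total_cores(self) -> int:
        return self.performance_cores + self.efficient_cores

    @property
    def total_threads(self) -> int:
        return (
            self.performance_cores * self.threads_per_performance_core
            + self.efficient_cores * self.threads_per_efficient_core
        )


# The top configuration described in the article: 6 performance + 8 efficient cores.
chip = HybridCpu(performance_cores=6, efficient_cores=8)
print(chip.total_cores, chip.total_threads)
```

Under those assumptions, the six performance cores account for 12 threads and the eight efficient cores for the remaining eight, which matches the 14-core, 20-thread figure.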

They offer support for Deep Link for optimized power usage; Thunderbolt 4 for faster data transfers (up to 40 Gbps) and connectivity; and Intel’s new integrated WiFi 6E, which Intel dubs its “Killer” WiFi and which will be available in nearly all laptops running Intel’s 12th-generation chips. The interesting thing about this latest WiFi version is that it essentially optimizes for gameplay and other bandwidth-intensive activities. Latency is reduced by putting the most demanding applications on channels separate from the other applications on a device that might also be using bandwidth; those are relegated to lower-bandwidth channels and essentially run in the background. Bands can also be merged intelligently by the system when a specific application needs more bandwidth. All this will be available from February 2022, Intel said.

H-series, it added, is now in full production, with Acer, Dell, Gigabyte, HP, Lenovo, MSI, and Razer among those building machines using it, some 100 designs in all covering both the Windows and Chrome operating systems.

In terms of the applications that Intel is highlighting for its chips, in addition to enterprises and more casual consumers, it continues to focus on gaming. No surprise there, given that the demands of the most advanced games and gamers have become major drivers for improving compute power. Intel wants to make sure its 12th-generation chips are in that mix.

That has included both investing in gaming companies (such as Razer) and working closely with developers to optimize speeds on its processors. Intel said that its work on Hitman 3, for example, to have its chips support the audio and graphics processing in the game, increased frame rates by up to 8%.

“Tuning games to achieve maximum performance can be daunting,” says Maurizio de Pascale, chief technology officer at IO Interactive, in a statement. “But partnering with Intel and leveraging their decades of expertise has yielded fantastic results – especially optimizing for the powerful 12th Gen Intel Core processors. As an example, anyone who plays on a laptop

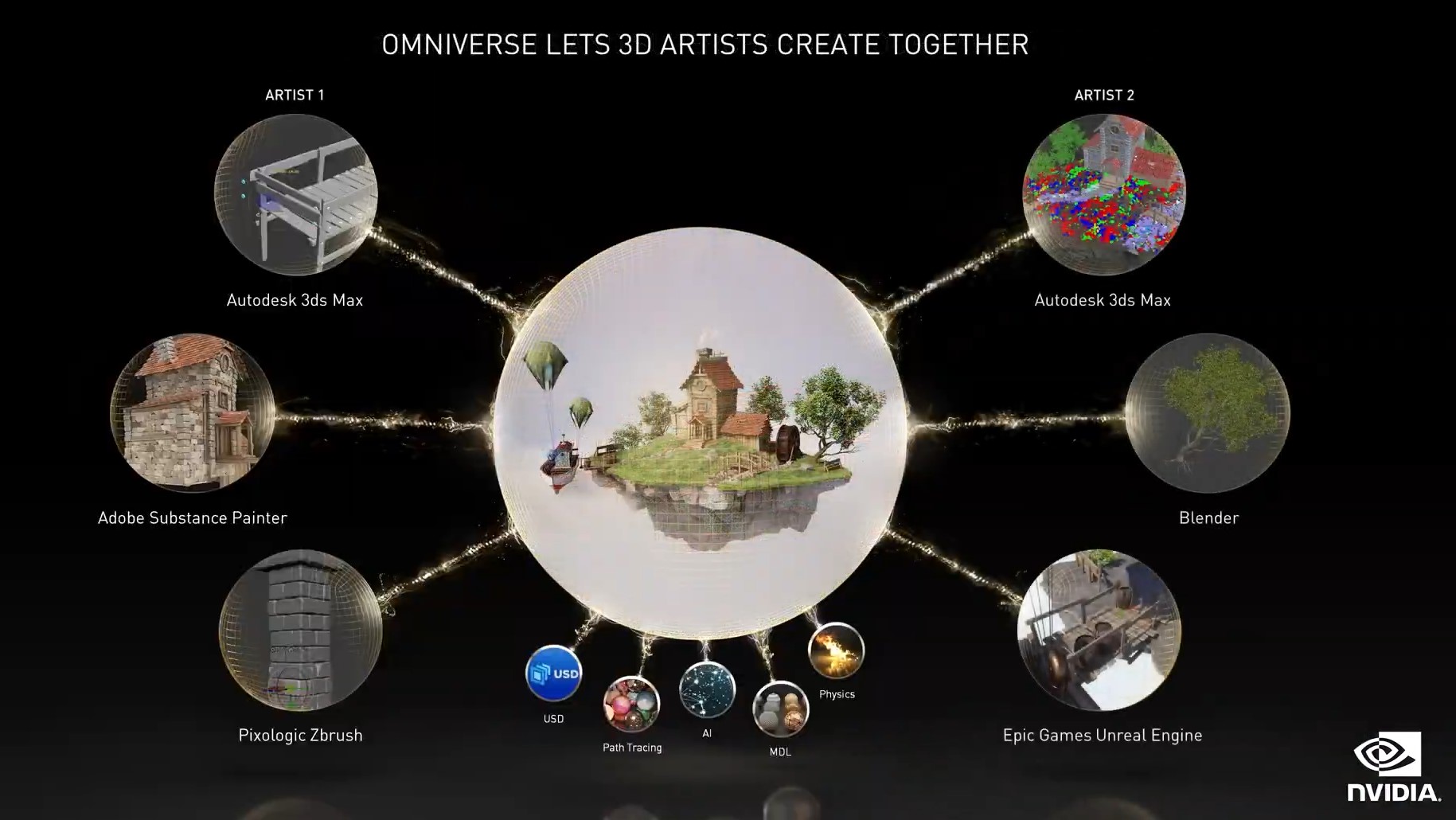

Content creation remains another major part of the market for Intel, with customers building software and hardware optimized for its chips including Adobe, Autodesk, Foundry, Blender, Dolby, Dassault, Magix and more. Indeed, the processor now sits at the centre of all of these, as they are now digital activities. They also represent a large number of verticals that Intel can target, including product designers, engineers, broadcasting and streaming, architects, creators, and scientific visualization.

The 22 new desktop processors being unveiled come in both 65-watt and 35-watt varieties. Alongside the higher-wattage (and thus more energy-consuming) chips, Intel also launched a new Laminar cooler.

Another strand of Intel’s work over the last several years has been to approach the specifications of computers running its chips in a more holistic way, integrating what it is building with where it can be put to use, by way of its Evo platform and Project Athena. Intel said that there are now more than 100 co-engineered designs using the 12th-generation chips based on these, ranging from foldable displays to more standard laptops, with many of them launching during the first half of this year.

Evo specifications already cover responsiveness, battery life, instant wake, and fast charge, and Intel said that it is adding a new parameter to that range, “intelligent collaboration.” This will focus on how many of us are using computers today: for remote collaboration and videoconferencing, and the features that make these better, such as AI-based noise cancellation, better WiFi usage, and enhanced camera and other imaging effects. This is likely where its $100-$150 million acquisition of screen mirroring tech provider Screenovate, which it confirmed in December, will fit.

Intel continues to face a lot of competition from the likes of AMD and Nvidia, and Apple is making yet more moves to distance itself from the company. Against that backdrop, the strategy Intel will continue to pursue is to move ahead, reinforce the partners that it does have, and build an ecosystem around them, as long as it keeps up its end of the innovation bargain.

“Microsoft and Intel have a long history of partnering together to deliver incredible performance and seamless experiences to people all over the world,” said Panos Panay, chief product officer at Microsoft, in a statement. “Whether playing the latest AAA title, encoding 8K video or developing complex geological models, the combination of Windows 11 and the new 12th-gen Intel Core mobile processors means you’re getting a powerhouse experience.”