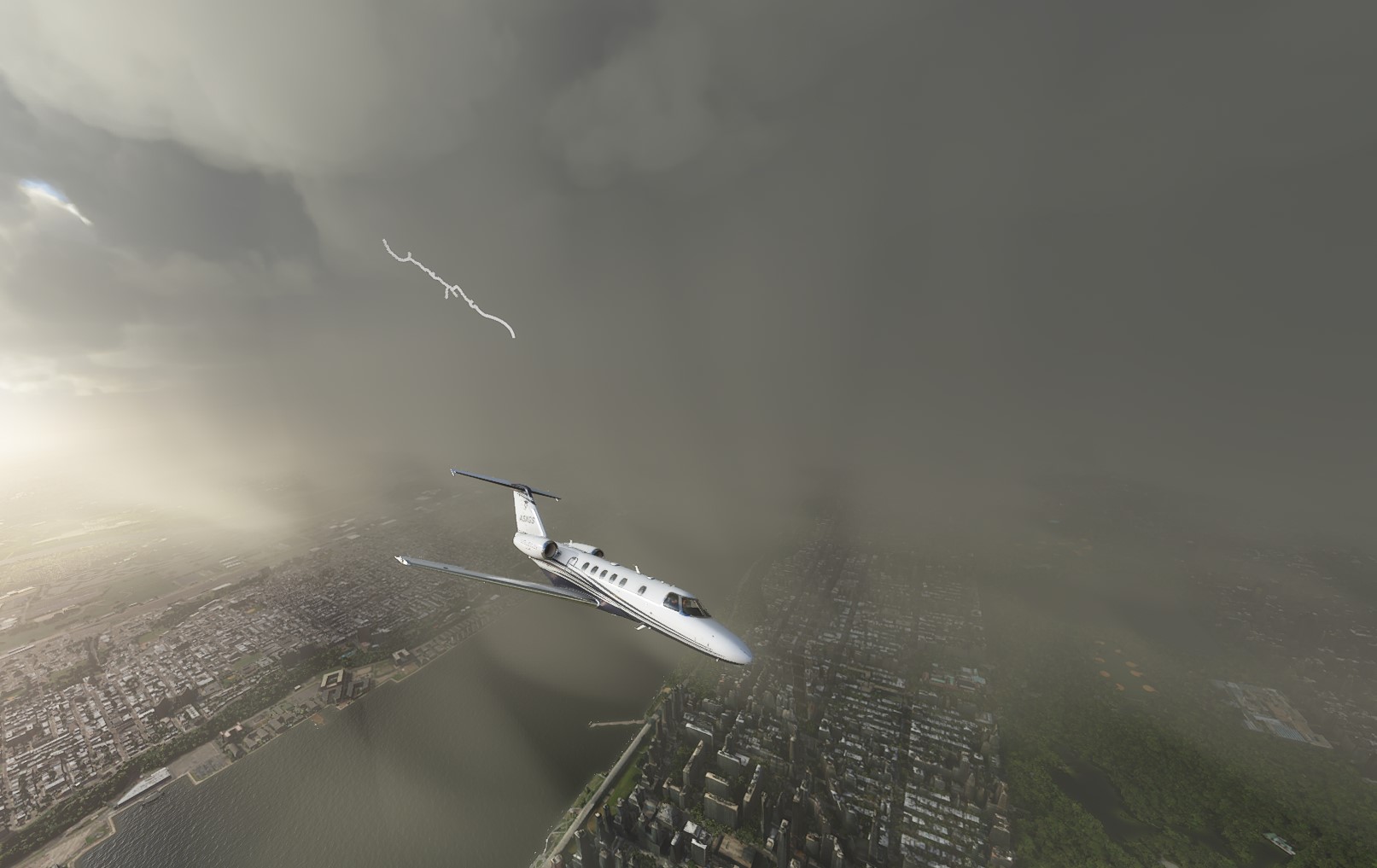

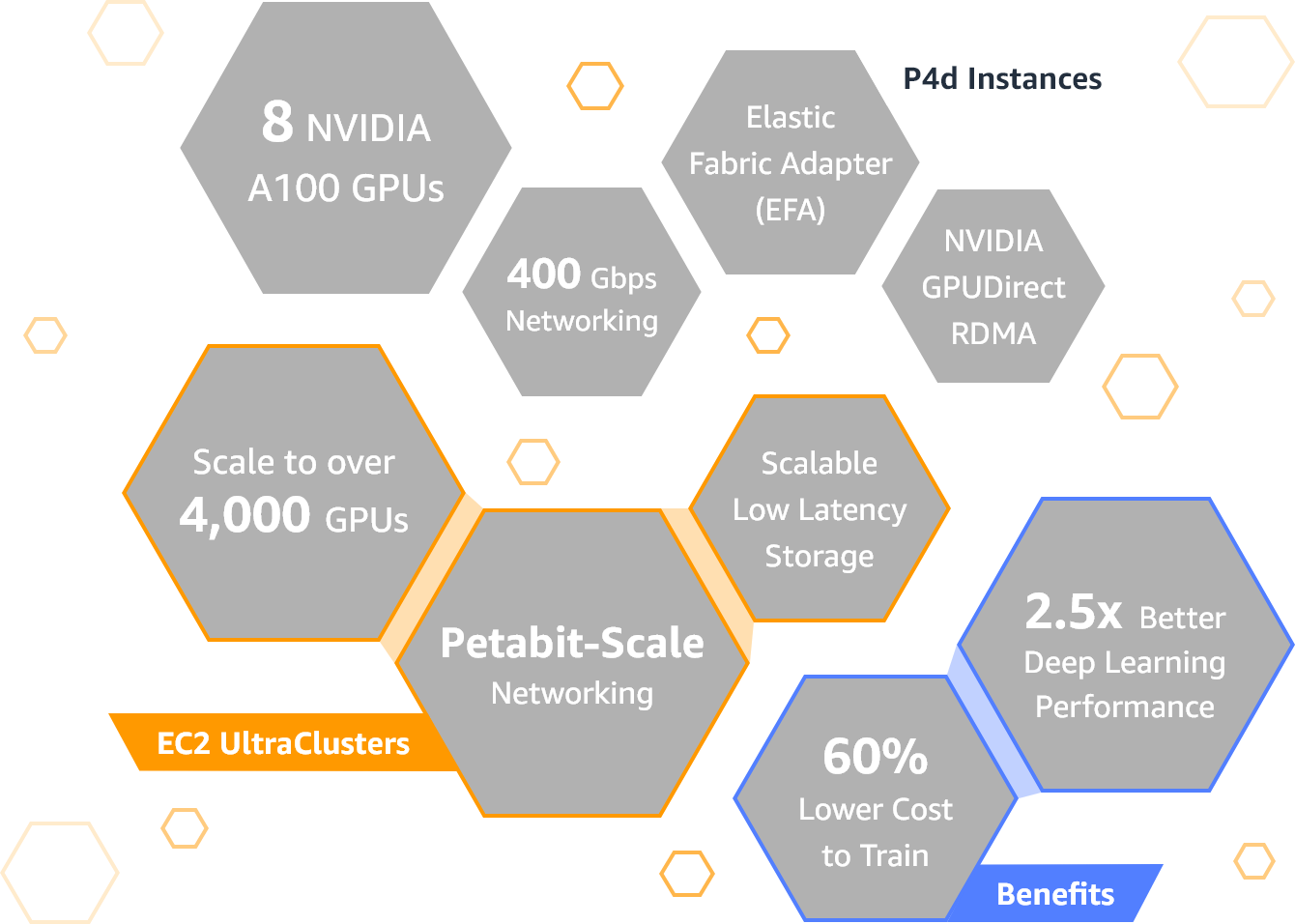

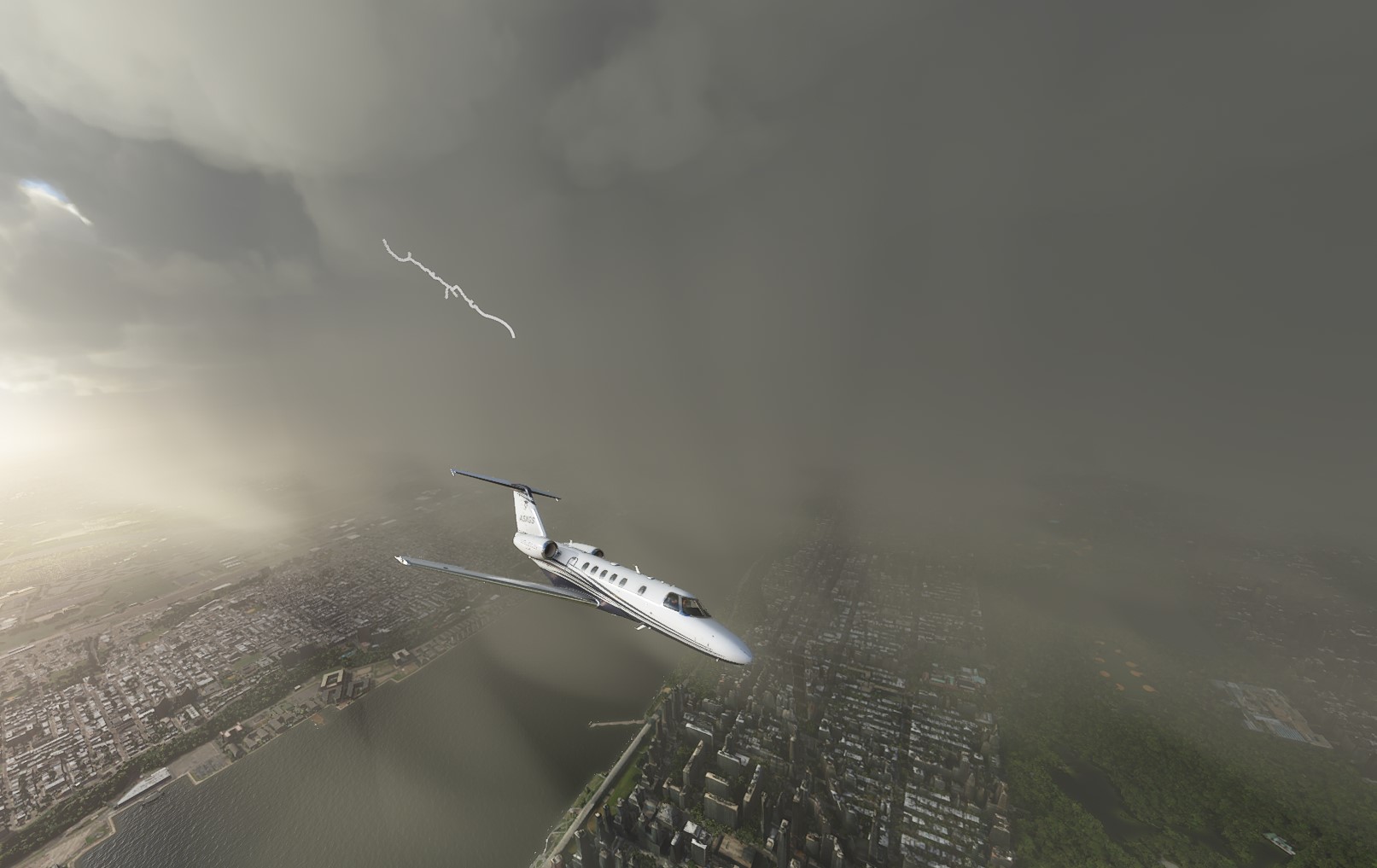

For the last two weeks, I’ve been flying around the world in a preview of Microsoft’s new Flight Simulator. Without a doubt, it’s the most beautiful flight simulator yet, and it’ll make you want to fly low and slow over your favorite cities because — if you pick the right one — every street and house will be there in more detail than you’ve ever seen in a game. Weather effects, day and night cycles, plane models — it all looks amazing. You can’t start it up and not fawn over the graphics.

But the new Flight Simulator is also still very much a work in progress, even just a few weeks before its scheduled August 18 launch. It's officially still in beta, so there's time to fix at least some of the issues I list below. Microsoft and Asobo Studio, which developed the simulator, use Microsoft's AI tech in Azure to automatically generate much of the scenery from Microsoft's Bing Maps data. As a result, you'll find a lot of weirdness in the world. There are taxiway lights in the middle of runways, giant hangars and crew buses at small private fields, cars randomly driving across airports, giant trees growing everywhere (while palms often look like giant sticks), and bridges that are either underwater or big black blocks over a river. There are a lot of sunken boats, too.

When the system works well, it’s absolutely amazing. Cities like Barcelona, Berlin, San Francisco, Seattle, New York and others that are rendered using Microsoft’s photogrammetry method look great — including and maybe especially at night.

Image Credits: Microsoft

The rendering engine on my i7-9700K with an Nvidia RTX 2070 Super graphics card never let the frame rate drop below 30 frames per second (which is perfectly fine for a flight simulator) and usually hovered well above 40, all with the graphics settings at their maximum and at 2K resolution.

When things don’t work, though, the effect is stark because it’s so obvious. Some cities, like Las Vegas, look like they suffered some kind of catastrophe, as if the city was abandoned and nature took over (which in the case of the Vegas Strip doesn’t sound like such a bad thing, to be honest).

Image Credits: TechCrunch

Thankfully, all of this is something that Microsoft and Asobo can fix. They’ll just need to adjust their algorithms, and because a lot of the data is streamed, the updates should be virtually automatic. The fact that they haven’t done so yet is a bit of a surprise.

Image Credits: TechCrunch

Chances are you'll want to fly over your house the day you get Flight Simulator. If you live in the right city (and the right part of that city), you'll likely be lucky and actually see your house with its own texture. Other cities, including London, only get standard textures. Microsoft does a good job of matching building outlines in cities where it doesn't use photogrammetry, but it's odd that London and Amsterdam aren't among the photogrammetry cities (though London apparently now features a couple of wind turbines in the city center), while Münster, Germany, is.

Once you get to altitude, all of those problems obviously go away (or at least you won’t see them). But given the graphics, you’ll want to spend a lot of time at 2,000 feet or below.

Image Credits: TechCrunch

What really struck me while playing the game in its current state is how those graphical inconsistencies set the tone for the rest of the experience. The team says its focus is 100% on making the simulator as realistic as possible, yet the virtual air traffic control often doesn't use standard phraseology and sometimes fails to hand you off to the right departure control when you leave a major airport. The airplane models look great and feel pretty close to the real thing (at least the ones I've flown myself), but some currently show the wrong airspeed. Some planes have modern glass cockpits with the Garmin G1000 and G3X, but those avionics still feel severely limited.

But let me be clear here. Despite all of this, even in its beta state, Flight Simulator is a technical marvel and it will only get better over time.

Image Credits: TechCrunch

Let's walk through the user experience a bit. On PC (the Xbox version will come at some point in the future), the install downloads a good 90GB so that you can also play offline. The installer also asks whether you're OK with streaming data, and that can quickly add up: after reinstalling the game and doing a few flights for screenshots, the game had already downloaded about 10GB. That's something to be aware of if you're on a metered connection.


Once past the long install, you'll be greeted by a menu screen that lets you start a new flight, try one of the landing challenges or other activities the team has set up (they are really proud of their Courchevel scenery) or go through the game's flight training program.

Image Credits: Microsoft

That training section walks you through eight activities covering the basics of flying a Cessna 152. Most take less than 10 minutes and end with a short debrief, but I'm not sure it's enough to keep a novice from getting frustrated quickly (while more advanced players will likely skip this section altogether anyway).

I mostly spent my time flying the small general aviation planes in the sim, but if you prefer a Boeing 747 or an Airbus A320neo, you get that option, too, along with some turboprops and business jets. I'll spend more time with those before the official launch. All of the planes are beautifully detailed inside and out, and apart from a few bugs, everything works as expected.

To actually start playing, you'll head to the world map and choose where to start your flight. What's nice here is that you can pick any spot on the map, not just airports, which makes it easy to start flying over a city, for example. As you zoom into the map, you can see airports and landmarks (the landmarks are either real sights like Germany's Neuschwanstein Castle or cities that have photogrammetry data). If a town doesn't have photogrammetry data, it won't appear on the map.

As of now, the flight planning features are pretty basic. For visual flights, you can go direct or VOR to VOR, and that's it. For IFR flights, you choose low- or high-altitude airways. You can't really adjust any of these; you just accept the route the simulator gives you. That's not really how flight planning works (at the very least, you would want to take the local weather into account), so it would be nice if you could customize your route a bit more. Microsoft partnered with NavBlue for airspace data, though the built-in maps don't do much with it and don't even show you the vertical boundaries of the airspace you're in.

Image Credits: TechCrunch

It's always hard to compare how the plane models handle against the real thing. As best I can tell, the single-engine Cessnas I'm familiar with mostly handle the way I would expect them to in reality. The rudder controls feel a bit oversensitive by default, but that's relatively easy to adjust. I only played with a HOTAS-style joystick and rudder setup; I wouldn't recommend playing with a mouse and keyboard, but your mileage may vary.

Live traffic works well, but none of the general aviation traffic around my local airports seems to show up, even though Microsoft partner FlightAware shows it.

As for real and AI traffic in general, the sim does a pretty good job managing it. In the beta, you mostly won't see real airline liveries yet, though I spotted the occasional United plane in the latest builds. Judging by some of Microsoft's own videos, more are coming soon. Beyond the built-in models you can fly yourself, Flight Simulator is still missing a library of other airplane models for AI traffic, though again, I would assume that's in the works, too.

Image Credits: TechCrunch

We’re three weeks out from launch. I would expect the team to be able to fix many of these issues and we’ll revisit all of them for our final review. My frustration with the current state of the game is that it’s so often so close to perfect that when it falls short of that, it’s especially jarring because it yanks you out of the experience.

Don't get me wrong, though: flying in FS2020 is already a great experience. Even without photogrammetry, cities and villages look great once you get above 3,000 feet or so. The real-time weather and cloud simulation beats any add-on for today's flight simulators. Airports still need work, but having cars drive around and flaggers walk around planes as they push back helps make the world feel more alive. Wind affects the waves on lakes and oceans (and the windsocks at airports). This is truly a next-generation flight simulator.

Image Credits: Microsoft

Microsoft and Asobo have to walk a fine line between making Flight Simulator the sim that hardcore fans want and an accessible game that brings in new players. I've played every version of Flight Simulator since the 1990s, so getting started took exactly zero time. My sense is that new players simply looking for a good time may feel a bit lost at first, despite Microsoft adding landing challenges and other more gamified elements to the sim. In a press briefing, the Asobo team repeatedly stressed that it aimed for realism above everything else, and I'm perfectly OK with that. We'll have to see whether that translates into a fun experience for casual players, too.
