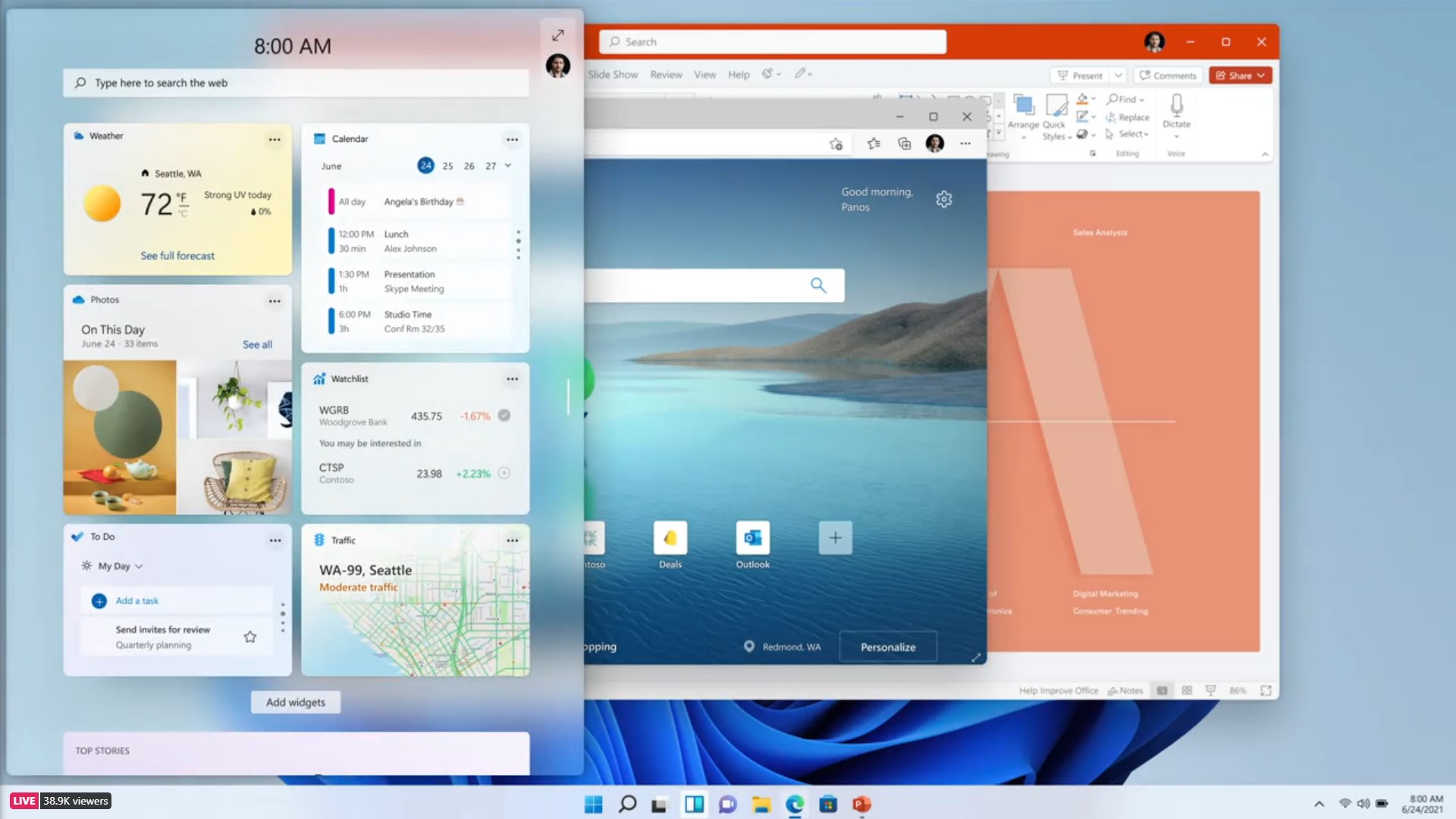

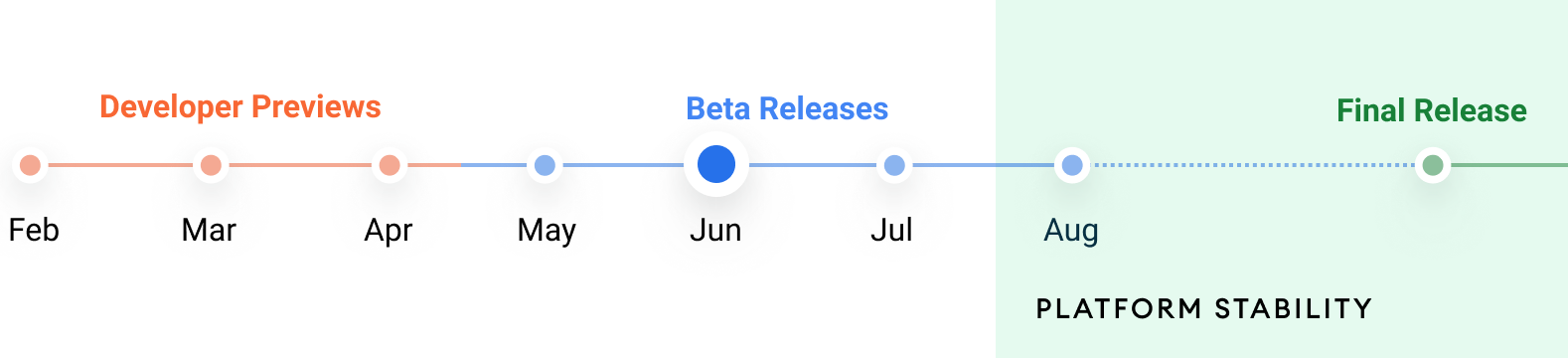

With the upcoming release of iOS 15 for Apple mobile devices, Apple’s built-in search feature known as Spotlight will become a lot more functional. In what may be one of its bigger updates since it introduced Siri Suggestions, the new version of Spotlight is becoming an alternative to Google for several key queries, including web images and information about actors, musicians, TV shows and movies. It will also now be able to search across your photo library, deliver richer results for contacts, and connect you more directly with apps and the information they contain. It even allows you to install apps from the App Store without leaving Spotlight itself.

Spotlight is also more accessible than ever before.

Years ago, in iOS 7, Spotlight moved from its own page to the left of the Home screen to a swipe-down gesture from the middle of the screen, a change that helped grow user adoption. Now, the same swipe-down gesture works on the iPhone’s Lock Screen, too.

Apple showed off a few of Spotlight’s improvements during the keynote address at its Worldwide Developers Conference, including the search feature’s new cards for looking up information on actors, movies, shows and musicians. This change alone could redirect a good portion of web searches away from Google or dedicated apps like IMDb.

For years, Google has offered quick access to common searches through its Knowledge Graph, a knowledge base that lets it gather information from many sources and use it to add informational panels above and to the side of its standard search results. Panels on actors, musicians, shows and movies are part of that effort.

But now, iPhone users can just pull up this info from their Home screen.

The new cards include more than the typical Wikipedia bio and background information you may expect. They also showcase links to where you can listen to or watch content from the artist, actor, movie or show in question. They include news articles, social media links and official websites, and can even direct you to where the searched person or topic may be found inside your own apps. (For example, a search for “Billie Eilish” may direct you to her tour tickets inside SeatGeek, or to a podcast where she’s a guest.)

Image Credits: Apple

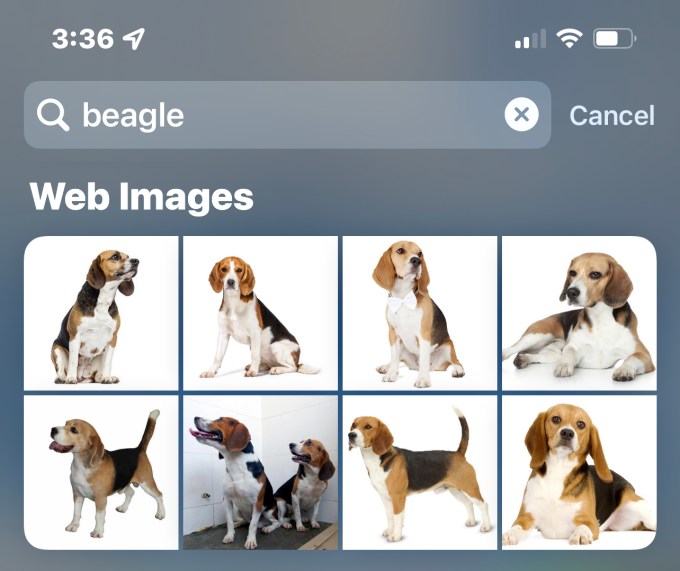

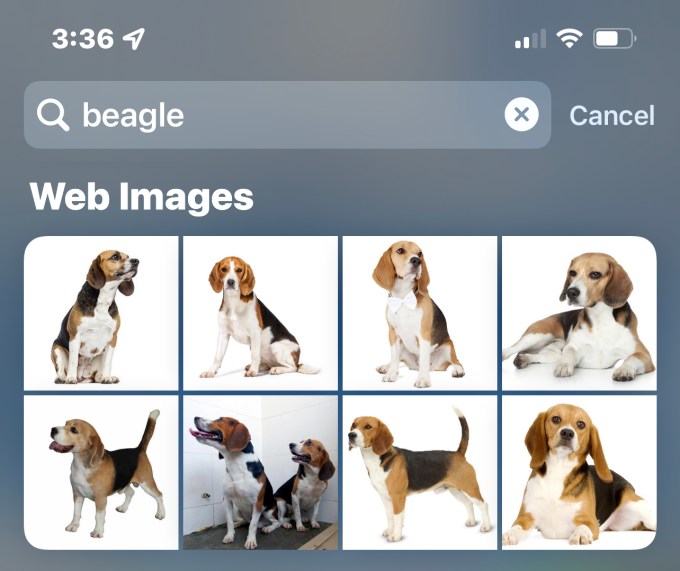

For web image searches, Spotlight now also lets you search the web for images of people, places, animals and more, eating into another search vertical that Google provides today.

Image Credits: iOS 15 screenshot

In iOS 15, your personal searches get richer results, too.

When you search for a contact, you’ll be taken to a card that does more than show their name and how to reach them. You’ll also see their current status (thanks to another iOS 15 feature), their location from Find My, your recent conversations in Messages, and your shared photos, calendar appointments, emails, notes and files. It’s almost like a personal CRM system.

Image Credits: Apple

Personal photo searches have also been improved. Spotlight now uses Siri intelligence to let you search your photos by the people, scenes and elements they contain, as well as by location. It can also use the new Live Text feature in iOS 15 to find text inside your photos and return relevant results.

This could make it easier to pull up screenshots or photos of a recipe, a store receipt or even a handwritten note, Apple said.

Image Credits: Apple

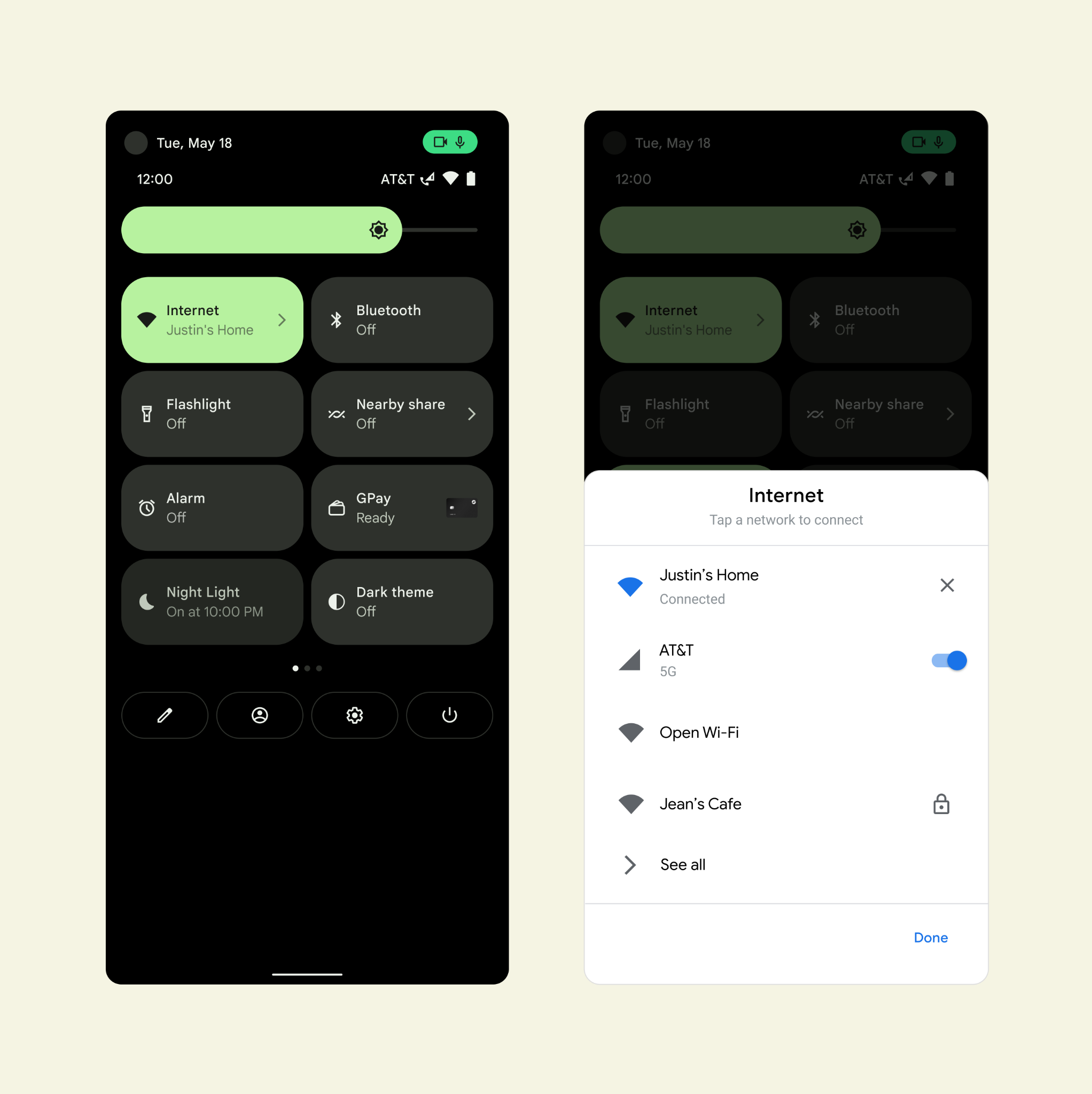

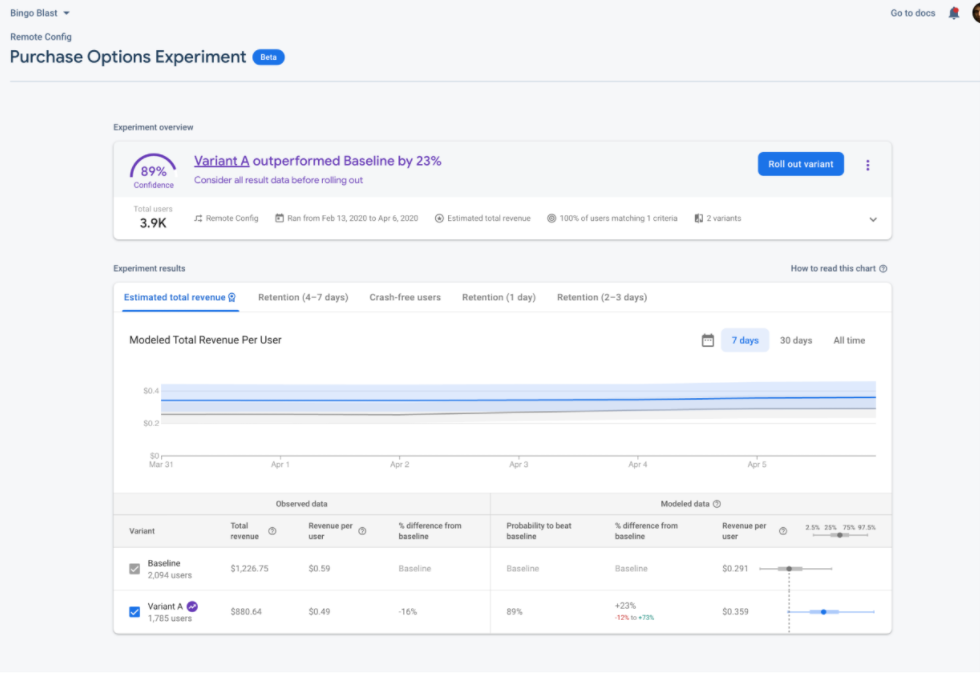

A couple of features related to Spotlight’s integration with apps weren’t mentioned during the keynote.

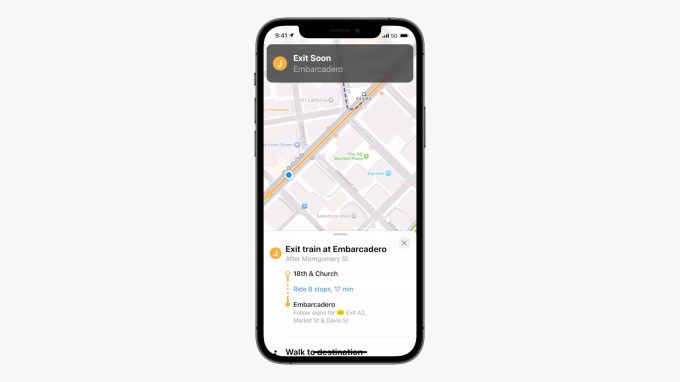

Spotlight will now display action buttons on the Maps results for businesses that will prompt users to engage with that business’s app. In this case, the feature is leveraging App Clips, which are small parts of a developer’s app that let you quickly perform a task even without downloading or installing the app in question. For example, from Spotlight you may be prompted to pull up a restaurant’s menu, buy tickets, make an appointment, order takeout, join a waitlist, see showtimes, pay for parking, check prices and more.

The feature will require the business to support App Clips in order to work.

Image Credits: iOS 15 screenshot

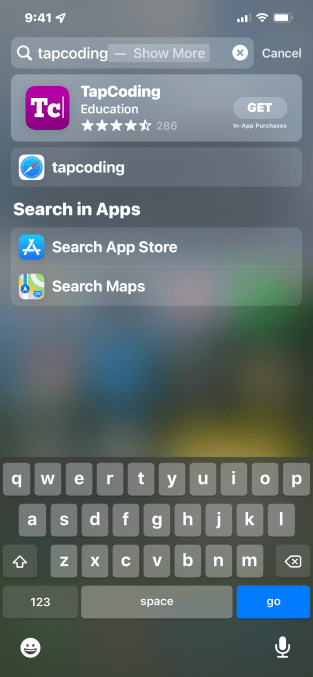

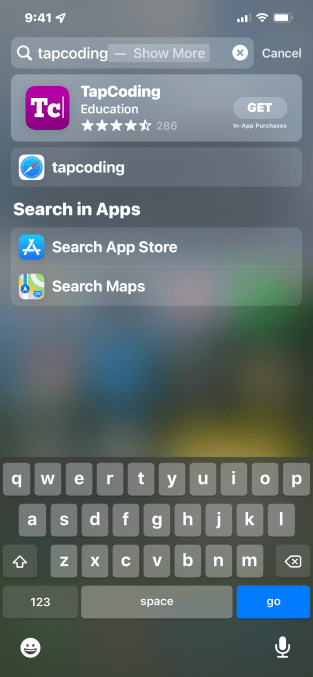

Another under-the-radar but significant change is the new ability to install apps from the App Store directly from Spotlight.

This could prompt more app installs, as it reduces the steps from a search to a download, and makes querying the App Store more broadly available across the operating system.

Developers can also add a few lines of code to their apps to make in-app data discoverable in Spotlight and to customize how it’s presented to users. This means Spotlight can work as a tool for searching content inside apps, another way Apple is steering users away from traditional web searches in favor of apps.

However, unlike Google’s search engine, which relies on crawlers that browse the web to index the data it contains, Spotlight’s in-app search requires developer adoption.

Still, it’s clear Apple sees Spotlight as a potential rival to web search engines, including Google’s.

“Spotlight is the universal place to start all your searches,” said Apple SVP of Software Engineering Craig Federighi during the keynote event.

Spotlight, of course, can’t handle “all” your searches just yet, but it appears to be steadily working towards that goal.