It’s been over five years since NSA whistleblower Edward Snowden lifted the lid on government mass surveillance programs, revealing, in unprecedented detail, quite how deep the rabbit hole goes thanks to the spread of commercial software and connectivity enabling a bottomless intelligence-gathering philosophy of ‘bag it all’.

Yet technology’s onward march has hardly broken its stride.

Government spying practices are perhaps more scrutinized, as a result of awkward questions about out-of-date legal oversight regimes. Though whether the resulting legislative updates, putting an official stamp of approval on bulk and/or warrantless collection as a state spying tool, have put Snowden’s ethical concerns to bed seems doubtful — albeit, it depends on who you ask.

The UK’s post-Snowden Investigatory Powers Act continues to face legal challenges. And the government has been forced by the courts to unpick some of the powers it helped itself to vis-à-vis people’s data. But bulk collection, as an official modus operandi, has been both avowed and embraced by the state.

In the US, too, lawmakers elected to push aside controversy over a legal loophole that provides intelligence agencies with a means for the warrantless surveillance of American citizens — re-stamping Section 702 of FISA for another six years. So of course they haven’t cared a fig for non-US citizens’ privacy either.

Increasingly powerful state surveillance is seemingly here to stay, with or without adequately robust oversight. And commercial use of strong encryption remains under attack from governments.

But there’s another end to the surveillance telescope. As I wrote five years ago, those who watch us can expect to be — and indeed are being — increasingly closely watched themselves as the lens gets turned on them:

“Just as our digital interactions and online behaviour can be tracked, parsed and analysed for problematic patterns, pertinent keywords and suspicious connections, so too can the behaviour of governments. Technology is a double-edged sword – which means it’s also capable of lifting the lid on the machinery of power-holding institutions like never before.”

We’re now seeing some of the impacts of this surveillance technology cutting both ways.

With attention to detail, good connections (in all senses) and the application of digital forensics, all sorts of discrete data dots can be linked, enabling official narratives to be interrogated and unpicked with technology-fuelled speed.

Witness, for example, how quickly the Kremlin’s official line on the Skripal poisonings unravelled.

After the UK released CCTV last month of two Russian suspects in the Novichok attack in Salisbury, the speedy counter-claim from Russia, presented most obviously via an ‘interview’ with the two ‘citizens’ conducted by state mouthpiece broadcaster RT, was that the men were just tourists with a special interest in the cultural heritage of the small English town.

Nothing to see here, claimed the Russian state, even though the two unlikely tourists didn’t appear to have done much actual sightseeing on their flying visit to the UK during the tail end of a British winter (unless you count vicarious viewing of Salisbury’s Wikipedia page).

But digital forensics outfit Bellingcat, partnering with investigative journalists at The Insider Russia, quickly found plenty to dig up online, aided by data-providing tips. (We can only speculate who those whistleblowers might be.)

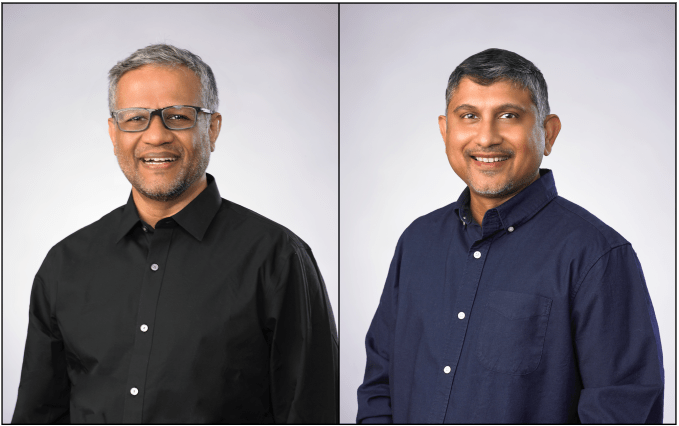

Their investigation made use of a leaked database of Russian passport documents; passport scans provided by sources; publicly available online videos and selfies of the suspects; and even visual computing expertise to academically cross-match photos taken 15 years apart — to, within a few weeks, credibly unmask the ‘tourists’ as two decorated GRU agents: Anatoliy Chepiga and Dr Alexander Yevgeniyevich Mishkin.

When an official narrative that already lacks credibility is set against an external investigation able to closely show its workings and sources (where possible), and thus demonstrate how reasonably constructed and plausible the counter-narrative is, there’s little doubt where the real authority is being shown to lie.

And who the real liars are.

That the Kremlin lies is hardly news, of course. But when its lies are so painstakingly and publicly unpicked, and its veneer of untruth ripped away, there is undoubtedly reputational damage to the authority of Vladimir Putin.

The sheer depth and availability of data in the digital era supports faster-than-ever evidence-based debunking of official fictions, threatening to erode rogue regimes built on lies by pulling away the curtain that invests their leaders with power in the first place — by implying the scope and range of their capacity and competency is unknowable, and letting other players on the world stage accept such a ‘leader’ at face value.

The truth about power is often far more stupid and sordid than the fiction. So a powerful abuser, with their workings revealed, can be reduced to their baser parts — and shown for the thuggish and brutal operator they really are, as well as proved a liar.

On the stupidity front, in another recent and impressive bit of cross-referencing, Bellingcat was able to turn passport data pertaining to another four GRU agents — whose identities had been made public by Dutch and UK intelligence agencies (after they had been caught trying to hack into the network of the Organisation for the Prohibition of Chemical Weapons) — into a long list of 305 suggestively linked individuals also affiliated with the same GRU military unit, and whose personal data had been sitting in a publicly available automobile registration database… Oops.

There’s no doubt certain governments have wised up to the power of public data and are actively releasing key info into the public domain where it can be pored over by journalists and interested citizen investigators, be that CCTV imagery of suspects or actual passport scans of known agents.

A cynic might call this selective leaking. But while the choice of what to release may well be self-serving, the veracity of the data itself is far harder to dispute. Exactly because it can be cross-referenced with so many other publicly available sources and so made to speak for itself.

Right now, we’re in the midst of another fast-unfolding example of surveillance apparatus and public data standing in the way of dubious state claims — in the case of the disappearance of Washington Post journalist Jamal Khashoggi, who went into the Saudi consulate in Istanbul on October 2 for a pre-arranged appointment to collect papers for his wedding and never came out.

Saudi authorities first tried to claim Khashoggi left the consulate the same day, though did not provide any evidence to back up their claim. And CCTV clearly showed him going in.

Yesterday they finally admitted he was dead — but are now trying to claim he died quarrelling in a fistfight, attempting to spin another after-the-fact narrative to cover up and blame-shift the targeted slaying of a journalist who had written critically about the Saudi regime.

Since Khashoggi went missing, CCTV and publicly available data has also been pulled and compared to identify a group of Saudi men who flew into Istanbul just prior to his appointment at the consulate; were caught on camera outside it; and left Turkey immediately after he had vanished.

Including naming a leading Saudi forensics doctor, Dr Salah Muhammed al-Tubaigy, as being among the party that Turkish government sources also told journalists had been carrying a bone saw in their luggage.

Men in the group have also been linked to Saudi crown prince Mohammed bin Salman, via cross-referencing travel records and social media data.

“In a 2017 video published by the Saudi-owned Al Ekhbariya on YouTube, a man wearing a uniform name tag bearing the same name can be seen standing next to the crown prince. A user with the same name on the Saudi app Menom3ay is listed as a member of the royal guard,” writes the Guardian, joining the dots on another suspected henchman.

A marked element of the Khashoggi case has been the explicit descriptions of his fate leaked to journalists by Turkish government sources, who have said they have recordings of his interrogation, torture and killing inside the building — presumably via bugs either installed in the consulate itself or via intercepts placed on devices held by the individuals inside.

This surveillance material has reportedly been shared with US officials, where it must be shaping the geopolitical response — making it harder for President Trump to do what he really wants to do, and stick like glue to a regional US ally with which he has his own personal financial ties, because the arms of that state have been recorded in the literal act of cutting off the fingers and head of a critical journalist, and then sawing up and disposing of the rest of his body.

Attempts by the Saudis to construct a plausible narrative to explain what happened to Khashoggi when he stepped over its consulate threshold to pick up papers for his forthcoming wedding have failed in the face of all the contrary data.

Meanwhile, the search for a body goes on.

And attempts by the Saudis to shift blame for the heinous act away from the crown prince himself are also being discredited by the weight of data…

And while it remains to be seen what sanctions, if any, the Saudis will face from Trump’s conflicted administration, the crown prince is already being hit where it hurts by the global business community withdrawing in horror from the prospect of being tainted by bloody association.

The idea that a company as reputation-sensitive as Apple would be just fine investing billions more alongside the Saudi regime, in SoftBank’s massive Vision Fund vehicle, seems unlikely, to say the least.

Thanks to technology’s surveillance creep the world has been given a close-up view of how horrifyingly brutal the Saudi regime can be — and through the lens of an individual it can empathize with and understand.

Safe to say, supporting second acts for regimes that cut off fingers and sever heads isn’t something any CEO would want to become famous for.

The power of technology to erode privacy is clearer than ever. Down to the very teeth of the bone saw. But what’s also increasingly clear is that powerful and at times terrible capability can be turned around to debase power itself — when authorities themselves become abusers.

So the flip-side of the surveillance state can be seen in the public airing of the bloody colors of abusive regimes.

Turns out, microscopic details can make all the difference to geopolitics.

RIP Jamal Khashoggi

It’s absolutely necessary for your business to update Windows 10, mainly for the security patches that will protect your business. Updating basically puts your computers on hold, but this is much, much better than hackers exploiting gaps in unpatched systems. Need to speed up the waiting process? You can with these tips!

Updating your Windows 10 is an essential security measure for protecting your business from threats such as malware or ransomware. It’s free! And you don’t have to do much, all you have to do is wait. However, for some, that’s the downside. Can’t stand waiting? One of these will speed up your Windows 10 update.


In May 2019, Microsoft will be releasing another Windows 10 major update with security patches, bug fixes, and new features. More than improving user experience, these updates will help your organization secure your IT systems. If you can’t afford to let an update be a long and frustrating process, here are some tips that will speed it up.

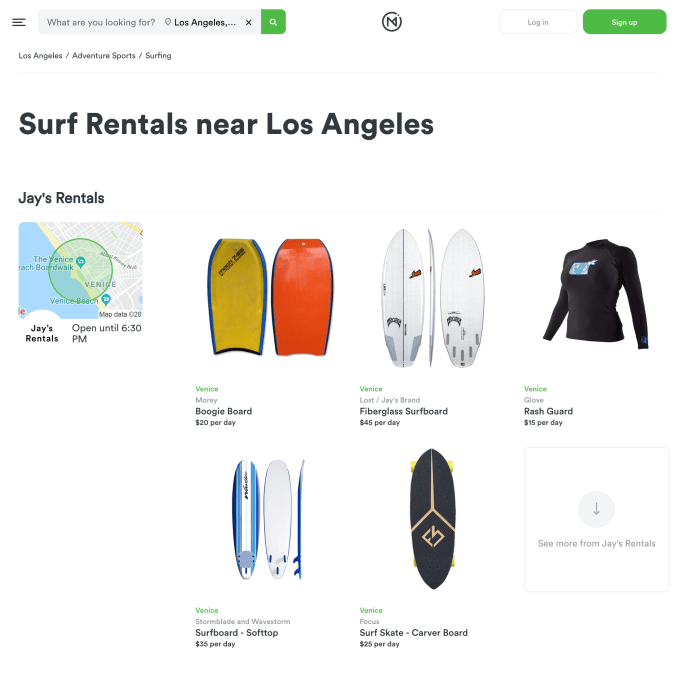

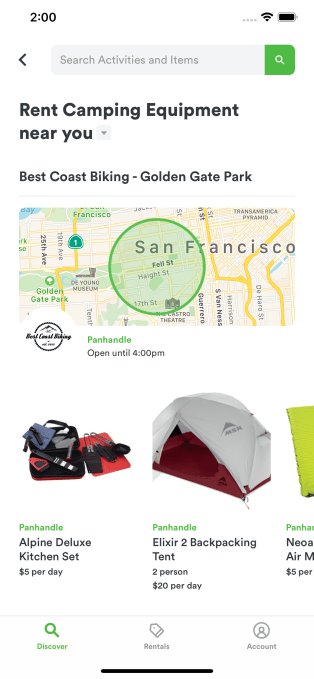

Meanwhile, Omni noticed some semi-pro renters had cropped up on its platform who were buying tons of a popular item like chairs on Amazon, shipping them to its warehouse, then renting them out and quickly recouping their costs. It saw an opportunity to partner with local retailers who could give it instant supplies of items in new markets while handling all the pick up and drop off logistics.
