Google is playing catch-up in the cloud, and it wants to offer flexibility as a way to differentiate itself from AWS and Microsoft. Today, the company announced a couple of new options to help it stand out from the cloud storage pack.

Storage may seem stodgy, but it’s a primary building block for many cloud applications. Before you can build an application, you need the data that will drive it, and that’s where the storage component comes into play.

One of the issues companies face as they move data to the cloud is keeping it close to the application that needs it, in order to reduce latency. Customers also require redundancy in the event of a catastrophic failure, but still need low-latency access. Getting both at once has been a hard problem to solve, and it is the one Google is targeting with the new dual-regional storage option it introduced today.

As Google described it in the blog post announcing the new feature, “With this new option, you write to a single dual-regional bucket without having to manually copy data between primary and secondary locations. No replication tool is needed to do this and there are no network charges associated with replicating the data, which means less overhead for you storage administrators out there. In the event of a region failure, we transparently handle the failover and ensure continuity for your users and applications accessing data in Cloud Storage.”

This allows companies to have redundancy with low latency, while controlling where their data is stored without having to move it manually should the need arise.
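To make the idea concrete, here is a minimal sketch in Python of the behavior Google describes: one write to a single logical bucket, copies in two regions, and reads that fall back to the surviving region. This is a toy in-memory model, not Google's API or implementation. The names `Region`, `DualRegionBucket`, `region-a` and `region-b` are hypothetical, and the sketch ignores what happens to writes made during an outage.

```python
from dataclasses import dataclass, field


@dataclass
class Region:
    """One storage location holding a copy of every object (hypothetical)."""
    name: str
    available: bool = True
    objects: dict = field(default_factory=dict)


class DualRegionBucket:
    """Toy model of a single logical bucket backed by two regions."""

    def __init__(self, primary: str, secondary: str):
        self.regions = [Region(primary), Region(secondary)]

    def write(self, key: str, data: bytes) -> None:
        # The caller writes once; the bucket keeps a copy in each region,
        # so no separate replication tool is involved.
        for region in self.regions:
            region.objects[key] = data

    def read(self, key: str):
        # Serve from the first region that is up. The caller never has to
        # pick a region, which is the "transparent failover" in the quote.
        for region in self.regions:
            if region.available:
                return region.name, region.objects[key]
        raise RuntimeError("both regions are unavailable")


bucket = DualRegionBucket("region-a", "region-b")
bucket.write("report.csv", b"q1,q2\n")

# Simulate losing the primary region, then read as usual.
bucket.regions[0].available = False
served_by, data = bucket.read("report.csv")
print(served_by, data)
```

The calling code is the same before and after the simulated outage, which is the property the new option is meant to provide.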

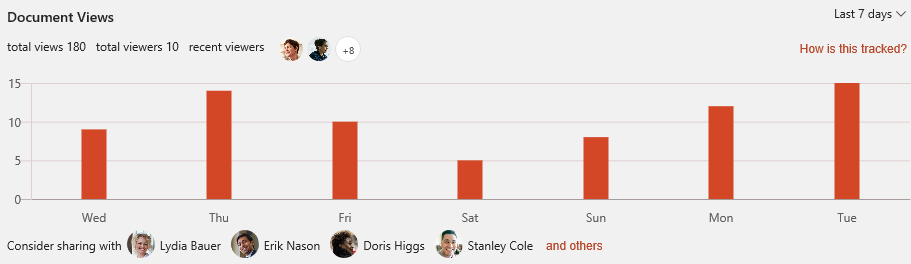

Knowing what you’re paying

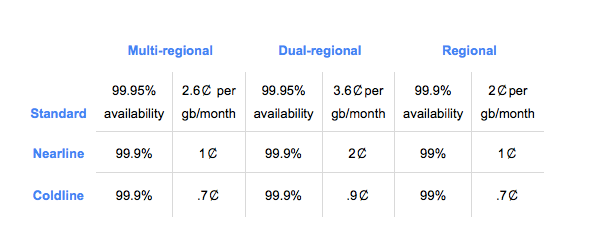

Companies don’t always require instant access to data, and Google, like other cloud vendors, offers a variety of storage options that make it cheaper to store and retrieve archived data. As of today, Google is offering a clear way to determine costs based on the type of storage a customer chooses. While it might not seem revolutionary to let customers know what they are paying, Dominic Preuss, Google’s director of product management, says it hasn’t always been a simple matter to calculate these kinds of costs in the cloud. Google decided to simplify it by clearly outlining the costs for medium-term (Nearline) and long-term (Coldline) storage across multiple regions.

As Google describes it, “With multi-regional Nearline and Coldline storage, you can access your data with millisecond latency, it’s distributed redundantly across a multi-region (U.S., EU or Asia), and you pay archival prices. This is helpful when you have data that won’t be accessed very often, but still needs to be protected with geographically dispersed copies, like media archives or regulated content. It also simplifies management.”

Under the new plan, you can select the type of storage you need and the kind of regional coverage you want, and see exactly what you are paying.

Google Cloud storage pricing options. Chart: Google

Each of these new storage services has been designed to provide additional options for Google Cloud customers, giving them more transparency around pricing, along with more flexibility and control over storage types, regions and the way they deal with redundancy across data stores.