The iPhone XS proves one thing definitively: that the iPhone X was probably one of the most ambitious product bets of all time.

When Apple told me in 2017 that they put aside plans for the iterative upgrade that they were going to ship and went all in on the iPhone X because they thought they could jump ahead a year, they were not blustering. That the iPhone XS feels, at least on the surface, like one of Apple’s most “S” models ever is a testament to how aggressive the iPhone X timeline was.

I think there will be plenty of people who will see this as a weakness of the iPhone XS, and I can understand their point of view. There are about a half-dozen definitive improvements in the XS over the iPhone X, but none of them has quite the buzzword-worthy effectiveness of a marquee upgrade like 64-bit, 3D Touch or wireless charging — all benefits delivered in previous “S” years.

That weakness, however, is only really present if you view it through the eyes of the year-over-year upgrader. As an upgrade over an iPhone X, I’d say you’re going to have to love what they’ve done with the camera to want to make the jump. As a move from any other device, it’s a huge win and you’re going head-first into sculpted OLED screens, face recognition and super durable gesture-first interfaces and a bunch of other genre-defining moves that Apple made in 2017, thinking about 2030, while you were sitting back there in 2016.

Since I do not have an iPhone XR, I can’t really make a call for you on that comparison, but from what I saw at the event and from what I know about the tech in the iPhone XS and XS Max from using them over the past week, I have some basic theories about how it will stack up.

For those with interest in the edge of the envelope, however, there is a lot to absorb in these two new phones, separated only by size. Once you begin to unpack the technological advancements behind each of the upgrades in the XS, you begin to understand the real competitive edge and competence of Apple’s silicon team, and how well they listen to what the software side needs now and in the future.

Whether that makes any difference for you day to day is another question, one that, as I mentioned above, really lands on how much you like the camera.

But first, let’s walk through some other interesting new stuff.

Notes on durability

As is always true with my testing methodology, I treat this as anyone would who got a new iPhone and loaded an iCloud backup onto it. Plenty of other sites will do clean room testing if you like comparison porn, but I really don’t think that does most folks much good. By and large most people aren’t making choices between ecosystems based on one spec or another. Instead, I try to take them along on prototypical daily carries, whether to work for TechCrunch, on vacation or doing family stuff. A foot injury precluded any theme parks this year (plus, I don’t like to be predictable) so I did some office work, road travel in the center of California and some family outings to the park and zoo. A mix of use cases that involves CarPlay, navigation, photos and general use in a suburban environment.

In terms of testing locale, Fresno may not be the most metropolitan city, but it’s got some interesting conditions that set it apart from the cities where most of the iPhones are going to end up being tested. Network conditions are pretty adverse in a lot of places, for one. There’s a lot of farmland and undeveloped acreage and not all of it is covered well by wireless carriers. Then there’s the heat. Most of the year it’s above 90 degrees Fahrenheit and a good chunk of that is spent above 100. That means that batteries take an absolute beating here and often perform worse than other, more temperate, places like San Francisco. I think that’s true of a lot of places where iPhones get used, but not so much the places where they get reviewed.

That said, battery life has been hard to judge. In my rundown tests, the iPhone XS Max clearly went beast mode, outlasting my iPhone X and iPhone XS. Between those two, though, it was tougher to tell. I try to wait until the end of the period I have to test the phones to do battery stuff so that background indexing doesn’t affect the numbers. In my ‘real world’ testing in the 90+ degree heat around here, the iPhone XS did beat my iPhone X by a few percentage points, which is what Apple claims, but my X is also a year old. I never once failed to get through a pretty intense day of testing with the XS, though.

In terms of storage, I’m tapping at the door of 256GB, so the addition of a 512GB option is really nice. As always, the easiest way to determine what size you should buy is to check your existing free space. If you’re using around 50% of what your phone currently has, buy the same size. If you’re using more, consider upgrading, because these phones are only getting faster at taking better pictures and video and that will eat up more space.
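That rule of thumb is simple enough to write down. Here is a minimal Python sketch of it, assuming the iPhone XS storage tiers of 64GB, 256GB and 512GB and treating “around 50%” as a hard 0.5 threshold. The function name and the threshold parameter are illustrative only, not anything Apple publishes.

```python
# Hypothetical helper: the storage tiers and the 0.5 threshold are assumptions
# that encode the "around 50%" rule of thumb, not an official sizing guide.
XS_TIERS_GB = (64, 256, 512)


def suggest_capacity_gb(current_gb, used_gb, tiers=XS_TIERS_GB, threshold=0.5):
    """Suggest a new-phone capacity from how full your current phone is."""
    if current_gb <= 0 or used_gb < 0:
        raise ValueError("capacity must be positive and usage non-negative")
    usage = used_gb / current_gb
    if usage <= threshold:
        # "Buy the same size": smallest tier at least as big as what you have now.
        return next((t for t in tiers if t >= current_gb), tiers[-1])
    # Using more than about half: step up to the next tier above your current one,
    # or stay at the largest tier if you are already there.
    return next((t for t in tiers if t > current_gb), tiers[-1])


print(suggest_capacity_gb(256, 240))  # nearly full 256GB phone
```

A 256GB phone with 240GB used comes out at 512GB, while a 64GB phone that is less than half full stays at 64GB.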

The review units I was given both had the new gold finish. As I mentioned on the day, this is a much deeper, brassier gold than the Apple Watch Edition. It’s less ‘pawn shop gold’ and more ‘this is very expensive’ gold. I like it a lot, though it is hard to photograph accurately — if you’re skeptical, try to see it in person. It has a touch of pink added in, especially as you look at the back glass along with the metal bands around the edges. The back glass has a pearlescent look now as well, and we were told that this is a new formulation that Apple created specifically with Corning. Apple says that this is the most durable glass ever in a smartphone.

My current iPhone has held up to multiple falls over 3 feet over the past year, one of which resulted in a broken screen and replacement under warranty. Doubtless multiple YouTubers will be hitting this thing with hammers and dropping it from buildings in beautiful Phantom Flex slo-mo soon enough. I didn’t test it. One thing I am interested in seeing develop, however, is how the glass holds up to fine abrasions and scratches over time.

My iPhone X is riddled with scratches both front and back, something that seems to come down to a glass formulation that trades scratch resistance for impact resistance: less likely to break on impact, but more prone to abrasion. I’m a dedicated no-caser, which is why my phone looks like it does, but there’s no way for me to tell how the iPhone XS and XS Max will hold up without giving them more time on the clock. So I’ll return to this in a few weeks.

Both the gold and space grey iPhones XS have been subjected to a coating process called physical vapor deposition, or PVD. Basically, metal particles get vaporized and bonded to the surface to coat and color the band. PVD is a process, not a material, so I’m not sure what they’re actually coating these with, but one suggestion has been titanium nitride. I don’t mind the weathering that has happened on my iPhone X band, but I think it would look a lot worse on the gold, so I’m hoping that this process (which is known to be incredibly durable and is used in machine tooling) will improve the durability of the band. That said, I know most people are not no-casers like me, so it’s likely a moot point.

Now let’s get to the nut of it: the camera.

Bokeh let’s do it

I’m (still) not going to be comparing the iPhone XS to an interchangeable lens camera, because portrait mode is not a replacement for one; it’s about pulling one out less often. That said, this is the closest it’s ever been.

One of the major hurdles that smartphone cameras have had to overcome in their comparisons to cameras with beautiful glass attached is their inherently deep depth of field. Without getting too into the weeds, because their lenses and sensors are so small, smartphone cameras produce images in which nearly everything is sharp. That doesn’t feel like a portrait or a well-composed shot from a larger camera, because there’s no background blur. That blur was added a couple of years ago with Apple’s portrait mode and has been duplicated since by every manufacturer that matters, to varying levels of success or failure.

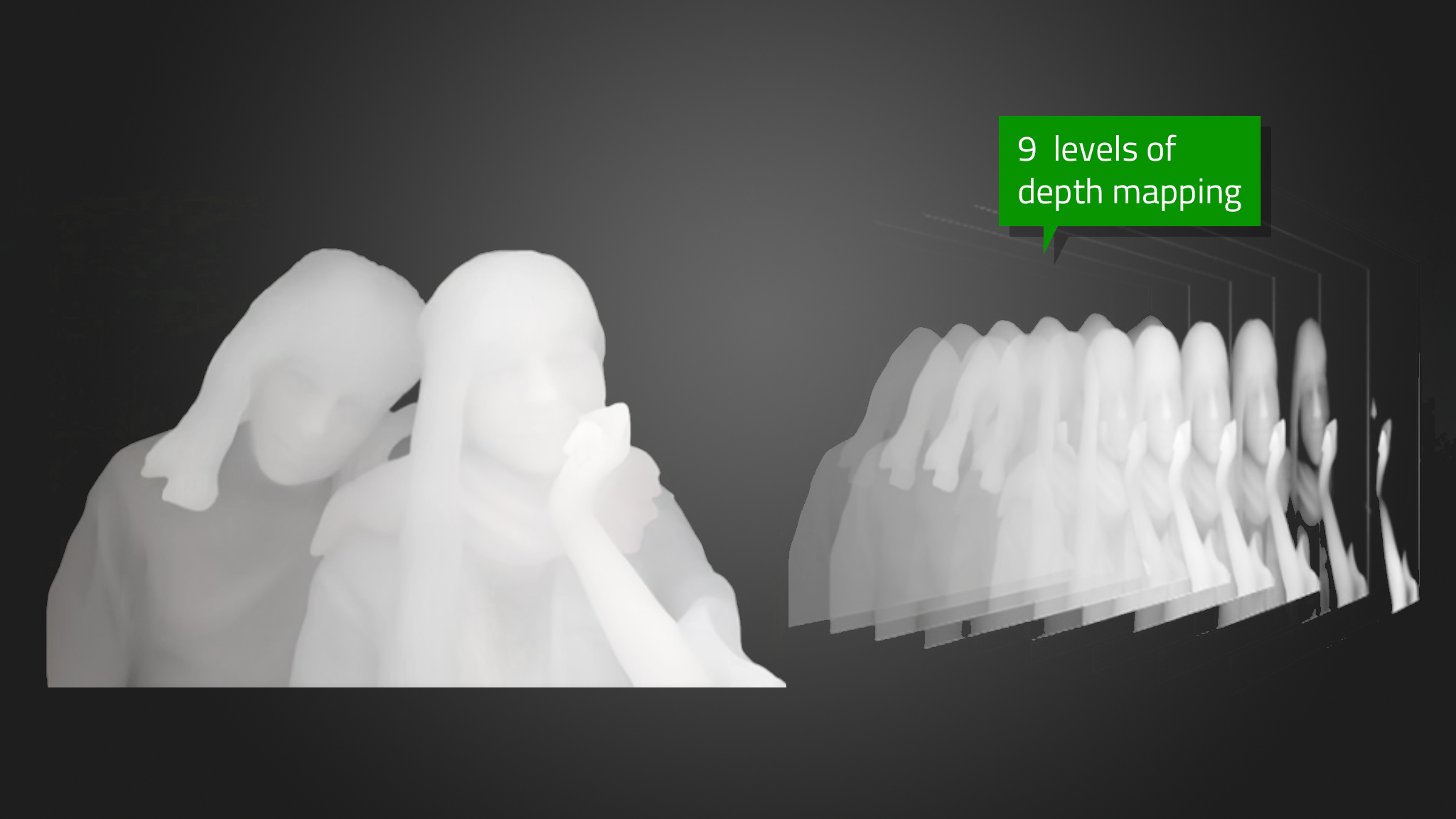

By and large, most manufacturers do it in software. They figure out what the subject probably is, use image recognition to find the eyes/nose/mouth triangle, build a quick matte and blur everything else. Apple does more by adding either the parallax of two lenses or the IR projector of the TrueDepth array that enables Face ID to gather a 9-layer depth map.
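To make the software-only approach concrete, here is a minimal sketch in plain Python. It assumes the hard part, finding the subject and building a matte (1.0 for subject, 0.0 for background), has already been done, and it uses a simple flat disc blur for the background. The function names are hypothetical; this illustrates the general matte-and-blur idea, not any manufacturer’s actual pipeline.

```python
# Hypothetical sketch of "build a matte, blur everything else" on a grayscale
# image stored as a list of rows of floats.


def disc_offsets(radius):
    """Pixel offsets inside a circle of the given radius (a flat "disc" kernel)."""
    return [
        (dy, dx)
        for dy in range(-radius, radius + 1)
        for dx in range(-radius, radius + 1)
        if dx * dx + dy * dy <= radius * radius
    ]


def disc_blur(image, radius):
    """Average each pixel with its neighbours inside the disc, skipping off-image samples."""
    h, w = len(image), len(image[0])
    offsets = disc_offsets(radius)
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy, dx in offsets:
                yy, xx = y + dy, x + dx
                if 0 <= yy < h and 0 <= xx < w:
                    total += image[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def fake_portrait(image, matte, radius=2):
    """Keep the subject (matte 1.0) sharp and swap the background (matte 0.0) for blur.

    Fractional matte values along edges blend the two, which is where hair and
    other fine detail either survive or turn into halos.
    """
    blurred = disc_blur(image, radius)
    return [
        [m * sharp + (1.0 - m) * soft for sharp, soft, m in zip(s_row, b_row, m_row)]
        for s_row, b_row, m_row in zip(image, blurred, matte)
    ]
```

Note that every light source in the background gets the same round, uniform smear here regardless of its shape, which is exactly the kind of templated blur a modeled bokeh is meant to improve on.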

As a note, the iPhone XR works differently, and with fewer tools, to enable portrait mode. Because it only has one lens, it uses focus pixels and segmentation masking to ‘fake’ the parallax of two lenses.

With the iPhone XS, Apple is continuing to push ahead with the complexity of its modeling for the portrait mode. The relatively straightforward disc blur of the past is being replaced by a true bokeh effect.

Background blur in an image is related directly to lens compression, subject-to-camera distance and aperture. Bokeh is the character of that blur. It’s more than just ‘how blurry’, it’s the shapes produced from light sources, the way they change throughout the frame from center to edges, how they diffuse color and how they interact with the sharp portions of the image.
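The relationship between blur, distance and aperture comes straight out of thin-lens optics. The sketch below computes the textbook circle-of-confusion diameter for an out-of-focus background point. It is a generic optics formula, not Apple’s bokeh model, and the parameter names are my own.

```python
def blur_disc_mm(focal_mm, f_number, focus_mm, background_mm):
    """Diameter, on the sensor in mm, of the blur disc made by a background point.

    Thin-lens approximation: c = (f / N) * |S2 - S1| / S2 * f / (S1 - f)
      focal_mm      (f)  lens focal length
      f_number      (N)  aperture setting, e.g. 1.4 for f/1.4
      focus_mm      (S1) distance to the in-focus subject
      background_mm (S2) distance to the background point
    """
    if focus_mm <= focal_mm:
        raise ValueError("the subject must be farther away than the focal length")
    aperture_mm = focal_mm / f_number  # diameter of the entrance pupil
    return (
        aperture_mm
        * abs(background_mm - focus_mm) / background_mm
        * focal_mm / (focus_mm - focal_mm)
    )


# A 50mm lens focused at 2m, with the background at 4m, at f/2.
print(blur_disc_mm(50, 2, 2000, 4000))
```

Because focal length appears twice, shrinking the lens and sensor together shrinks the blur faster than it shrinks the frame, which is why a tiny phone camera renders nearly everything in focus and has to fake the rest. Halving the f-number doubles the blur disc, the same relationship an f-stop style slider presumably imitates.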

Bokeh is to blur what seasoning is to a good meal. Unless you’re the chef, you probably don’t care what they did; you just care that it tastes great.

Well, Apple chef-ed it the hell up with this. Unwilling to settle for a templatized bokeh that felt good and leave it at that, the camera team went the extra mile and created an algorithmic model that contains virtual ‘characteristics’ of the iPhone XS’s lens. Just as a photographer might pick one lens or another for a particular effect, the camera team built out the bokeh model after testing a multitude of lenses from all of the classic camera systems.

I keep saying model because it’s important to emphasize that this is a living construct. The blur you get will look different from image to image, at different distances and in different lighting conditions, but it will stay true to the nature of the virtual lens. Apple’s bokeh has a medium-sized penumbra, spreading out light sources but not blowing them out. It maintains color nicely, making sure that the quality of light isn’t obscured like it is with so many other portrait applications in other phones that just pick a spot and create a circle of standard Gaussian or disc blur.

Check out these two images, for instance. Note that when the light is circular, it retains its shape, as does the rectangular light. It is softened and blurred, as it would be when diffusing through the widened aperture of a regular lens. The same goes for other shapes in reflected light scenarios.

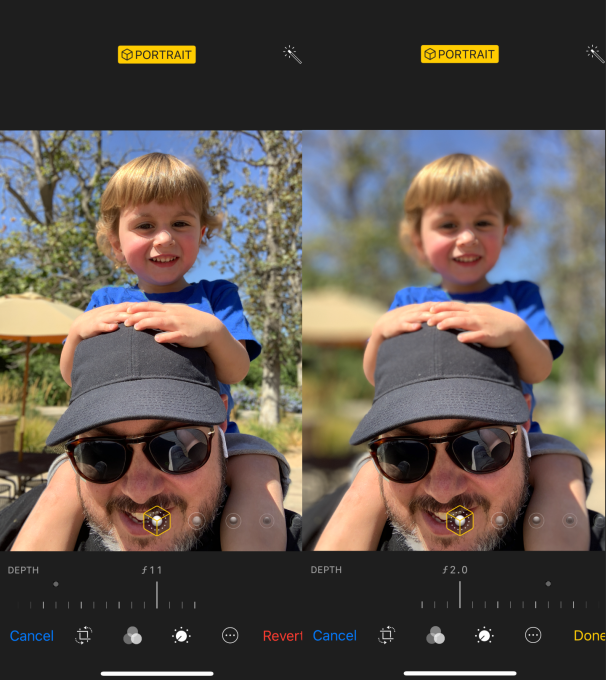

Now here’s the same shot from an iPhone X; note the indiscriminate blur of the light. This modeling effort is why I’m glad that the adjustment slider proudly carries f-stop, or aperture, measurements. Each setting shows what the image would look like at a given aperture, rather than a point on a 0-100 scale. It’s very well done and, because it’s modeled, it can be improved over time. My hope is that eventually, developers will be able to plug in their own numbers to “add lenses” to a user’s kit.

And an adjustable depth of focus isn’t just good for blurring, it’s also good for un-blurring. This portrait mode selfie placed my son in the blurry zone because it focused on my face. Sure, I could turn the portrait mode off on an iPhone X and get everything sharp, but now I can choose to “add” him to the in-focus area while still leaving the background blurry. Super cool feature I think is going to get a lot of use.

It’s also great for removing unwanted people or things from the background by cranking up the blur.

And yes, it works on non-humans.

If you end up with an iPhone XS, I’d play with the feature a bunch to get used to what a super wide aperture lens feels like. When it’s open all the way to f/1.4 (not the actual widest aperture of the lens, btw; this is the virtual model we’re controlling), pretty much only the eyes should be in focus. Ears, shoulders, maybe even the nose could be out of the focus area. It takes some getting used to but can produce dramatic results.

Developers do have access to one new feature, though: the segmentation mask. This is a more precise mask that aids in edge detailing, improving hair and fine line detail around the edges of a portrait subject. In my testing it has led to better handling of these transition areas and less clumsiness. It’s still not perfect, but it’s better. And third-party apps like Halide are already utilizing it. Halide’s co-creator, Sebastiaan de With, says they’re already seeing improvements in Halide with the segmentation map.

“Segmentation is the ability to classify sets of pixels into different categories,” says de With. “This is different than a ‘Hot dog, not a hot dog’ problem, which just tells you whether a hot dog exists anywhere in the image. With segmentation, the goal is drawing an outline over just the hot dog. It’s an important topic with self-driving cars, because it isn’t enough to tell you there’s a person somewhere in the image. It needs to know that person is directly in front of you. On devices that support it, we use PEM [Portrait Effects Matte] as the authority for what should stay in focus. We still use the classic method on old devices (anything earlier than iPhone 8), but the quality difference is huge.”

The above is an example shot in Halide that shows the image, the depth map and the segmentation map.
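De With’s distinction between classification and segmentation can be shown with a toy example. Assuming a model has already assigned a label to every pixel (the labels below are made up), a classifier only answers whether a class appears anywhere, while segmentation keeps track of where. This illustrates the concept only; it is not Halide’s code or Apple’s Portrait Effects Matte API.

```python
# Toy per-pixel labels, as a hypothetical segmentation model might output them.
labels = [
    ["bg", "bg", "hot_dog"],
    ["bg", "hot_dog", "hot_dog"],
]


def contains(labels, target):
    """Classification-style question: does the target appear anywhere in the image?"""
    return any(target in row for row in labels)


def segmentation_mask(labels, target):
    """Segmentation-style answer: which pixels belong to the target (1) and which don't (0)."""
    return [[1 if label == target else 0 for label in row] for row in labels]


print(contains(labels, "hot_dog"))
print(segmentation_mask(labels, "hot_dog"))
```

In a portrait pipeline, a mask like this is what decides which pixels stay sharp, which is why a more precise one pays off around hair and other fine edges.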

My testing of portrait mode on the iPhone XS says that it is massively improved, but that there are still some very evident quirks that will lead to weirdness in some shots like wrong things made blurry and halos of light appearing around subjects. It’s also not quite aggressive enough on foreground objects — those should blur too but only sometimes do. But the quirks are overshadowed by the super cool addition of the adjustable background blur.

Live preview of the depth control in the camera view is not in iOS 12 at the launch of the iPhone XS, but it will be coming in a future version of iOS 12 this fall.

I also shoot a huge number of photos with the telephoto lens. It’s closer to what you’d consider a standard lens on a camera. The normal lens is really wide, and once you acclimate to the telephoto you’re left wondering why you have a bunch of pictures of people in the middle of a ton of foreground and sky. If you haven’t already, I’d say try defaulting to 2x for a couple of weeks and see how you like your photos. For those tight conditions or really broad landscapes, you can always drop back to the wide. Because of this, any iPhone that doesn’t have a telephoto is a basic non-starter for me, which is going to be one of the limiters on people moving to iPhone XR from iPhone X, I believe. Even iPhone 8 Plus users who rely on the telephoto will likely miss it if they don’t go to the XS.

But, man, Smart HDR is where it’s at

I’m going to say something now that is surely going to cause some Apple followers to snort, but it’s true. Here it is:

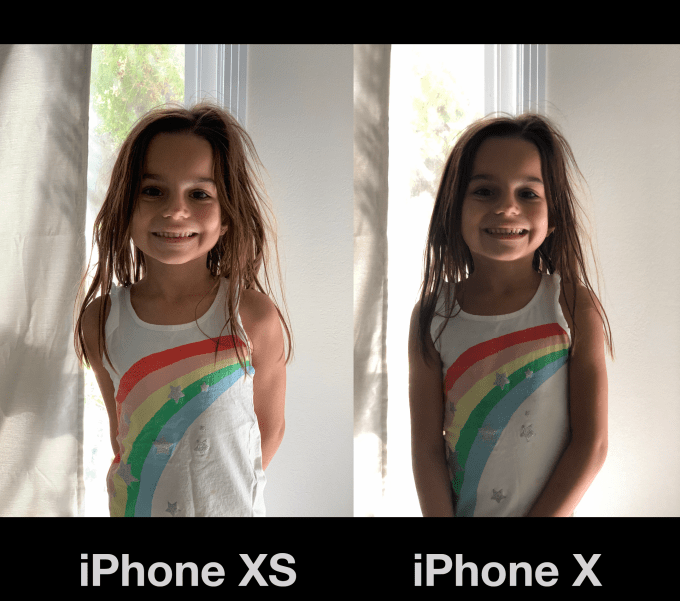

For a company so prone to hyperbole and Maximum Force Enthusiasm about its products, I think that Apple has dramatically undersold how much improved photos are from the iPhone X to the iPhone XS. It’s extreme, and it has to do with a technique Apple calls Smart HDR.

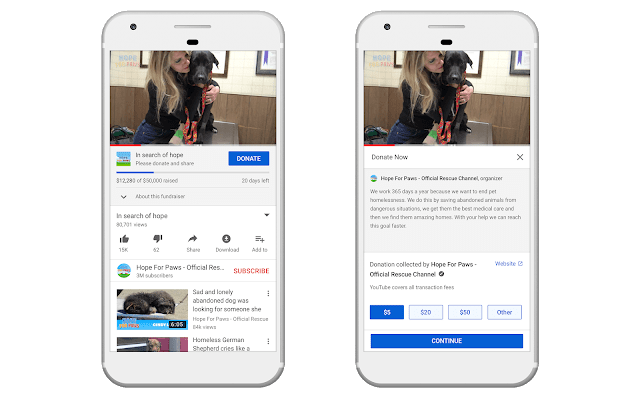

Smart HDR on the iPhone XS encompasses a bundle of techniques and technology, including highlight recovery, rapid-firing the sensor, an OLED screen with much improved dynamic range and the Neural Engine/image signal processor combo. It’s now running faster sensors and offloading some of the work to the CPU, which lets it fire off nearly two images for every one it used to, so that motion doesn’t create ghosting in HDR images. It then picks the sharpest image, merges the other frames into it in a smarter way and applies tone mapping that produces more even exposure and color in the roughest of lighting conditions.

iPhone XS shot: better range of tones, skin tone and black point

iPhone X shot: not a bad image at all, but with blocked-up shadow detail, flatter skin tone and a blue shift
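Apple hasn’t published how Smart HDR works, but the steps described here (pick the sharpest frame, merge the others into it, tone map the result) can be sketched in plain Python. This is a simplified stand-in built on assumptions: frames are same-exposure, already-aligned grayscale grids; sharpness is a gradient-energy score; ghost rejection is a per-pixel threshold; and the tone curve is the classic Reinhard operator. Real HDR pipelines also blend frames shot at different exposures, which this sketch leaves out.

```python
# Hypothetical, simplified burst merge; none of these names come from Apple.


def sharpness(frame):
    """Gradient energy: sum of squared differences between neighbouring pixels.
    Motion blur or missed focus smooths edges, so softer frames score lower."""
    h, w = len(frame), len(frame[0])
    score = 0.0
    for y in range(h):
        for x in range(w):
            if x + 1 < w:
                score += (frame[y][x + 1] - frame[y][x]) ** 2
            if y + 1 < h:
                score += (frame[y + 1][x] - frame[y][x]) ** 2
    return score


def merge_burst(frames, ghost_threshold=0.1):
    """Use the sharpest frame as the reference and average in pixels that agree with it.

    Samples differing from the reference by more than ghost_threshold are assumed
    to be something that moved between frames and are skipped to avoid ghosting.
    """
    ref = max(frames, key=sharpness)
    h, w = len(ref), len(ref[0])
    merged = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            r = ref[y][x]
            agreeing = [f[y][x] for f in frames if abs(f[y][x] - r) <= ghost_threshold]
            # Never empty: the reference pixel always agrees with itself.
            merged[y][x] = sum(agreeing) / len(agreeing)
    return merged


def tone_map(linear):
    """Reinhard global operator v / (1 + v): rolls off highlights, keeps shadows near-linear."""
    return [[v / (1.0 + v) for v in row] for row in linear]
```

Averaging only the samples that agree with the sharpest frame reduces noise where the scene is still, while anything that moved simply falls back to the reference pixel instead of smearing into a ghost.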

Nearly every image you shoot on an iPhone XS or iPhone XS Max will have HDR applied to it. It does this so often that Apple has stopped labeling most images as HDR at all. There’s still a toggle to turn Smart HDR off if you wish, but by default it will trigger any time it feels it’s needed.

And that includes more types of shots that could not benefit from HDR before. Panoramic shots, burst shots and low-light photos are now processed, for instance, as is every frame of a Live Photo.

The results for me have been massively improved quick snaps with no thought given to exposure or adjustments due to poor lighting. Your camera roll as a whole will just suddenly start looking like you’re a better picture taker, with no intervention from you. All of this is capped off by the fact that the OLED screens in the iPhone XS and XS Max have a significantly improved ability to display a range of color and brightness. So images will just plain look better on the wider gamut screen, which can display more of the P3 color space.

Under the hood

As far as Face ID goes, there has been no perceivable difference for me in speed or number of positives, but my facial model has been training on my iPhone X for a year, and it’s starting fresh on iPhone XS. I’ve also always been lucky that Face ID has just worked for me most of the time. The gist of the improvements here is faster acquisition and faster confirmation of the map to pattern match. There are also supposed to be improvements in off-angle recognition of your face, say when lying down or when your phone is flat on a desk. I tried a lot of different positions here and could never definitively say that iPhone XS was better in this regard, though, as I said above, it very likely takes training time to get it near the confidence levels that my iPhone X has stored away.

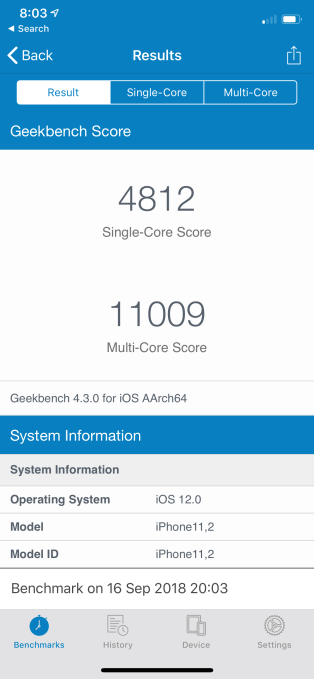

In terms of CPU performance, the world’s first at-scale 7nm architecture has paid dividends. You can see from the iPhone XS benchmarks that it compares favorably to fast laptops and easily exceeds iPhone X performance.

The Neural Engine and the better A12 chip have meant better frame rates in intense games and AR, faster image searches and some small improvement in app launches. One easy way to demonstrate this is the video from the iScape app, captured on an iPhone X and an iPhone XS. You can see how jerky and frame-rate-challenged the iPhone X is in a similar AR scenario. There is so much more headroom for AR experiences that I know developers are going to be salivating over what they can do here.

The stereo sound is impressive, with surprisingly decent separation for a phone, and it’s definitely louder. The tradeoff is that you get asymmetrical speaker grilles, so if that kind of thing annoys you, you’re welcome.

Upgrade or no

Every other year for the iPhone I see and hear the same things — that the middle years are unimpressive and not worthy of upgrading. And I get it, money matters, phones are our primary computer and we want the best bang for our buck. This year, as I mentioned at the outset, the iPhone X has created its own little pocket of uncertainty by still feeling a bit ahead of its time.

I don’t kid myself into thinking that we’re going to have an honest discussion about whether you want to upgrade from the iPhone X to iPhone XS or not. You’re either going to do it because you want to or you’re not going to do it because you don’t feel it’s a big enough improvement.

And I think Apple is completely fine with that because iPhone XS really isn’t targeted at iPhone X users at all, it’s targeted at the millions of people who are not on a gesture-first device that has Face ID. I’ve never been one to recommend someone upgrade every year anyway. Every two years is more than fine for most folks — unless you want the best camera, then do it.

And given Apple’s fairly bold talk about making sure that iPhones last as long as they can, I think that it is well into an era where it plans on having a massive installed user base that rents iPhones from it on a monthly, yearly or biennial basis, because that user base will need for-pay services that Apple can provide. And it seems to be moving in that direction already, with phones as old as the five-year-old iPhone 5s still getting iOS updates.

With the iPhone XS, we might just be seeing the true beginning of the iPhone-as-a-service era.
