Matt Weinberg

Contributor

Matt Weinberg is a former White House appointee with the U.S. Small Business Administration, where he served as a Senior Advisor in the Office of Investment and Innovation.


New fifth-generation (“5G”) network technology will give the United States a superior wireless platform with transformative economic potential. However, 5G’s success depends on modernizing the outdated policy frameworks that govern infrastructure buildout and on striking the right balance of public-private partnerships to encourage investment and deployment.

Most people have heard by now of the coming 5G revolution. Compared with 4G, this next-generation technology will deliver near-instantaneous connection speeds, significantly lower latency (meaning near-zero buffer times) and greater network capacity, allowing billions of devices and applications to come online and communicate simultaneously and seamlessly.

While 5G is often discussed in the future tense, the reality is that it’s already here. Its capabilities were on display earlier this year at the Olympics in Pyeongchang, South Korea, where Samsung and Intel showcased a 5G-enabled virtual reality (VR) broadcasting experience for event-goers. In addition, multiple U.S. carriers, including Verizon, AT&T and Sprint, have announced commercial deployments in select markets by the end of 2018, while chipmaker Qualcomm last month unveiled a 5G millimeter-wave module that gives smartphones 5G compatibility.

Qualcomm’s 5G technology on display at Mobile World Congress 2018, held in Barcelona, Spain, from February 26 to March 1. (Photo by Ramon Costa/SOPA Images/LightRocket via Getty Images)

While this commitment from commercial 5G developers is promising, the long-term success of 5G ultimately depends on addressing two key issues.

The first step is ensuring that the right policies are in place at the federal, state and municipal levels in the U.S. to allow the buildout of the needed infrastructure, namely “small cells.” This equipment is designed to fit on streetlights, lampposts and buildings. You may not even notice small cells as you walk by, but they are critical to adding capacity to the network and transmitting wireless traffic quickly and reliably.

In many communities across the U.S., 20th-century infrastructure policies are slowing efforts to bring next-generation networks and technologies online. Issues such as the cost of each small cell attachment, permitting in public rights-of-way and deadlines for application reviews are hardly exciting topics of conversation. Yet, according to recent research from Accenture and the 5G Americas organization, they pose real threats to the timely rollout of 5G.

Policymakers can mitigate these setbacks by taking inventory of their own policy frameworks and, where needed, streamlining and modernizing processes. For instance, small cell permit applications can currently take 18 to 24 months or more to clear the approval process because they require buy-in from numerous local commissions, city councils and other bodies. That’s an enormous amount of time for a community to wait, and it risks falling behind on next-generation access. As a result, policymakers are beginning to act.

Thirteen states, including Florida, Ohio and Texas, have already passed bills that ease some of the local infrastructure hurdles, such as delays and pricing, that accompany expanded broadband network deployment. Additionally, this year the Federal Communications Commission (FCC) has advanced multiple orders aimed at removing current 5G roadblocks, including making more of the needed high-, mid- and low-band spectrum available for commercial use.

The second step is identifying areas in which public and private entities can partner to direct capital and resources toward 5G initiatives. Such collaborations were first popularized in Europe, where combined public-private planning continues to drive significant advances in infrastructure, for example through the 5G Infrastructure Public Private Partnership (5G PPP) between the European Commission and the European ICT industry.

The U.S. is stepping up its own public-private planning. In 2015, the Obama Administration’s Department of Transportation launched its successful “Smart City Challenge,” encouraging U.S. cities to plan and fund advanced connectivity. More recently, the National Science Foundation (NSF) awarded New York City a $22.5 million grant through its Platforms for Advanced Wireless Research (PAWR) initiative to create and deploy the first in a series of wireless research hubs focused on 5G-related breakthroughs, including high-bandwidth and low-latency data transmission, millimeter-wave spectrum, next-generation mobile network architecture and edge cloud computing integration.

While these efforts should be applauded, it’s important to remember that they are merely initial steps. A recent study by CTIA, a leading trade association for the wireless industry, found that the United States remains behind both China and South Korea in 5G development. If other countries beat the U.S. to the punch, which some believe is already happening, companies and sectors that require ubiquitous, fast and seamless connectivity, such as autonomous transportation, could migrate, develop and evolve abroad, with lasting negative effects on U.S. innovation.

The potential economic gains are also significant. A 2017 Accenture report predicts $275 billion in additional private-sector infrastructure investment, resulting in up to 3 million new jobs and a $500 billion increase in gross domestic product (GDP). And that’s on the infrastructure side alone. On a global scale, we could see as much as $12 trillion in additional economic activity, according to figures discussed at the World Economic Forum Annual Meeting in January.

President John F. Kennedy once said, “Conformity is the jailer of freedom and the enemy of growth.” When it comes to America’s technological evolution, this quote rings especially true. Our nation has led the digital revolution for decades. Now, with 5G, we have the opportunity to unlock an entirely new level of innovation that will make our communities safer, more inclusive and more prosperous for all.

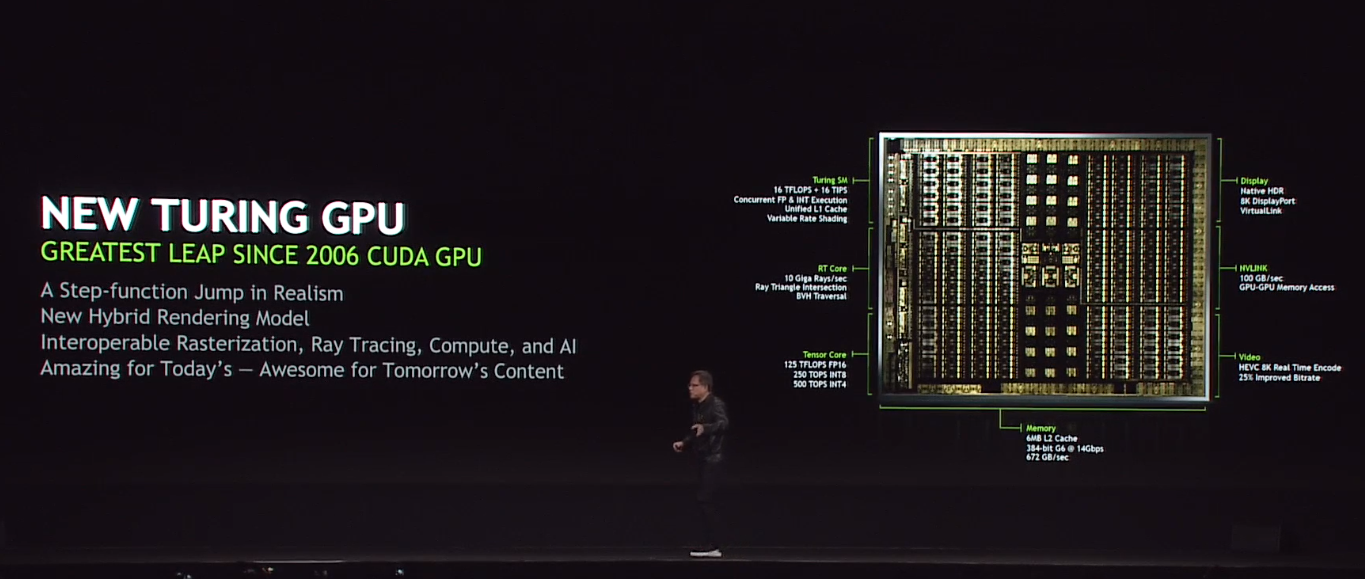

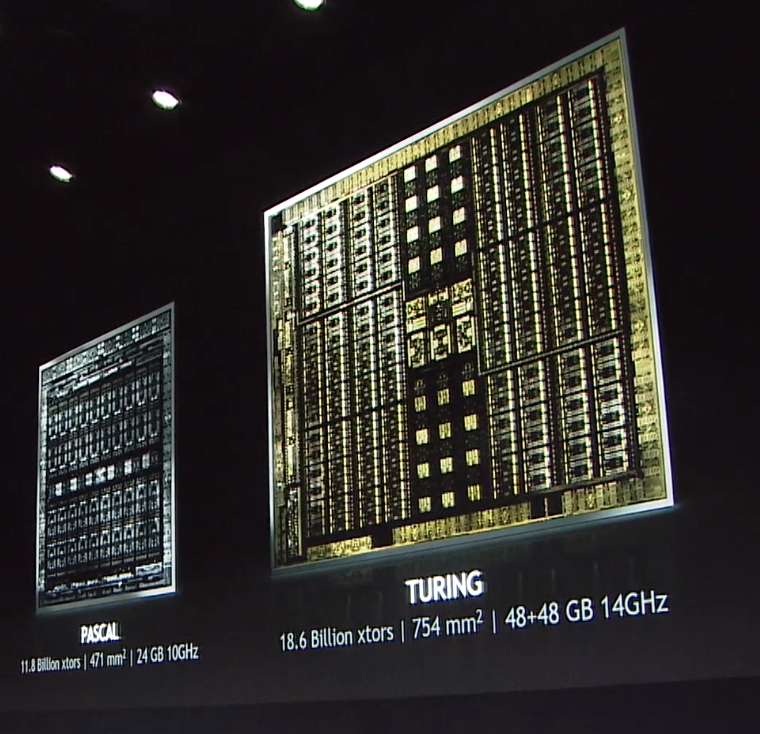
