Platforms like Figma have changed the game in how creatives and other stakeholders on production and product teams conceive of and iterate on two-dimensional designs. Now, a company called Gravity Sketch has taken that concept into 3D, using tools like virtual reality headsets to let designers and others dive into a product’s design and visualize it better as it is being made. The London-based startup is today announcing $33 million in funding to take its own business to the next dimension.

The Series A is coming as Gravity Sketch passes 100,000 users, including product design teams at firms like Adidas, Reebok, Volkswagen and Ford.

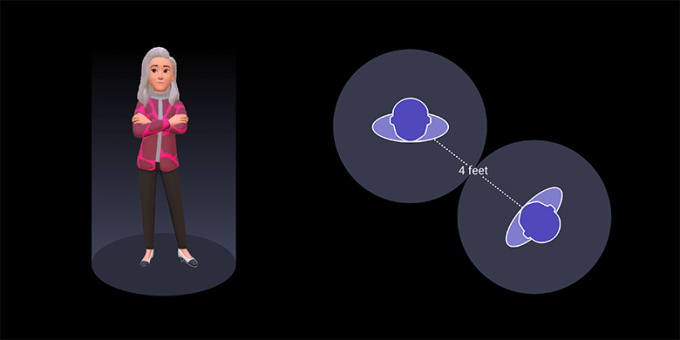

The funding will be used to keep expanding the platform’s functionality, with special attention going to LandingPad, a collaboration feature the company built to let “non-designer” stakeholders see and give feedback on the design process earlier in a product’s development cycle.

The round is being led by Accel, with GV (formerly known as Google Ventures) and previous backers Kindred Capital, Point Nine and Forward Partners (all from its seed round in 2020) also participating, along with unnamed individual investors. The company has now raised over $40 million.

Gravity Sketch was co-founded by Oluwaseyi Sosanya (CEO), Daniela Paredes Fuentes (CXO) and Daniel Thomas (CTO). Sosanya and Paredes Fuentes met while they were both studying for a joint design/engineering degree across the Royal College of Art and Imperial College in London. They went on to work together in industrial design at Jaguar Land Rover. Across those and other experiences, the two found that they kept running into the same problems in their work.

Much early-stage design is still sketched by hand, Sosanya noted, “but machines for tooling run on digital files.” That is just one of the steps where something gets lost or complicated in translation: “From sketches to digital files is a very arduous process,” he said, one that can involve seven or eight versions of the same drawing. Then technical drawings need to be produced, followed by modeling for production, all complicated by the fact that the object is three-dimensional.

“There were so many inefficiencies, that the end result was never that close to the original intent,” he said. And it wasn’t just design teams that were involved, either, but marketing, manufacturing, finance and executive teams as well.

One issue is that we think in 3D, but translating those ideas onto a 2D surface is a skill that has to be learned, and most digital drawing tools are built around that 2D surface. “People sketch to bring ideas into the world, but the problem is that people need to learn to sketch, and that leads to a lot of miscommunication,” Paredes Fuentes added.

Even a designer’s own sketches may not be true to the original idea. “Communications and conversations were happening too late in the process,” she said. The idea, she noted, is to bring in collaboration earlier so that input and potential changes can be caught earlier, too, making the whole design and manufacturing process less expensive overall.

Gravity Sketch’s solution is a platform that taps into innovations in computer vision and augmented and virtual reality to let teams collaborate and work together in 3D from day one.

Gravity Sketch aims to be “agnostic,” Sosanya said, meaning that it can be used from first sketch through to manufacturing; or files can be exported from it into whatever tooling software a company happens to use; or designs might never enter the physical realm at all: more recently, designers have been building NFT objects on Gravity Sketch.

One thing it is not doing is providing stress tests or engineering calculations; instead, the aim is to make the platform as unconstrained as possible as an engine for creativity. Bringing that in too early in the process would be “forcing boundaries,” Sosanya said. “We want to be as unrestricted as a piece of paper, but in the third dimension. We feed in engineering tools but that comes after you’ve proposed a solution.”

Although there are plenty of design software makers in the market today, relatively little has been built for what Paredes Fuentes described as “spatial thinkers.” Adobe, for example, acquired Allegorithmic to bring in 3D expertise, but it has yet to bring out a 3D design engine.

“It’s highly difficult to build a geometry engine from the ground up,” Sosanya said. “A lot haven’t dared to step in because it’s a very complex space because of the 3D aspect. The tech enables a lot of things but taking the approach we have is what has brought us success.” That approach is not just to let people “step into” the design process from the start through a 3D virtual reality environment (it provides apps for iOS, Steam and Oculus Quest and Rift), but also to let them collaborate from computers and smartphones.

While much of the focus is on bringing tools to the commercial world, Gravity Sketch has also found traction in education, with around 170 schools and universities using the platform to complement their own programs. The company said its revenues have grown fourfold over the last year, although it does not disclose actual figures. Some 70% of its customers are in the U.S.

The investment will be used to continue developing Gravity Sketch’s LandingPad collaboration features to better support the non-designer stakeholders who are essential to the design process. This reflects the company’s belief that greater diversity in the design industry, and more voices in the development process, will result in products that perform better on the market. Companies such as Miro and Figma have already disrupted the 2D space, enabling teams to co-create and collaborate quickly and inclusively in online workspaces, and Gravity Sketch is aiming to do the same for the 3D environment with its inclusive features. The funds will also be used to enhance the platform’s creative tools and scale the company’s sales, customer success and onboarding teams.

“In today’s climate, online collaboration tools have emerged as a necessity for businesses that want to stay agile and connect their teams in the most interactive, authentic and productive way possible,” said Harry Nelis, a partner at Accel, in a statement. “Design is no different, and we’ve been blown away by Gravity Sketch’s innovative, forward-looking suite of collaboration design tools that are already revolutionising workflows across numerous industries. Moreover, we expect that 3D design – coupled with the advent of virtual reality – will only grow in importance as brands race to build the emerging metaverse. The early organic traction and tier one brands that Oluwaseyi, Daniela and the Gravity Sketch team have already secured as customers are extremely impressive. We’re excited to partner with them and help them realise their dream of a more efficient, sustainable and democratic design world.”
