There’s a special place in Hell for people who think it’s funny to rape a 7-year-old girl’s avatar in an online virtual world designed for children. Yes, that happened. Roblox, a hugely popular online game for kids, was hacked by an individual who subverted the game’s protection systems in order to have customized animations appear. This allowed two male avatars to gang rape a young girl’s avatar on a playground in one of the Roblox games.

The company has now issued an apology to the victim and its community, and says it has determined how the hacker was able to infiltrate its system so it can prevent future incidents.

The mother of the child, whose avatar was the victim of the in-game sexual assault, was nearby when the incident took place. She says her child showed her what was happening on the screen and she took the device away, fortunately shielding her daughter from seeing most of the activity. The mother then captured screenshots of the event in order to warn others.

She described the incident in a public Facebook post that read, in part:

At first, I couldn’t believe what I was seeing. My sweet and innocent daughter’s avatar was being VIOLENTLY GANG-RAPED ON A PLAYGROUND by two males. A female observer approached them and proceeded to jump on her body at the end of the act. Then the 3 characters ran away, leaving my daughter’s avatar laying on her face in the middle of the playground.

Words cannot describe the shock, disgust, and guilt that I am feeling right now, but I’m trying to put those feelings aside so I can get this warning out to others as soon as possible. Thankfully, I was able to take screenshots of what I was witnessing so people will realize just how horrific this experience was. *screenshots in comments for those who can stomach it* Although I was immediately able to shield my daughter from seeing the entire interaction, I am shuddering to think of what kind of damage this image could have on her psyche, as well as any other child that could potentially be exposed to this.

Roblox has since issued a statement about the attack:

Roblox’s mission is to inspire imagination and it is our responsibility to provide a safe and civil platform for play. As safety is our top priority — we have robust systems in place to protect our platform and users. This includes automated technology to track and monitor all communication between our players as well as a large team of moderators who work around the clock to review all the content uploaded into a game and investigate any inappropriate activity. We provide parental controls to empower parents to create the most appropriate experience for their child, and we provide individual users with protective tools, such as the ability to block another player.

The incident involved one bad actor that was able to subvert our protective systems and exploit one instance of a game running on a single server. We have zero tolerance for this behavior and we took immediate action to identify how this individual created the offending action and put safeguards in place to prevent it from happening again. In addition, the offender was identified and permanently banned from the platform. Our work on safety is never-ending and we are committed to ensuring that one individual does not get in the way of the millions of children who come to Roblox to play, create, and imagine.

The timing of the incident is particularly notable for the kids’ gaming platform, which has more than 60 million monthly active users and is now raising up to $150 million to grow its business. The company has been flying under the radar for years, while quietly amassing a large audience of both players and developers who build its virtual worlds. Roblox recently stated that it expects to pay out its content creators $70 million in 2018, which is double that of last year.

Roblox has a number of built-in controls to guard against bad behavior, including a content filter and a system that has moderators reviewing images, video and audio files before they’re uploaded to Roblox’s site. It also offers parental controls that let parents decide who can chat with their kids, or the ability to turn chat off. And parents can restrict kids under 13 from accessing anything but a curated list of age-appropriate games.

However, Roblox was also in the process of moving some of its older user-generated games to a newer system that’s more secure. The hacked game was one of several that could have been exploited in a similar way.

Since the incident, Roblox had its developers remove all the other potentially vulnerable games and ask their creators to move them over to the newer, more fortified system. Most have done so, and those who have not will not see their games allowed back online until that occurs. The games that are online now are not vulnerable to the exploit the hacker used.

The company responded quickly, taking the game offline, banning the player and reaching out to the mother, who has since agreed to help Roblox get the word out to others about the safeguards parents can use to further protect kids in Roblox.

But the incident raises questions as to whether kids should be playing these sorts of massive multiplayer games at such a young age at all.

Roblox, sadly, is not surprised that someone was interested in a hack like this.

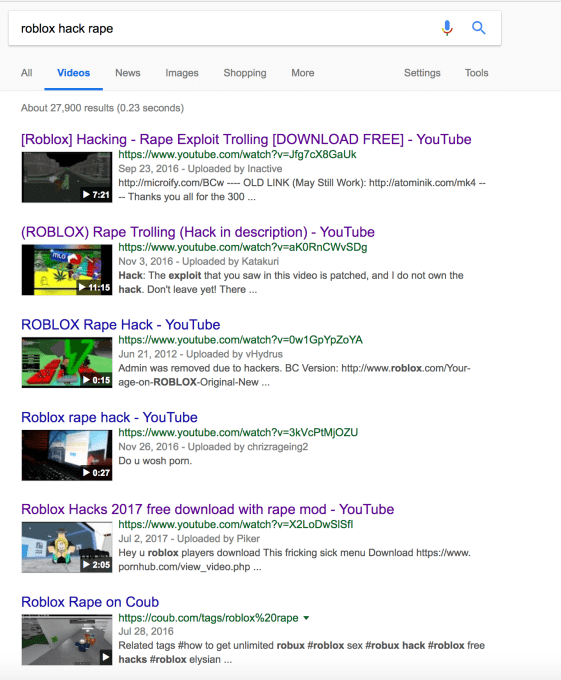

YouTube is filled with videos of Roblox rape hacks and exploits, in fact. The company submits takedown requests to YouTube when videos like this are posted, but YouTube only takes action on a fraction of the requests. (YouTube has its own issues around content moderation.)

It’s long past time for there to be real-world ramifications for in-game assaults that can have lasting psychological consequences on victims, when those victims are children.

Roblox, for its part, is heavily involved in discussions about what can be done, but the issue is complex. COPPA laws prevent Roblox from collecting data on its users, including their personal information, because the law is meant to protect kids’ privacy. But the flip side of this is that Roblox has no way of tracking down hackers like this.

“I think that we’re not the only one pondering the challenges of this. I think every platform company out there is struggling with the same thing,” says Tami Bhaumik, head of marketing and community safety at Roblox.

“We’re members of the Family Online Safety Institute, which is over 30 companies who share best practices around digital citizenship and child safety and all of that,” she continues. “And this is a constant topic of conversation that we all have – in terms of how do we use technology, how do we use A.I. and machine learning? Do we work with the credit card companies to try to verify [users]? How do we get around not violating COPPA regulations?”

“The problem is super complex, and I don’t think anyone involved has solved that yet,” she adds.

One solution could be forcing parents to sign up their kids and add a credit card, which would remain uncharged unless kids broke the rules.

That could dampen user growth to some extent — locking out the under-banked, those hesitant to use their credit cards online and those just generally distrustful of gaming companies and unwanted charges. It would mean kids couldn’t just download the app and play.

But Roblox has the momentum and scale now to lock things down. There’s enough demand for the game that it could create more of a barrier to entry if it chose to, in an effort to better protect users. After all, if players knew they’d be fined (or their parents would be), it would be less attractive to break the rules.

The Book Box FAQ also noted that Amazon will use members’ recent purchase history on its site to make sure the box doesn’t include any books the customer had already purchased.

The Book Box FAQ also noted that Amazon will use members’ recent purchase history on its site to make sure the box doesn’t include any books the customer had already purchased.

However, the app wasn’t heavily marketed by Amazon, and many parents don’t even know it exists, it seems.

However, the app wasn’t heavily marketed by Amazon, and many parents don’t even know it exists, it seems. The company at the time was fresh on the heels of

The company at the time was fresh on the heels of

That’s problematic because it could make minors

That’s problematic because it could make minors

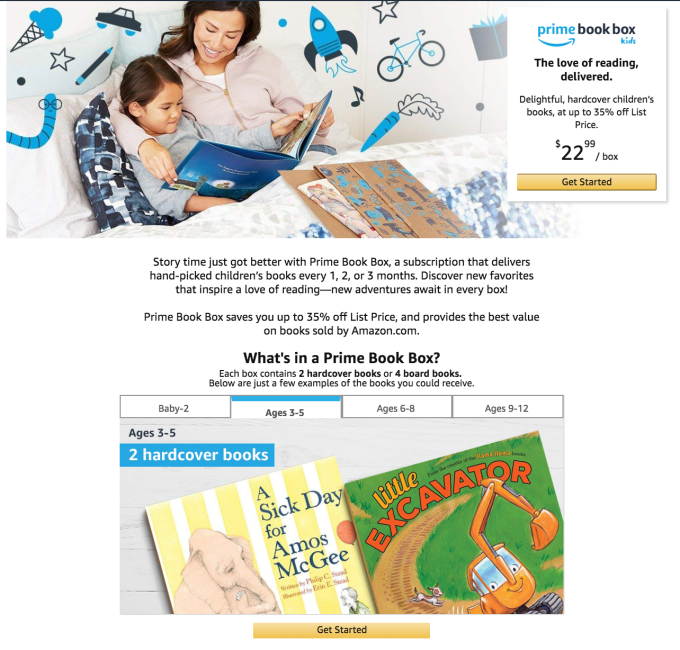

The increased scrutiny brought on by the Cambridge Analytica debacle, Russian election interference, screentime addiction, lack of protections against fake news, and lax policy towards conspiracy theorists and dangerous content has triggered a reckoning for Facebook.

The increased scrutiny brought on by the Cambridge Analytica debacle, Russian election interference, screentime addiction, lack of protections against fake news, and lax policy towards conspiracy theorists and dangerous content has triggered a reckoning for Facebook.