Nvidia launches a new GPU architecture and the Grace CPU Superchip

At its annual GTC conference for AI developers, Nvidia today announced its next-gen Hopper GPU architecture and the Hopper H100 GPU, as well as a new data center chip that combines two high-performance CPUs, which Nvidia calls the “Grace CPU Superchip” (not to be confused with the Grace Hopper Superchip).

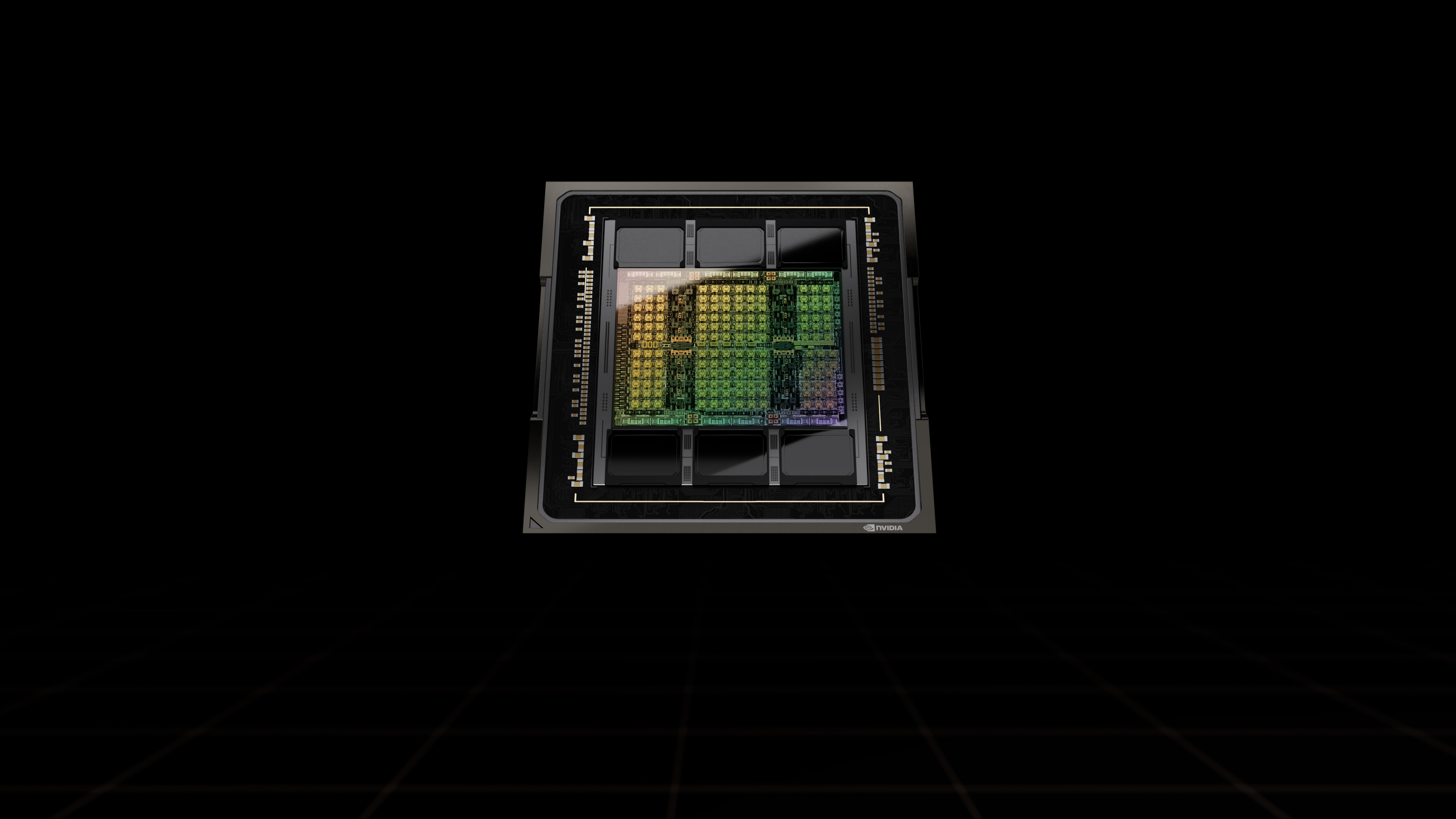

The H100 GPU

With Hopper, Nvidia is launching a number of new and updated technologies, but for AI developers, the most important one may just be the architecture’s focus on transformer models, which have become the machine learning technique de rigueur for many use cases and which power models like GPT-3 and BERT. The new Transformer Engine in the H100 chip promises to speed up model training by up to six times. Because this new architecture also features Nvidia’s new NVLink Switch system for connecting multiple nodes, large server clusters powered by these chips will be able to scale up to support massive networks with less overhead.

“The largest AI models can require months to train on today’s computing platforms,” Nvidia’s Dave Salvator writes in today’s announcement. “That’s too slow for businesses. AI, high performance computing and data analytics are growing in complexity with some models, like large language ones, reaching trillions of parameters. The NVIDIA Hopper architecture is built from the ground up to accelerate these next-generation AI workloads with massive compute power and fast memory to handle growing networks and datasets.”

The new Transformer Engine uses custom Tensor Cores that can mix 8-bit precision and 16-bit half-precision as needed while maintaining accuracy.

“The challenge for models is to intelligently manage the precision to maintain accuracy while gaining the performance of smaller, faster numerical formats,” Salvator explains. “Transformer Engine enables this with custom, NVIDIA-tuned heuristics that dynamically choose between FP8 and FP16 calculations and automatically handle re-casting and scaling between these precisions in each layer.”
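Nvidia has not published the heuristics themselves, but the general idea of picking a precision per layer and rescaling to fit it can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the function name `choose_precision` is hypothetical, the rule is a simple dynamic-range check rather than Nvidia's actual logic, and the constants assume the E4M3 variant of FP8 (largest finite value 448, smallest normal value 2^-6).

```python
# Toy illustration only: NOT Nvidia's Transformer Engine heuristics.
# Assumes the E4M3 flavor of FP8; both constants describe that format.
FP8_E4M3_MAX = 448.0              # largest finite E4M3 value
FP8_E4M3_MIN_NORMAL = 2.0 ** -6   # smallest normal E4M3 value


def choose_precision(values):
    """Pick "fp8" with a scale factor, or fall back to "fp16".

    Hypothetical rule: scale the tensor so its largest magnitude lands on
    the FP8 maximum, then use FP8 only if the smallest nonzero magnitude
    is still representable as a normal FP8 number after scaling.
    """
    magnitudes = [abs(v) for v in values if v != 0.0]
    if not magnitudes:
        # An all-zero (or empty) tensor fits in any format.
        return "fp8", 1.0

    amax = max(magnitudes)
    amin = min(magnitudes)
    scale = FP8_E4M3_MAX / amax

    if amin * scale >= FP8_E4M3_MIN_NORMAL:
        return "fp8", scale
    # Dynamic range is too wide for FP8: keep the layer in FP16, unscaled.
    return "fp16", 1.0


if __name__ == "__main__":
    print(choose_precision([0.5, 1.0, 2.0]))   # narrow range
    print(choose_precision([1e-6, 1000.0]))    # wide range
```

In this sketch, a layer whose values span a narrow range is scaled up and run in FP8, while a layer with a very wide range stays in FP16. A production system would track such statistics over many training steps rather than decide from a single tensor.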

The H100 GPU will feature 80 billion transistors and will be built using TSMC’s 4nm process. It promises speed-ups between 1.5 and 6 times compared to the Ampere A100 data center GPU that launched in 2020 and used TSMC’s 7nm process.

In addition to the Transformer Engine, the GPU will also feature a new confidential computing component.

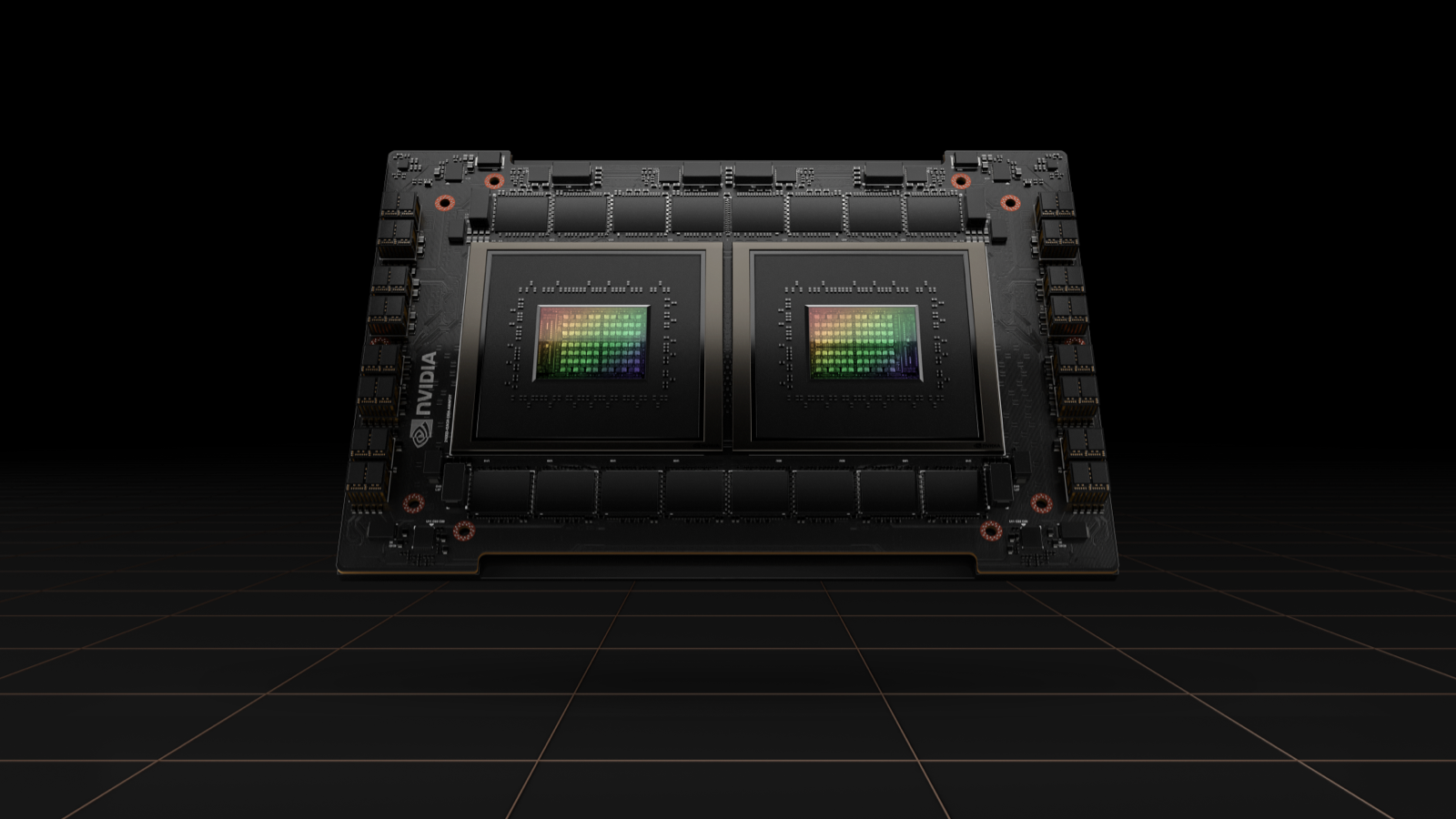

Grace (Hopper) Superchips

The Grace CPU Superchip is Nvidia’s first foray into a dedicated data center CPU. The Arm Neoverse-based chip will feature a whopping 144 cores with 1 terabyte per second of memory bandwidth. It actually combines two Grace CPUs connected over the company’s NVLink interconnect, an architecture reminiscent of Apple’s M1 Ultra.

The new CPU, which will use fast LPDDR5X memory, will be available in the first half of 2023 and promises to offer 2x the performance of traditional servers. Nvidia estimates the chip will reach 740 points on the SPECrate®2017_int_base benchmark, which would indeed put it in direct competition with high-end AMD and Intel data center processors (some of those score higher, though at the cost of lower performance per watt).

“A new type of data center has emerged — AI factories that process and refine mountains of data to produce intelligence,” said Jensen Huang, founder and CEO of Nvidia. “The Grace CPU Superchip offers the highest performance, memory bandwidth and NVIDIA software platforms in one chip and will shine as the CPU of the world’s AI infrastructure.”

In many ways, this new chip is the natural evolution of the Grace Hopper Superchip and Grace CPU the company announced last year (yes, these names are confusing, especially because Nvidia called the Grace Hopper Superchip the Nvidia Grace last year). The Grace Hopper Superchip combines a CPU and GPU into a single system-on-a-chip. This system, which will also launch in the first half of 2023, will feature a GPU with 600GB of memory for large models, and Nvidia promises that its memory bandwidth will be 30x higher than that of a GPU in a traditional server. These chips, Nvidia says, are meant for “giant-scale” AI and high-performance computing.

The Grace CPU Superchip is based on the Arm v9 architecture and can be configured as a standalone CPU system or for servers with up to eight Hopper-based GPUs.

The company says it is working with “leading HPC, supercomputing, hyperscale and cloud customers,” so chances are these systems are coming to a cloud provider near you sometime next year.